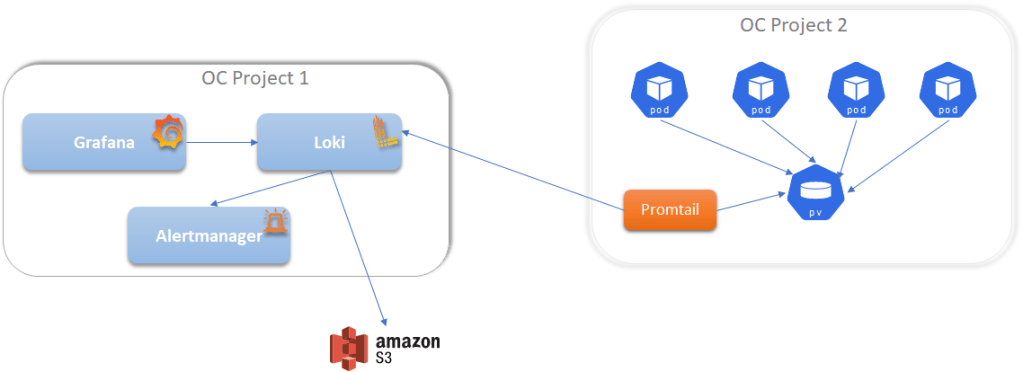

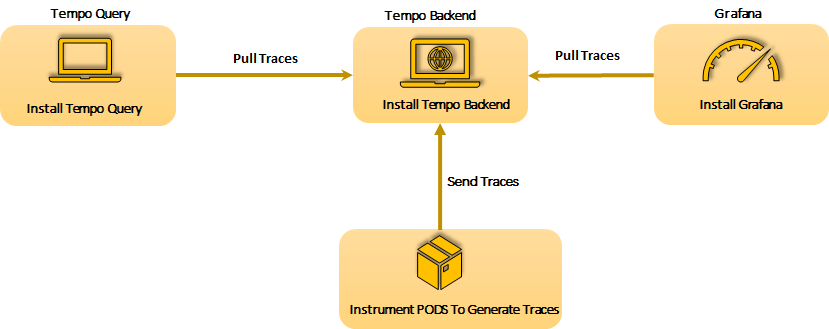

Architecture

Learn more about the modules here

Components

Deployment Strategy

Another possible strategy could be

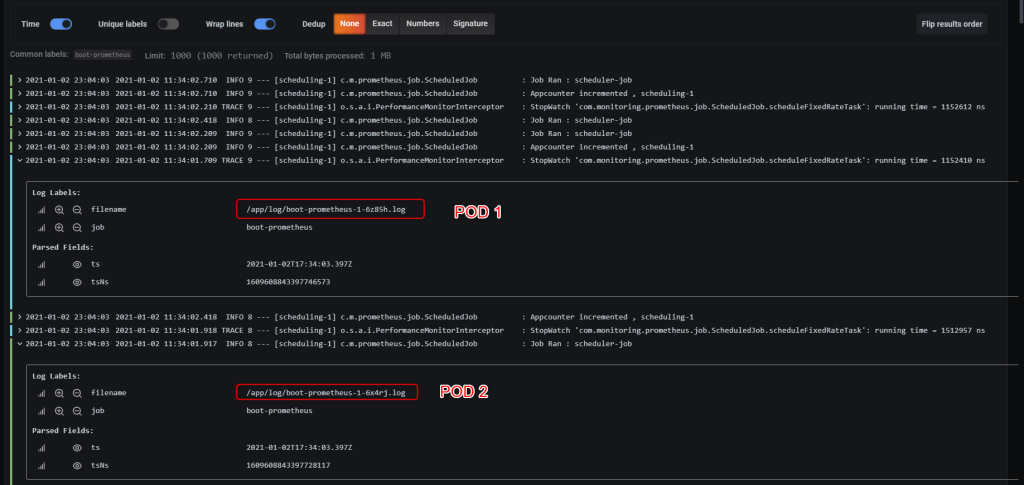

Distributed Tracing Solutions

Open Source Distributed Tracing:

- OpenTelemetry: the next major version of both OpenCensus and OpenTracing, supported by the Cloud Native Computing Foundation (CNCF)

- OpenTracing

- OpenCensus

- OpenZipkin

- Jaeger

- Datadog

- Apache SkyWalking

- Haystack

- Pinpoint

- Veneur

Enterprise Tracing Solutions:

- Amazon X-Ray

- Datadog

- Dynatrace

- Google Cloud Trace

- Honeycomb

- Instana

- Lightstep

- New Relic

- Wavefront

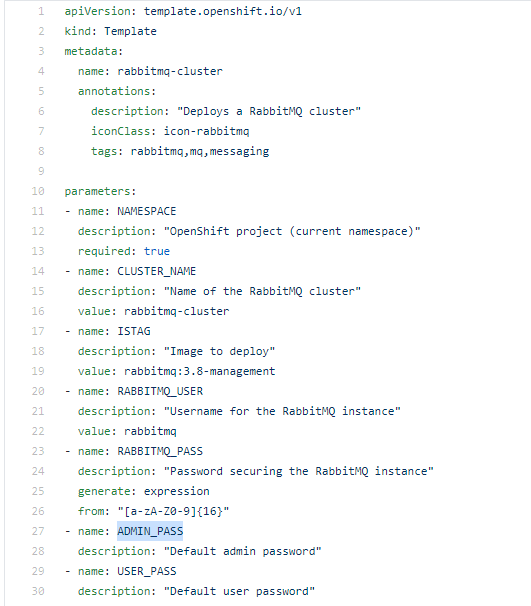

Prerequisites

Dockerfile

For now, we have to build a custom Docker image because of a bug.

FROM grafana/tempo-query:0.5.0 AS builder

FROM alpine:latest

COPY --from=builder /tmp/tempo-query /tmp/tempo-query
COPY --from=builder /go/bin/query-linux /go/bin/query-linux

ENV SPAN_STORAGE_TYPE=grpc-plugin \
    GRPC_STORAGE_PLUGIN_BINARY=/tmp/tempo-query

# OpenShift runs containers as an arbitrary UID in group 0, so make /tmp group-writable
RUN chgrp -R 0 /tmp && chmod -R g+rwX /tmp

EXPOSE 16686/tcp

ENTRYPOINT ["/go/bin/query-linux"]

Tempo Backend Config

tempo-s3.yaml

auth_enabled: false

server:
  http_listen_port: 3100
  grpc_server_max_recv_msg_size: 10485760
  grpc_server_max_send_msg_size: 10485760

distributor:
  receivers: # this configuration will listen on all ports and protocols that tempo is capable of.
    jaeger: # the receivers all come from the OpenTelemetry collector. more configuration information can
      protocols: # be found there: https://github.com/open-telemetry/opentelemetry-collector/tree/master/receiver
        thrift_http: #
        grpc: # for a production deployment you should only enable the receivers you need!
        thrift_binary:
        thrift_compact:
    zipkin:
    otlp:
      protocols:
        http:
        grpc:
    opencensus:

ingester:
  trace_idle_period: 10s # the length of time after a trace has not received spans to consider it complete and flush it
  max_block_bytes: 100 # cut the head block when it hits this size in bytes or ...
  #traces_per_block: 100
  max_block_duration: 5m # this much time passes

querier:
  frontend_worker:
    frontend_address: 127.0.0.1:9095

compactor:
  compaction:
    compaction_window: 1h # blocks in this time window will be compacted together
    max_compaction_objects: 1000000 # maximum size of compacted blocks
    block_retention: 336h
    compacted_block_retention: 10m
    flush_size_bytes: 5242880

storage:
  trace:
    backend: s3 # backend configuration to use
    block:
      bloom_filter_false_positive: .05 # bloom filter false positive rate. lower values create larger filters but fewer false positives
      index_downsample: 10 # number of traces per index record
      encoding: lz4-64k # block encoding/compression. options: none, gzip, lz4-64k, lz4-256k, lz4-1M, lz4, snappy, zstd
    wal:
      path: /tmp/tempo/wal # where to store the wal locally
    s3:
      endpoint: <s3-endpoint>
      bucket: tempo # how to store data in s3
      access_key: <access_key>
      secret_key: <secret_key>
      insecure: false
    pool:
      max_workers: 100 # the worker pool mainly drives querying, but is also used for polling the blocklist
      queue_depth: 10000
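The retention and window settings in this config are Go-style duration strings, which are easy to misread at a glance. As a quick sanity check, here is a minimal Python sketch that converts them to seconds. The helper name `parse_duration` is hypothetical and it only understands the `h`, `m`, and `s` suffixes that appear in the config above.

```python
import re

# Seconds per unit for the h/m/s suffixes used in the config above.
_UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_duration(text: str) -> int:
    """Convert a duration such as '336h' or '1h30m' into whole seconds."""
    parts = re.findall(r"(\d+)([hms])", text)
    # Reject anything that is not purely a sequence of number+unit pairs.
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(int(n) * _UNIT_SECONDS[u] for n, u in parts)


if __name__ == "__main__":
    # block_retention: 336h is 14 days
    print(parse_duration("336h") // 86400, "days")
    # compacted_block_retention: 10m is 600 seconds
    print(parse_duration("10m"), "seconds")
```

So with the values above, blocks are kept for 14 days, and compacted blocks are removed 10 minutes after compaction.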

Secret

oc create secret generic app-secret --from-file=tempo.yaml=tempo-s3.yaml

openssl base64 -A -in tempo-s3.yaml -out temp-s3-base64encoded.txt

oc create secret generic app-secret --from-literal=tempo.yaml=<BASE64EncodedYaml>
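To make the `openssl base64 -A` step concrete, the following minimal Python sketch produces the same kind of single-line base64 string and shows that decoding it restores the original bytes. The function names and the sample content are made up for illustration. Note that `oc create secret generic` encodes the values it is given when it stores them, so if a pre-encoded string is passed through `--from-literal`, it is worth confirming that the mounted `tempo.yaml` contains YAML rather than base64 text.

```python
import base64


def encode_config(raw: bytes) -> str:
    """Return single-line base64, comparable to `openssl base64 -A`."""
    return base64.b64encode(raw).decode("ascii")


def decode_config(encoded: str) -> bytes:
    """Reverse encode_config and return the original bytes."""
    return base64.b64decode(encoded)


if __name__ == "__main__":
    sample = b"auth_enabled: false\n"  # stand-in for the contents of tempo-s3.yaml
    encoded = encode_config(sample)
    print(encoded)
    print(decode_config(encoded) == sample)
```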

OpenShift Deployment

tempo-monolithic.yaml

apiVersion: v1
kind: Template
metadata:
  name: Tempo
  annotations:
    "openshift.io/display-name": Tempo
    description: |
      A Tracing solution for an OpenShift cluster.
    iconClass: fa fa-cogs
    tags: "Tracing, Tempo, time-series"
parameters:
  - name: TEMP_QUERY_IMAGE
    description: "Tempo query docker image name"
  - name: APP_NAME
    description: "Value for app label."
  - name: NAME_SPACE
    description: "The name of the namespace (Openshift project)"
  - name: REPLICAS
    description: "number of replicas"
    value: "1"
objects:
  - apiVersion: apps.openshift.io/v1
    kind: DeploymentConfig
    metadata:
      labels:
        app: tempo
      name: tempo
      namespace: "${NAME_SPACE}"
    spec:
      replicas: "${{REPLICAS}}"
      selector:
        app: tempo
      template:
        metadata:
          labels:
            app: tempo
          name: tempo
          annotations:
            prometheus.io/scrape: "true"
            #prometheus.io/port: "3100"
            prometheus.io/path: "/metrics"
        spec:
          containers:
            - name: tempo
              image: grafana/tempo:0.5.0
              imagePullPolicy: "Always"
              args:
                - -config.file=/etc/tempo/tempo.yaml
              ports:
                - name: metrics
                  containerPort: 3100
                - name: http
                  containerPort: 3100
                - name: ot
                  containerPort: 55680
                - name: tc
                  containerPort: 6831
                - name: tb
                  containerPort: 6832
                - name: th
                  containerPort: 14268
                - name: tg
                  containerPort: 14250
                - name: zipkin
                  containerPort: 9411
              livenessProbe:
                failureThreshold: 3
                httpGet:
                  path: /ready
                  port: metrics
                  scheme: HTTP
                initialDelaySeconds: 60
                periodSeconds: 5
                successThreshold: 1
                timeoutSeconds: 1
              readinessProbe:
                failureThreshold: 3
                httpGet:
                  path: /ready
                  port: metrics
                  scheme: HTTP
                initialDelaySeconds: 30
                periodSeconds: 5
                successThreshold: 1
                timeoutSeconds: 1
              volumeMounts:
                - name: tempo-config
                  mountPath: /etc/tempo
          volumes:
            - name: tempo-config
              secret:
                secretName: app-secret
                items:
                  - key: tempo.yaml
                    path: tempo.yaml
  - apiVersion: apps.openshift.io/v1
    kind: DeploymentConfig
    metadata:
      labels:
        app: tempo-query
      name: tempo-query
      namespace: "${NAME_SPACE}"
    spec:
      replicas: "${{REPLICAS}}"
      selector:
        app: tempo-query
      template:
        metadata:
          labels:
            app: tempo-query
          name: tempo-query
        spec:
          containers:
            - name: tempo-query
              image: ${TEMP_QUERY_IMAGE}
              imagePullPolicy: "Always"
              args:
                - --grpc-storage-plugin.configuration-file=/etc/tempo/tempo-query.yaml
              ports:
                - name: http
                  containerPort: 16686
              livenessProbe:
                failureThreshold: 3
                tcpSocket:
                  port: http # named port
                initialDelaySeconds: 60
                periodSeconds: 5
                successThreshold: 1
                timeoutSeconds: 1
              readinessProbe:
                failureThreshold: 3
                tcpSocket:
                  port: http # named port
                initialDelaySeconds: 30
                periodSeconds: 5
                successThreshold: 1
                timeoutSeconds: 1
              volumeMounts:
                - name: tempo-query-config-vol
                  mountPath: /etc/tempo
          volumes:
            - name: tempo-query-config-vol
              configMap:
                defaultMode: 420
                name: tempo-query-config
  - apiVersion: v1
    kind: ConfigMap
    metadata:
      name: tempo-query-config
    data:
      tempo-query.yaml: |-
        backend: "tempo:3100"
  - apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: tempo-query
      name: tempo-query
      namespace: "${NAME_SPACE}"
    spec:
      ports:
        - name: tempo-query
          port: 16686
          protocol: TCP
          targetPort: http
      selector:
        app: tempo-query
  - apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: tempo
      name: tempo
      namespace: "${NAME_SPACE}"
    spec:
      ports:
        - name: http
          port: 3100
          protocol: TCP
          targetPort: http
        - name: zipkin
          port: 9411
          protocol: TCP
          targetPort: zipkin
      selector:
        app: tempo
  - apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: tempo
      name: tempo-egress
      namespace: "${NAME_SPACE}"
    spec:
      ports:
        - name: ot
          port: 55680
          protocol: TCP
          targetPort: ot
        - name: tc
          port: 6831
          protocol: TCP
          targetPort: tc
        - name: tb
          port: 6832
          protocol: TCP
          targetPort: tb
        - name: th
          port: 14268
          protocol: TCP
          targetPort: th
        - name: tg
          port: 14250
          protocol: TCP
          targetPort: tg
      loadBalancerIP:
      type: LoadBalancer
      selector:
        app: tempo
  - apiVersion: route.openshift.io/v1
    kind: Route
    metadata:
      name: tempo-query
      namespace: "${NAME_SPACE}"
    spec:
      host: app-trace-fr.org.com
      port:
        targetPort: tempo-query
      to:
        kind: Service
        name: tempo-query
        weight: 100
      wildcardPolicy: None
  - apiVersion: route.openshift.io/v1
    kind: Route
    metadata:
      labels:
        name: tempo
      name: tempo-zipkin
      namespace: "${NAME_SPACE}"
    spec:
      host: app-trace.org.com
      path: /zipkin
      port:
        targetPort: zipkin
      to:
        kind: Service
        name: tempo
        weight: 100
      wildcardPolicy: None
  - apiVersion: route.openshift.io/v1
    kind: Route
    metadata:
      labels:
        name: tempo
      name: tempo-http
      namespace: "${NAME_SPACE}"
    spec:
      host: app-trace.org.com
      path: /
      port:
        targetPort: http
      to:
        kind: Service
        name: tempo
        weight: 100
      wildcardPolicy: None

oc process -f tempo-monolithic.yaml -p TEMP_QUERY_IMAGE=tempo:1.0.18-main -p APP_NAME=tempo -p NAME_SPACE=demo-app -p REPLICAS=1 | oc apply -f -
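The template uses two parameter forms: `${NAME_SPACE}` and `"${{REPLICAS}}"`. In OpenShift templates the single-brace form is substituted as a string, while the quoted double-brace form is replaced by the bare value so that a field such as `replicas` receives a number. The Python sketch below only imitates that behaviour on individual lines to illustrate the difference; it is not how `oc process` is implemented, and the `substitute` helper is hypothetical.

```python
import re


def substitute(text: str, params: dict) -> str:
    """Imitate ${NAME} and "${{NAME}}" substitution on a line of template text."""
    # "${{NAME}}" together with its surrounding quotes becomes the bare value,
    # so numeric parameters end up unquoted.
    text = re.sub(r'"\$\{\{(\w+)\}\}"', lambda m: params[m.group(1)], text)
    # ${NAME} is replaced in place and keeps whatever quoting surrounds it.
    return re.sub(r"\$\{(\w+)\}", lambda m: params[m.group(1)], text)


if __name__ == "__main__":
    params = {
        "TEMP_QUERY_IMAGE": "tempo:1.0.18-main",
        "NAME_SPACE": "demo-app",
        "REPLICAS": "1",
    }
    print(substitute('replicas: "${{REPLICAS}}"', params))    # replicas: 1
    print(substitute('namespace: "${NAME_SPACE}"', params))   # namespace: "demo-app"
    print(substitute("image: ${TEMP_QUERY_IMAGE}", params))   # image: tempo:1.0.18-main
```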

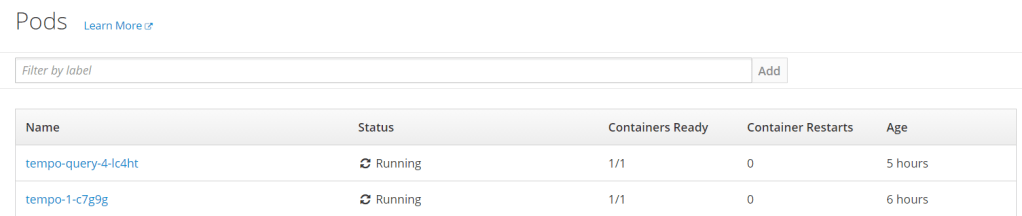

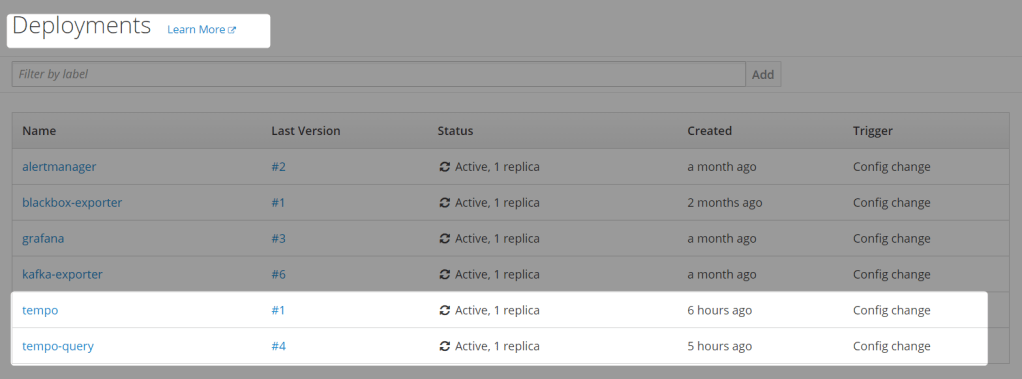

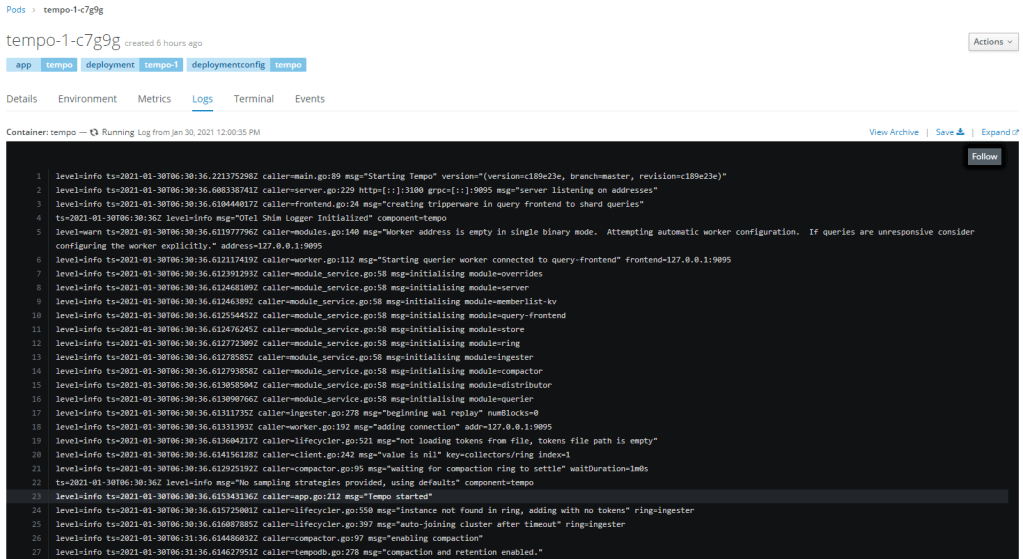

Artifacts created

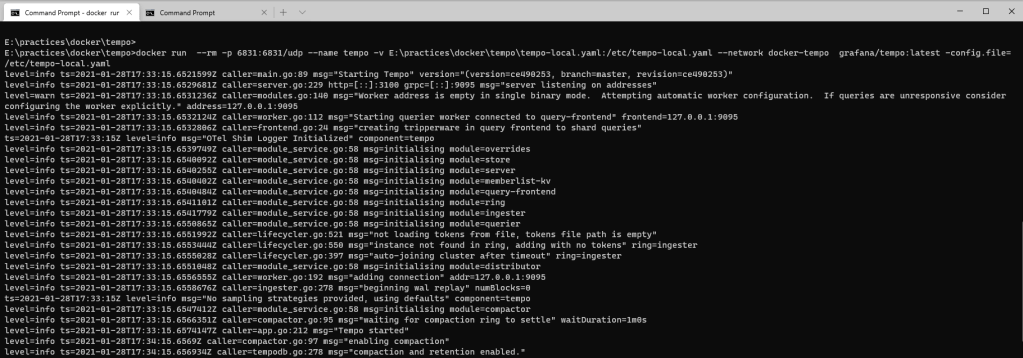

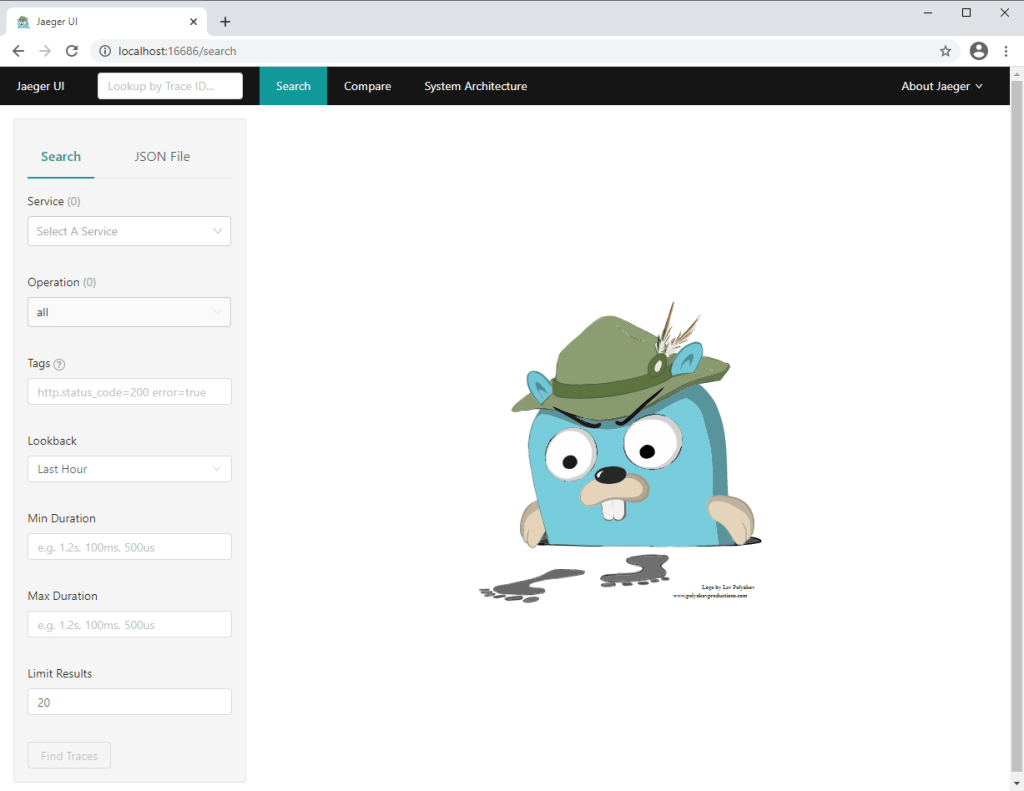

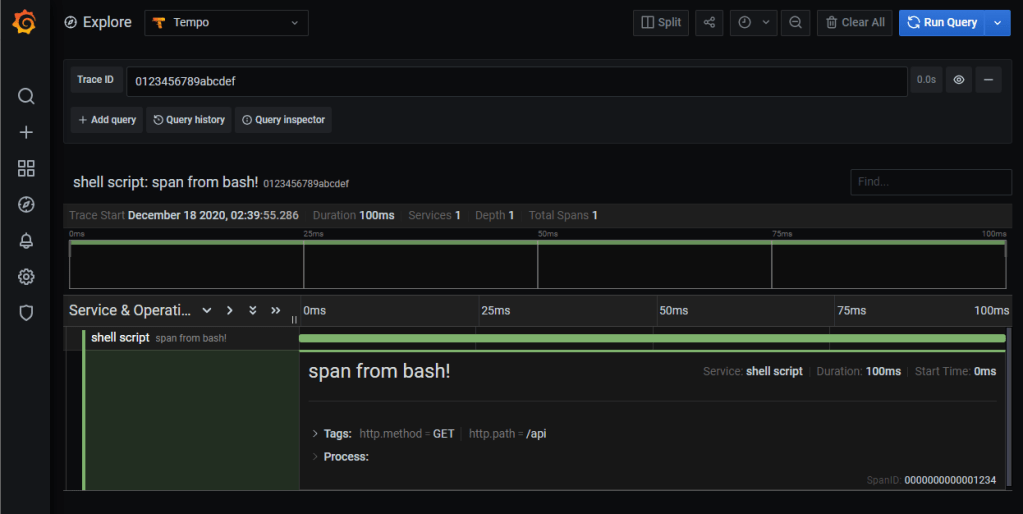

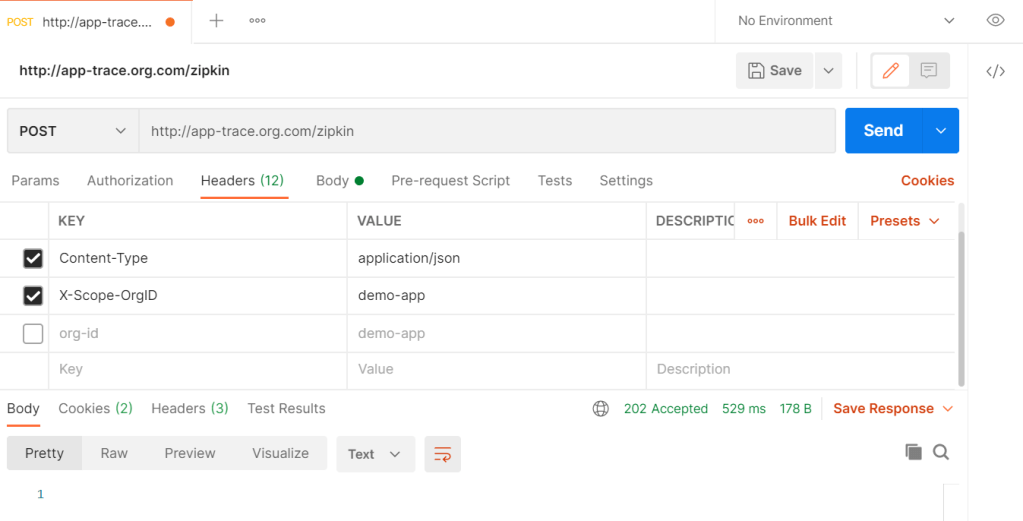

Sending traces

[{
  "id": "1234",
  "traceId": "0123456789abcdef",
  "timestamp": 1608239395286533,
  "duration": 100000,
  "name": "span from bash!",
  "tags": {
    "http.method": "GET",
    "http.path": "/api"
  },
  "localEndpoint": {
    "serviceName": "shell script"
  }
}]
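The span above is a Zipkin v2 JSON payload. As a rough sketch of how it could be posted to the Zipkin receiver, the Python below builds the same structure with a current timestamp and wraps it in an HTTP POST. The URL is an assumption (port 9411 reached through a local port-forward); in this deployment the `tempo-zipkin` route would be the externally reachable address. The function names are illustrative only.

```python
import json
import time
import urllib.request

# Assumption: the Zipkin receiver (port 9411) is reachable at this address.
ZIPKIN_URL = "http://localhost:9411"


def build_span(name, service, trace_id="0123456789abcdef", span_id="1234"):
    """Build a one-element Zipkin v2 span list shaped like the example above."""
    return [{
        "id": span_id,
        "traceId": trace_id,
        "timestamp": int(time.time() * 1_000_000),  # microseconds since the epoch
        "duration": 100000,                         # microseconds
        "name": name,
        "tags": {"http.method": "GET", "http.path": "/api"},
        "localEndpoint": {"serviceName": service},
    }]


def build_request(spans, url=ZIPKIN_URL):
    """Wrap the spans in a JSON POST request without sending it."""
    body = json.dumps(spans).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def send(spans, url=ZIPKIN_URL):
    """Send the spans and return the HTTP status code."""
    with urllib.request.urlopen(build_request(spans, url), timeout=5) as resp:
        return resp.status


# Usage (requires a reachable receiver):
#   send(build_span("span from bash!", "shell script"))
```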

X-Scope-OrgID
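`X-Scope-OrgID` is the HTTP header Tempo uses to identify the tenant when multi-tenancy is turned on. With `auth_enabled: false`, as in the config above, it should not be required. The sketch below shows one way to attach it to a trace lookup request; the base URL, the `/api/traces/<traceID>` path, and the tenant name `team-a` are assumptions to verify against the Tempo version in use.

```python
import urllib.request

# Assumption: Tempo's HTTP port (3100 in the config above) is reachable here.
TEMPO_URL = "http://localhost:3100"


def trace_request(trace_id, tenant=None, base_url=TEMPO_URL):
    """Build a trace lookup request, adding the tenant header when one is given."""
    req = urllib.request.Request(f"{base_url}/api/traces/{trace_id}")
    if tenant is not None:
        req.add_header("X-Scope-OrgID", tenant)
    return req


# Usage (requires a reachable Tempo instance):
#   with urllib.request.urlopen(trace_request("0123456789abcdef", "team-a")) as resp:
#       print(resp.status)
```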

Data Getting stored in S3

Bigger Picture

Another Strategy could be

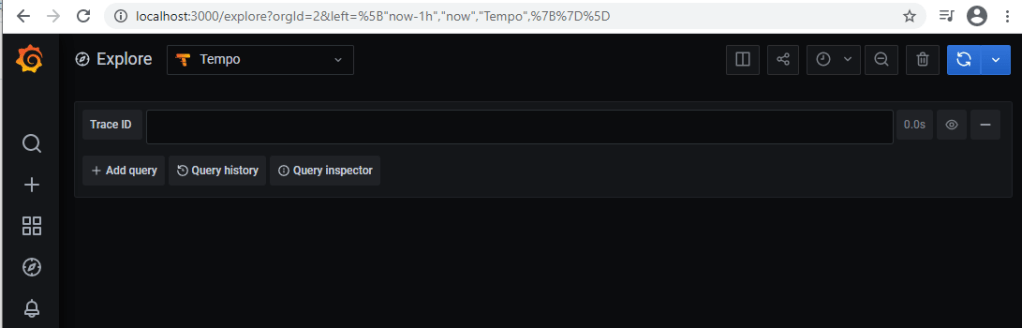

Grafana

References

- OpenShift Templates

- Cloud Native Computing Foundation projects

- Jaeger and OpenTelemetry

- https://www.trustradius.com/observability-tools#overview

- OpenTelemetry Specification