[DL Reading Group] Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning


2023/6/30 Deep Learning JP http://deeplearning.jp/seminar-2/


![DEEP LEARNING JP (DL Papers), http://deeplearning.jp/ : "Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning", ICLR 2023. Kensuke Wakasugi, Panasonic Holdings Corporation.](https://image.slidesharecdn.com/230630dlhdv2-230630030621-2ab756c1/85/DL-Towards-Understanding-Ensemble-Knowledge-Distillation-and-Self-Distillation-in-Deep-Learning-1-320.jpg)

【DL輪読会】SimPer: Simple self-supervised learning of periodic targets( ICLR 2023 )

【DL輪読会】SimPer: Simple self-supervised learning of periodic targets( ICLR 2023 )Deep Learning JP 2023/7/28

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】"Secrets of RLHF in Large Language Models Part I: PPO"

【DL輪読会】"Secrets of RLHF in Large Language Models Part I: PPO"Deep Learning JP 2023/7/21

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】Llama 2: Open Foundation and Fine-Tuned Chat Models

【DL輪読会】Llama 2: Open Foundation and Fine-Tuned Chat ModelsDeep Learning JP 2023/7/20

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】"Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware"

【DL輪読会】"Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware"Deep Learning JP 2023/7/14

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】Parameter is Not All You Need:Starting from Non-Parametric Networks fo...

【DL輪読会】Parameter is Not All You Need:Starting from Non-Parametric Networks fo...Deep Learning JP 2023/7/7

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】Drag Your GAN: Interactive Point-based Manipulation on the Generative ...

【DL輪読会】Drag Your GAN: Interactive Point-based Manipulation on the Generative ...Deep Learning JP 2023/6/30

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】VIP: Towards Universal Visual Reward and Representation via Value-Impl...

【DL輪読会】VIP: Towards Universal Visual Reward and Representation via Value-Impl...Deep Learning JP 2023/6/23

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】Deep Transformers without Shortcuts: Modifying Self-attention for Fait...

【DL輪読会】Deep Transformers without Shortcuts: Modifying Self-attention for Fait...Deep Learning JP The document proposes modifications to self-attention in Transformers to improve faithful signal propagation without shortcuts like skip connections or layer normalization. Specifically, it introduces a normalization-free network that uses dynamic isometry to ensure unitary transformations, a ReZero technique to implement skip connections without adding shortcuts, and modifications to attention and normalization techniques to address issues like rank collapse in Transformers. The methods are evaluated on tasks like CIFAR-10 classification and language modeling, demonstrating improved performance over standard Transformer architectures.

【DL輪読会】マルチモーダル 基盤モデル

【DL輪読会】マルチモーダル 基盤モデルDeep Learning JP 2023/6/9

Deep Learning JP

http://deeplearning.jp/seminar-2/

【DL輪読会】TrOCR: Transformer-based Optical Character Recognition with Pre-traine...

【DL輪読会】TrOCR: Transformer-based Optical Character Recognition with Pre-traine...Deep Learning JP 2023/6/9

Deep Learning JP

http://deeplearning.jp/seminar-2/

Ad

[DL Reading Group] Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning

- 1. DEEP LEARNING JP [DL Papers] http://deeplearning.jp/ "Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning", ICLR 2023. Kensuke Wakasugi, Panasonic Holdings Corporation.

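- 2. Bibliographic information. Title: Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning. Authors: • Zeyuan Allen-Zhu (Meta FAIR Labs) • Yuanzhi Li (Mohamed bin Zayed University of AI). Other: • ICLR 2023 notable-top-5% (OpenReview). Why this paper: • Selected from the ICLR 2023 notable-top-5% papers. • I was interested in the working principles behind ensembles and distillation. ※ Unless otherwise noted, all figures and tables in these slides are taken from the paper above.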

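- 3. Introduction ■Contributions • Proposes a data structure called "multi-view" • Explains, through theory and experiments, how ensembles and distillation work ■Background and open problem • Simple-average ensembles of networks that differ only in their initialization, as well as distillation, improve predictive performance, but there has been no theoretical explanation of why this happens.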

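- 4. Paper overview: the paper shows, theoretically and experimentally, that ensembles and distillation have different effects under the NTK and in deep learning (DL). The four settings compared:
  - Training average (train the average directly): $f(x) = \frac{1}{N}\sum_i f_i(x)$
  - Ensemble (average after training): $\frac{1}{N}\sum_i f_i(x)$
  - Knowledge distillation: $\frac{1}{N}\sum_i f_i(x) \to g(x)$
  - Self-distillation: $f(x) \to g(x)$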
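To make the difference between the first two settings concrete, here is a minimal toy sketch. It assumes scalar outputs and squared loss (the paper itself studies classification), and all function names are hypothetical. Under training average, every member receives the gradient of the shared averaged output, so once the average fits the target no member moves. In an ensemble, each member is fit on its own residual and the averaging happens only afterwards.

```python
def grads_training_average(outputs, y):
    """Gradient of 0.5 * (mean(outputs) - y)**2 w.r.t. each member's output.
    All members share one residual, so their updates are coupled."""
    n = len(outputs)
    residual = sum(outputs) / n - y
    return [residual / n for _ in outputs]

def grads_independent(outputs, y):
    """Gradient of sum_i 0.5 * (outputs[i] - y)**2 w.r.t. each member's output.
    Each member is fit on its own residual."""
    return [f - y for f in outputs]

def ensemble_predict(outputs):
    """Ensemble: average the members' outputs only after training."""
    return sum(outputs) / len(outputs)

outputs, y = [0.5, 1.5], 1.0   # two members, one target
print(grads_training_average(outputs, y))  # [0.0, 0.0]: the average already fits y
print(grads_independent(outputs, y))       # [-0.5, 0.5]: each member is still pulled toward y
print(ensemble_predict(outputs))           # 1.0
```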

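- 5. Background (1). Boosting: where the coefficients associated with the combinations of the single models are actually trained, instead of simply taking average; (2). Bootstrapping/Bagging: the training data are different for each single model; (3). Ensemble of models of different types and architectures; (4). Ensemble of random features or decision trees. ■Theoretical analyses of ensembles • Analyses exist for several settings such as those above, but none for simple-average ensembles → Focus on the theoretical analysis of simple-average ensemble learning ■Theoretical analysis of simple-average ensemble learning • For models that differ only in their random initialization (training data, learning rate, and architecture fixed), the paper attempts to explain the following phenomena theoretically: Training average does not work: combining the models before training and training the average gives no benefit. Knowledge distillation works: multiple models can be distilled into a single model. Self-distillation works: performance improves even when distilling from one single model into another single model.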

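- 6. Neural Tangent Kernel
  - (1) Parameter update rule: $\mathbf{w}_{t+1} = \mathbf{w}_t - \eta \frac{\partial \mathrm{loss}}{\partial \mathbf{w}}$
  - (2) Treated as a differential equation (learning rate absorbed into time, squared loss): $\frac{\partial \mathbf{w}}{\partial t} = -\frac{\partial \mathrm{loss}}{\partial \mathbf{w}} = -\frac{\partial \mathbf{y}}{\partial \mathbf{w}}(\mathbf{y} - \bar{\mathbf{y}})$
  - (3) Change of the output $\mathbf{y}$ during training: $\frac{\partial \mathbf{y}}{\partial t} = \left(\frac{\partial \mathbf{y}}{\partial \mathbf{w}}\right)^{T}\frac{\partial \mathbf{w}}{\partial t} = -\left(\frac{\partial \mathbf{y}}{\partial \mathbf{w}}\right)^{T}\frac{\partial \mathbf{y}}{\partial \mathbf{w}}(\mathbf{y} - \bar{\mathbf{y}})$
  - (4) Neural Tangent Kernel: $\boldsymbol{\phi} = \frac{\partial \mathbf{y}}{\partial \mathbf{w}}$, $\mathbf{K} = \left(\frac{\partial \mathbf{y}}{\partial \mathbf{w}}\right)^{T}\frac{\partial \mathbf{y}}{\partial \mathbf{w}}$ (※ $\mathbf{y}$ stacks the outputs for multiple training examples into one vector. ※ $\boldsymbol{\phi}$ plays the role of the feature map into a high-dimensional feature space in kernel methods.)
  - (5) As width → ∞, $\mathbf{K}$ → const, so $\frac{\partial \mathbf{y}}{\partial t} = -\mathbf{K}(\mathbf{y} - \bar{\mathbf{y}})$
  - (6) For $\mathbf{d} = \mathbf{y} - \bar{\mathbf{y}}$: $\frac{\partial \mathbf{d}}{\partial t} = -\mathbf{K}\mathbf{d}$, hence $\mathbf{d}(t) = e^{-\mathbf{K}t}\mathbf{d}(0)$ (※ $\mathbf{K}$ is positive definite, and its eigenvalues set the speed of convergence.)
  - Notation: trainable parameters $\mathbf{w}$, output $\mathbf{y}$, objective loss, training data $x$, ground-truth labels $\bar{\mathbf{y}}$, function $f$.
  - The NTK linearly approximates how the output $\mathbf{y}$ changes during training, and training converges to the global optimum.
  - References: Neural Tangent Kernel: Convergence and Generalization in Neural Networks (neurips.cc); Understanding the Neural Tangent Kernel – Rajat's Blog – A blog about machine learning and math. (rajatvd.github.io)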
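The linearized dynamics in steps 5 and 6 can be checked numerically. This is a minimal sketch, assuming a fixed random Jacobian `phi` standing in for ∂y/∂w (the infinite-width assumption that K stays constant) and a learning rate absorbed into time. It compares the closed form $e^{-\mathbf{K}t}\mathbf{d}(0)$, computed by eigendecomposition, with explicit Euler integration, which is gradient descent with a small step in the linearized model.

```python
import numpy as np

rng = np.random.default_rng(0)
num_params, num_data = 50, 4

# Fixed Jacobian phi = dy/dw (num_params x num_data); K is assumed constant (infinite width).
phi = rng.normal(size=(num_params, num_data)) / np.sqrt(num_params)
K = phi.T @ phi                   # NTK Gram matrix (num_data x num_data)
d0 = rng.normal(size=num_data)    # initial residual d(0) = y(0) - y_bar

def closed_form(t):
    """d(t) = exp(-K t) d(0), via the eigendecomposition of the symmetric matrix K."""
    eigvals, eigvecs = np.linalg.eigh(K)
    return eigvecs @ (np.exp(-eigvals * t) * (eigvecs.T @ d0))

def euler(t, steps=20_000):
    """Explicit Euler for dd/dt = -K d, i.e. gradient descent with learning rate t / steps."""
    d = d0.copy()
    dt = t / steps
    for _ in range(steps):
        d = d - dt * (K @ d)
    return d

print(np.max(np.abs(closed_form(2.0) - euler(2.0))))  # small discretization error
print(np.linalg.eigvalsh(K))  # all positive here, so every component of d decays
```

Each eigen-direction of K decays at its own rate, which is the sense in which the eigenvalues set the speed of convergence.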

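- 7. Effects of ensembles, training average, and distillation under the NTK ■Output approximation by the NTK • The output is expressed as a linear combination of the NTK features 𝝓 ■Ensemble • More 𝝓 enter the linear combination → performance improves through feature selection ※ I suspect the gain from NTK ensembles actually comes from variance reduction, but the paper describes it as feature selection. → Ensembles and training average work; distillation does not ■Training average • More 𝝓 enter the linear combination, and W is trained as well → performance improves through feature selection ■Distillation • The student does not have the selected features, so performance does not improve × → Under the NTK, performance improves through feature selection; in DL, a different mechanism (feature learning) may be responsible.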
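The "more 𝝓" argument rests on a simple identity: in the NTK / random-feature regime each model is linear in fixed features, so averaging two models is the same as one linear model over the concatenated features. A minimal sketch, assuming random ReLU features and arbitrary readout weights (the helper `random_features` is hypothetical). It checks only this identity, not the feature-selection or variance claims.

```python
import numpy as np

def random_features(x, seed, width=64):
    """Fixed, untrained ReLU features; `seed` plays the role of the random initialization."""
    W = np.random.default_rng(seed).normal(size=(x.shape[1], width))
    return np.maximum(x @ W, 0.0)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 5))                        # 8 inputs of dimension 5
phi1, phi2 = random_features(x, 1), random_features(x, 2)
a1, a2 = rng.normal(size=64), rng.normal(size=64)  # readout weights of the two models

ensemble = 0.5 * (phi1 @ a1 + phi2 @ a2)           # average of two random-feature models
concat = np.hstack([phi1, phi2]) @ np.concatenate([0.5 * a1, 0.5 * a2])  # one model, 2x features
print(np.allclose(ensemble, concat))  # True
```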

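- 9. Problem setting ■In light of related results on the NTK and DL, the paper considers the following setting: • An input distribution more structured than a Gaussian; no label noise • Training is perfect (zero training error), and test accuracy does not vary across runs • Multiple models that differ only in their random initialization • No differences in architecture, training data, or training algorithm • Training never fails. Under these conditions, the paper proposes multi-view as the idea that explains the performance gain from ensembles. → Multi-view is proposed as a hypothesis that explains the various observations.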

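- 10. multi-view ■Example of multi-view data • The components (views) of a car are taken to be window, headlight, and wheel • However, some cars are missing one of the three • An image may also contain features indicating a cat → Each class label has multiple views, and both missing and co-occurring views are allowed.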

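- 11. multi-view: Different seeds acquire different views; this was also confirmed on real data. • Two-class classification, with two views per class • However, data that contain features of the other class may be single-view • During training, acquiring either one of the views is enough to reduce the loss, so some views remain unlearned • An ensemble covers the missing views, so performance improves • In distillation, soft labels encourage learning features that appear with a weight of only 0.1 ■Contrast with the NTK: DL can learn the required views, but the NTK (random features) cannot, which leads to the difference in behavior.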
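Why soft labels help can be read off the cross-entropy gradient with respect to the logits, which is $p - q$ for prediction $p$ and target $q$. In this hypothetical two-class (car, cat) sketch, a student that is already confident in "car" gets almost no gradient from the hard label, while a soft target with 0.1 on "cat" keeps pushing the "cat" logit up; in a network, that means strengthening whatever feature correlates with "cat". The logits and the 0.9/0.1 target are illustrative assumptions, not values from the paper.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy_grad(logits, target):
    """d/dlogits of -sum_j target_j * log softmax(logits)_j, which equals p - target."""
    p = softmax(logits)
    return [pj - qj for pj, qj in zip(p, target)]

logits = [4.0, -4.0]  # student already confident in class 0 ("car")
hard = [1.0, 0.0]     # one-hot label
soft = [0.9, 0.1]     # hypothetical teacher output with 0.1 on class 1 ("cat")

print(cross_entropy_grad(logits, hard))  # both entries near 0: little left to learn
print(cross_entropy_grad(logits, soft))  # "cat" entry near -0.1: descent raises the cat logit
```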

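- 12. Theoretical analysis: data structure. The paper considers data in which views are embedded in P patches. • Assumes a Vision Transformer-like input format • The proportions of multi-view and single-view data, among other things, are parameters • Throughout the analysis, the various parameters are expressed in terms of the number of classes k (※ the reason is unclear to me).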
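A hypothetical generator in the spirit of this data structure is sketched below. It assumes k classes with two views each, P patches, a single-view probability `mu`, and one weak (0.1) feature from another class per image; these choices and all names are illustrative and do not reproduce the paper's exact distribution.

```python
import random

def make_example(label, k, P, mu, rng, other_weight=0.1):
    """Return (patches, label); each patch maps (class, view) -> feature weight."""
    patches = [dict() for _ in range(P)]
    if rng.random() < mu:
        views = [rng.randrange(2)]  # single-view: one view of the true class
    else:
        views = [0, 1]              # multi-view: both views of the true class
    for v in views:
        patches[rng.randrange(P)][(label, v)] = 1.0
    # weak feature of another class, e.g. a cat-like patch inside a car image
    other = rng.choice([c for c in range(k) if c != label])
    patches[rng.randrange(P)][(other, rng.randrange(2))] = other_weight
    return patches, label

def views_present(patches, label):
    """Views of `label` that appear with full weight somewhere in the image."""
    return {v for p in patches for (c, v), w in p.items() if c == label and w == 1.0}

rng = random.Random(0)
k, P, mu = 10, 16, 0.2
data = [make_example(rng.randrange(k), k, P, mu, rng) for _ in range(5000)]
frac_single = sum(len(views_present(x, y)) == 1 for x, y in data) / len(data)
print(frac_single)  # close to mu
```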

- 14. Training: a standard training procedure.

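- 15. Test accuracy • With sufficiently high probability, training is perfect, and the test error lies between 0.49μ and 0.51μ • μ: fraction of single-view data • 1-μ: fraction of multi-view data → The test accuracy is given analytically.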
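The ≈0.5μ figure has a simple intuition, sketched here as a toy Monte Carlo under simplifying assumptions that are not the paper's: a trained model has learned exactly one of the two views of each class, multi-view test points are always classified correctly, and a single-view test point is classified correctly only if it shows the learned view. This illustrates the intuition, not the paper's proof.

```python
import random

def toy_single_model_error(mu, k=10, n_test=200_000, seed=0):
    """Toy: the model learned one random view per class; it errs only on single-view
    test points whose single view is the one it did not learn."""
    rng = random.Random(seed)
    learned = [rng.randrange(2) for _ in range(k)]  # view learned for each class (seed-dependent)
    errors = 0
    for _ in range(n_test):
        c = rng.randrange(k)              # true class of the test point
        if rng.random() < mu:             # single-view test point
            shown = rng.randrange(2)      # which view it contains
            if shown != learned[c]:
                errors += 1
        # multi-view points contain both views, so they are always correct here
    return errors / n_test

mu = 0.2
print(toy_single_model_error(mu) / mu)  # roughly 0.5
```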

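- 16. Ensemble accuracy • With sufficiently high probability, training is perfect, and the ensemble model's test error is < 0.001μ • μ: fraction of single-view data • 1-μ: fraction of multi-view data → The ensemble model performs better.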
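Extending the same toy assumptions so that the averaged ensemble is correct whenever at least one member learned the view shown in a single-view point, the ensemble error becomes μ·2^(-N) for N independently seeded members. Ten members give just under 0.001μ, and two members give 0.25μ, the two-model level that slide 18 associates with self-distillation. These numbers are toy arithmetic under stated assumptions, not the paper's bounds; the function names are hypothetical.

```python
import random

def toy_ensemble_error(mu, n_models):
    """Closed form: a single-view point is missed only if every member learned the other view."""
    return mu * 0.5 ** n_models

def toy_ensemble_error_mc(mu, n_models, n_test=100_000, seed=0):
    """Monte Carlo check of the same toy; members are resampled per test point,
    which leaves the expected error unchanged because classes are symmetric."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(n_test):
        learned = {rng.randrange(2) for _ in range(n_models)}  # views covered by the ensemble
        if rng.random() < mu and rng.randrange(2) not in learned:
            errors += 1
    return errors / n_test

for n in (1, 2, 10):
    print(n, toy_ensemble_error(1.0, n))
# 1 0.5
# 2 0.25
# 10 0.0009765625
```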

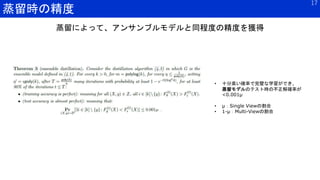

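- 17. Accuracy after distillation • With sufficiently high probability, training is perfect, and the distilled model's test error is < 0.001μ • μ: fraction of single-view data • 1-μ: fraction of multi-view data → Distillation achieves accuracy comparable to the ensemble model.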

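- 18. Accuracy of self-distillation • Because it corresponds to a two-model ensemble, its accuracy is lower than the full ensemble's, but it still improves on a single model • μ: fraction of single-view data • 1-μ: fraction of multi-view data → Self-distillation achieves accuracy equivalent to a two-model ensemble.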

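- 21. Summary and impressions. Summary • Proposed the multi-view hypothesis. • Theoretically proved how ensembles work differently under the NTK and in DL. • Extensions toward feature-acquisition methods based on the multi-view hypothesis, such as data augmentation with random cropping, are expected. Impressions • Interesting that the analysis goes as far as modeling the data structure. • Even within what we simply call "ensembles", the underlying mechanisms differ, and the paper seems to organize part of this well. Dropout and similar techniques could probably be explained in the same way. • As the summary says, applications to improving generalization are promising.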
