Custom Pregel Algorithms in ArangoDB

These are the slides for the webinar on custom Pregel algorithms in ArangoDB (recording: https://youtu.be/DWJ-nWUxsO8). They provide a brief introduction to the capabilities and use cases of Pregel.
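
To make the Pregel model concrete, here is a minimal single-process Python sketch of a vertex-centric, bulk synchronous computation: single-source shortest paths, a standard Pregel example. It is not ArangoDB's Pregel interface and not code from the webinar. The function `pregel_sssp` and the dict-based graph format are hypothetical, chosen only to show supersteps, message passing and vote-to-halt.

```python
import math
from collections import defaultdict


def pregel_sssp(edges, source, max_supersteps=100):
    """Hypothetical sketch: single-source shortest paths in Pregel style.

    edges: dict mapping a vertex to a list of (neighbor, weight) pairs.
    source: must be a vertex of the graph.
    Returns a dict mapping every vertex to its distance from `source`
    (math.inf for unreachable vertices).
    """
    # Collect all vertices, including those that appear only as edge targets.
    vertices = set(edges)
    for targets in edges.values():
        vertices.update(neighbor for neighbor, _ in targets)

    # Every vertex value starts at infinity; the source receives an initial 0.
    value = {v: math.inf for v in vertices}
    inbox = {source: [0.0]}

    for _superstep in range(max_supersteps):
        # No messages in flight: every vertex has voted to halt, so stop.
        if not inbox:
            break
        outbox = defaultdict(list)
        # Only vertices that received messages are active in this superstep.
        for vertex, messages in inbox.items():
            # Take the minimum incoming distance (what a min-combiner would do).
            candidate = min(messages)
            if candidate < value[vertex]:
                value[vertex] = candidate
                # Propagate the improved distance along each outgoing edge.
                for neighbor, weight in edges.get(vertex, []):
                    outbox[neighbor].append(candidate + weight)
        # Barrier: messages sent in this superstep are delivered in the next.
        inbox = outbox
    return value


graph = {
    "a": [("b", 1.0), ("c", 4.0)],
    "b": [("c", 2.0), ("d", 5.0)],
    "c": [("d", 1.0)],
}
dist = pregel_sssp(graph, "a")
print(dist["d"])  # 4.0, via a -> b -> c -> d
```

Each loop iteration is one superstep. A distributed engine would partition the vertices across workers and run the per-vertex step in parallel; this sketch runs it sequentially, which keeps the semantics but not the scalability.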

Recommended

Java logging

Jumping Bean: A conceptual model for understanding Java logging frameworks, presented at the South Africa Oracle Java Developer Conference, May 2012.

NoSQL Graph Databases - Why, When and Where

Eugene Hanikblum: NoSQL graph databases: why, when and where should we use them?

Graph DB - The new era of understanding data

Getting started with MariaDB with Docker

MariaDB plc: This document discusses using MariaDB and Docker together from development to production. It begins by outlining the benefits of containers and Docker for database deployments. Requirements for databases in containers like data redundancy, self-discovery, self-healing and application tier discovery are discussed. An overview of MariaDB and how it meets these requirements with Galera cluster and MaxScale is provided. The document then demonstrates how to develop and deploy a Python/Flask app with MariaDB from development to a Docker Swarm production cluster behind HAProxy, including scaling the web tier and implementing a hardened database tier with Galera cluster and MaxScale behind secrets. Considerations around storage, networking and upgrades are discussed.

Introducing Riak

Kevin Smith: This document introduces Riak and summarizes its key features. Riak is a flexible storage engine that uses a key-value store model and supports document storage via JSON. It has a REST API and supports map/reduce functions. Riak is highly distributed, fault-tolerant, and optimized for availability. It aims to balance consistency and availability according to the CAP theorem by allowing tunable consistency on a per-request basis.

How to Identify RDA (Resource Description and Access) Records / prepared by Muhammad Abdelhamid Muawwad

Muhammad Muawwad: How to identify Resource Description and Access (RDA) records

How to identify RDA records

Through a set of instructions and examples for distinguishing between legacy records, new records created according to the RDA standard, and hybrid records that include a mix of AACR2 rules and RDA instructions, and between

RDF, linked data and semantic web

Jose Emilio Labra Gayo: RDF, linked data and semantic web

Talk given at University of Malta, Master in Science in Artificial Intelligence, 11th December, 2017

Design and Implementation of the Security Graph Language

Asankhaya Sharma: Today software is built in fundamentally different ways from how it was a decade ago. It is increasingly common for applications to be assembled out of open-source components, resulting in the use of large amounts of third-party code. This third-party code is a means for vulnerabilities to make their way downstream into applications. Recent vulnerabilities such as Heartbleed, FREAK SSL/TLS, GHOST, and the Equifax data breach (due to a flaw in Apache Struts) were ultimately caused by third-party components. We argue that an automated way to audit the open-source ecosystem, catalog existing vulnerabilities, and discover new flaws is essential to using open-source safely. To this end, we describe the Security Graph Language (SGL), a domain-specific language for analysing graph-structured datasets of open-source code and cataloguing vulnerabilities. SGL allows users to express complex queries on relations between libraries and vulnerabilities in the style of a program analysis language. SGL queries double as an executable representation for vulnerabilities, allowing vulnerabilities to be automatically checked against a database and deduplicated using a canonical representation. We outline a novel optimisation for SGL queries based on regular path query containment, improving query performance up to 3 orders of magnitude. We also demonstrate the effectiveness of SGL in practice to find zero-day vulnerabilities by identifying sever

Dart the Better JavaScript

Jorg Janke: Dart is a new language for the web, enabling you to write JavaScript in a secure and manageable way. No need to worry about "JavaScript: The bad parts".

This presentation concentrates on the developer experience converting from the Java based GWT to Dart.

Building Your First Apache Apex Application

Apache Apex: This document provides an overview of building an Apache Apex application, including key concepts like DAGs, operators, and ports. It also includes an example "word count" application and demonstrates how to define the application and operators, and build Apache Apex from source code. The document outlines the sample application workflow and includes information on resources for learning more about Apache Apex.

Building your first aplication using Apache Apex

Yogi Devendra Vyavahare: This document provides an overview of building an Apache Apex application, including key concepts like DAGs, operators, and ports. It also includes an example "word count" application and demonstrates how to define the application and operators, and build Apache Apex from source code. The document outlines the sample application workflow and includes information on resources for learning more about Apache Apex.

GraphQL & DGraph with Go

James Tan: This document discusses GraphQL and DGraph with Go. It begins by introducing GraphQL and some popular GraphQL implementations in Go, such as graphql-go. It then discusses DGraph, describing it as a distributed, high-performance graph database written in Go. It provides examples of using the DGraph Go client to perform CRUD operations, query single and multiple objects, commit transactions, and more.

Hadoop and HBase experiences in perf log project

Mao Geng: This document discusses experiences using Hadoop and HBase in the Perf-Log project. It provides an overview of the Perf-Log data format and architecture, describes how Hadoop and HBase were configured, and gives examples of using MapReduce jobs and HBase APIs like Put and Scan to analyze log data. Key aspects covered include matching Hadoop and HBase versions, running MapReduce jobs, using column families in HBase, and filtering Scan results.

Oracle to Postgres Schema Migration Hustle

EDB: Whether you are migrating a database or an application from Oracle to Postgres, the first step is to analyze the database objects (DDLs) to find the incompatibilities between the two databases and to estimate the time and cost required for the migration. In schema migration, a good knowledge of both Oracle and Postgres helps you identify incompatibilities and choose the right tool for analysis and conversion. In this webinar, we will discuss schema incompatibility hurdles when migrating from Oracle to Postgres and how to overcome them.

What you will learn in this webinar:

- How to identify whether your Oracle schema is compatible with PostgreSQL

- Incompatibility hurdles and how to identify them with migration tools

- How to overcome incompatibility hurdles

- Available tools for conversion

- Post-migration activities: functional testing, performance analysis, data migration, application switchover

Big Data processing with Apache Spark

Lucian Neghina: Get started with the Apache Spark parallel computing framework.

This is a keynote from the series "by Developer for Developers"

Java 8

vilniusjug: This document summarizes key parts of Java 8 including lambda expressions, method references, default methods, streams API improvements, removal of PermGen space, and the new date/time API. It provides code examples and explanations of lambda syntax and functional interfaces. It also discusses advantages of the streams API like lazy evaluation and parallelization. Finally, it briefly outlines the motivation for removing PermGen and standardizing the date/time API in Java 8.

Dart the better Javascript 2015

Jorg Janke: Do you want to upgrade your GWT application or write a sizable web application? Dart is the efficient choice.

As a brief example, check out http://lightningdart.com

This presentation was updated in October 2015 for Silicon Valley Code Camp.

BUD17-302: LLVM Internals #2

Linaro: BUD17-302: LLVM Internals #2

Speaker: Renato Golin, Peter Smith, Diana Picus, Omair Javaid, Adhemerval Zanella

Track: Toolchain

★ Session Summary ★

Continuing from LAS16 and, if we have time, introducing GlobalISel, which we're working on.

---------------------------------------------------

★ Resources ★

Event Page: http://connect.linaro.org/resource/bud17/bud17-302/

Presentation:

Video:

---------------------------------------------------

★ Event Details ★

Linaro Connect Budapest 2017 (BUD17)

6-10 March 2017

Corinthia Hotel, Budapest,

Erzsébet krt. 43-49,

1073 Hungary

---------------------------------------------------

http://www.linaro.org

http://connect.linaro.org

---------------------------------------------------

Follow us on Social Media

https://www.facebook.com/LinaroOrg

https://twitter.com/linaroorg

https://www.youtube.com/user/linaroorg?sub_confirmation=1

https://www.linkedin.com/company/1026961

Spark Concepts - Spark SQL, Graphx, Streaming

Petr Zapletal: This document provides an overview of Apache Spark modules including Spark SQL, GraphX, and Spark Streaming. Spark SQL allows querying structured data using SQL, GraphX provides APIs for graph processing, and Spark Streaming enables scalable stream processing. The document discusses Resilient Distributed Datasets (RDDs), SchemaRDDs, querying data with SQLContext, GraphX property graphs and algorithms, StreamingContext, and input/output operations in Spark Streaming.

CS267_Graph_Lab

JaideepKatkar: GraphLab is a framework for parallel machine learning that represents data as a graph and uses shared tables. It allows users to define update, fold, and merge functions to modify vertex/edge states and aggregate data in shared tables. The GraphLab toolkit includes applications for topic modeling, graph analytics, clustering, collaborative filtering, and computer vision. Users can run GraphLab on Amazon EC2 by satisfying dependencies, compiling, and running examples like stochastic gradient descent for collaborative filtering on Netflix data.

AWS Big Data Demystified #2 | Athena, Spectrum, Emr, Hive

Omid Vahdaty: This document provides an overview of various AWS big data services including Athena, Redshift Spectrum, EMR, and Hive. It discusses how Athena allows users to run SQL queries directly on data stored in S3 using Presto. Redshift Spectrum enables querying data in S3 using standard SQL from Amazon Redshift. EMR is a managed Hadoop framework that can run Hive, Spark, and other big data applications. Hive provides a SQL-like interface to query data stored in various formats like Parquet and ORC on distributed storage systems. The document demonstrates features and provides best practices for working with these AWS big data services.

Apache Hive for modern DBAs

Luis Marques: Apache Hive is an open source data warehousing framework built on Hadoop. It allows users to query large datasets using SQL and handles parallelization behind the scenes. Hive supports various file formats like ORC, Parquet, and Avro. It uses a directed acyclic graph (DAG) execution engine like Tez or Spark to improve performance over traditional MapReduce. The metastore stores metadata about databases, tables, and partitions to allow data discovery and abstraction. Hive's cost-based optimizer and in-memory query processing features like LLAP improve performance for interactive queries on large datasets.

Meetup C++ A brief overview of c++17

Daniel Eriksson: Slides used during my presentation of "A brief overview of C++17" at Meetup C++ GBG, Sweden, 2017-04-05.

Design for Scalability in ADAM

fnothaft: ADAM is an open source, high performance, distributed platform for genomic analysis built on Apache Spark. It defines a Scala API and data schema using Avro and Parquet to store data in a columnar format, addressing the I/O bottleneck in genomics pipelines. ADAM implements common genomics algorithms as data or graph parallel computations and minimizes data movement by sending code to the data using Spark. It is designed to scale to processing whole human genomes across distributed file systems and cloud infrastructure.

Apache spark - Spark's distributed programming model

Martin Zapletal: Spark's distributed programming model uses resilient distributed datasets (RDDs) and a directed acyclic graph (DAG) approach. RDDs support transformations like map and filter, and actions like collect. Transformations are lazy and form the DAG, while actions execute the DAG. RDDs support caching, partitioning, and sharing state through broadcasts and accumulators. The programming model aims to optimize the DAG through operations like predicate pushdown and partition coalescing.

Java High Level Stream API

Apache Apex: Presented by Siyuan Hua, Apache Apex PMC Member & DataTorrent Engineer

Apache Apex provides a DAG construction API that gives developers full control over the logical plan. Some use cases don't require all of that flexibility, at least so it may appear initially. Also, a large part of the audience may be more familiar with an API that has a more functional programming flavor, such as the new Java 8 Stream interfaces and the Apache Flink and Spark Streaming APIs. Thus, to help Apex beginners get a simple first app running with a familiar API, we are now providing the Stream API on top of the existing DAG API. The Stream API is designed to be easy to use yet flexible to extend and compatible with the native Apex API. This means developers can construct their application in a way similar to Flink or Spark, but also have the power to fine-tune the DAG at will. Per our roadmap, the Stream API will closely follow the Apache Beam (aka Google Dataflow) model. In the future, you should be able to either easily run Beam applications with the Apex engine or express an existing application in a more declarative style.

Tech Talk - Overview of Dash framework for building dashboards

Appsilon Data Science: An introduction to Dash, Plotly's reactive framework for building dashboards in Python. The tech talk covers the basics and more advanced topics such as custom components and scaling.

Speaker: Damian Rodziewicz.

Resources:

1. Blogpost

https://appsilon.com/overview-of-dash-python-framework-from-plotly-for-building-dashboards/

2. Github

https://github.com/DamianRodziewicz/dash_example

app.py

https://github.com/DamianRodziewicz/dash_example longer_computations_app.py

3. Youtube:

https://youtu.be/NUXUmv-aeG4

Anatomy of spark catalyst

datamantra: This document provides an overview of Spark Catalyst including:

- Catalyst trees and expressions represent logical and physical query plans

- Expressions have datatypes and operate on Row objects

- Custom expressions can be defined

- Code generation improves expression evaluation performance by generating Java code via Janino compiler

- Key concepts like trees, expressions, datatypes, rows, code generation and Janino compiler are explained through examples

Hadoop ecosystem

Ran Silberman: Hadoop has become the most common system for storing big data.

With Hadoop, many supporting systems emerged to fill in the aspects that are missing in Hadoop itself.

Together they form a big ecosystem.

This presentation covers some of those systems.

Since one presentation cannot cover them all, I focused on the most famous and popular ones, and on the most interesting ones.

ATO 2022 - Machine Learning + Graph Databases for Better Recommendations (3)....

ArangoDB Database: This document summarizes techniques for combining machine learning and graph databases for better recommendations. It discusses collaborative filtering with AQL, content-based recommendations with TF-IDF and FAISS, and graph neural networks with PyTorch. The document also describes ArangoFlix, a demo project that combines these techniques in a movie recommendation system using ArangoDB as the backend graph database.

Machine Learning + Graph Databases for Better Recommendations V2 08/20/2022

ArangoDB Database: Note: you have to download the slides and open them in PowerPoint or Google Slides to make the links clickable.

Machine Learning + Graph Databases for Better Recommendations

Presented by Chris Woodward

More from ArangoDB Database

Machine Learning + Graph Databases for Better Recommendations V1 08/06/2022

ArangoDB Database: Note: you have to download the slides and open them in PowerPoint or Google Slides to make the links clickable.

Machine Learning + Graph Databases for Better Recommendations

Presented by Chris Woodward

ArangoDB 3.9 - Further Powering Graphs at Scale

ArangoDB Database: Join our CTO Jörg Schad for an overview of the new features and updates in ArangoDB's latest release.

GraphSage vs Pinsage #InsideArangoDB

GraphSage vs Pinsage #InsideArangoDBArangoDB Database The ArangoML Group had a detailed discussion on the topic "GraphSage Vs PinSage" where they shared their thoughts on the difference between the working principles of two popular Graph ML algorithms. The following slidedeck is an accumulation of their thoughts about the comparison between the two algorithms.

Webinar: ArangoDB 3.8 Preview - Analytics at Scale

Webinar: ArangoDB 3.8 Preview - Analytics at Scale ArangoDB Database The ArangoDB community and team are proud to preview the next version of ArangoDB, an open-source, highly scalable graph database with multi-model capabilities. Join our CTO, Jörg Schad, Ph.D. and Developer Relation Engineer Chris Woodward in this webinar to learn more about ArangoDB 3.8 and the roadmap for upcoming releases.

Graph Analytics with ArangoDB

Graph Analytics with ArangoDBArangoDB Database This document discusses using graphs and graph databases for machine learning. It provides an overview of graph analytics algorithms that can be used to solve problems with graph data, including recommendations, fraud detection, and network analysis. It also discusses using graph embeddings and graph neural networks for tasks like node classification and link prediction. Finally, it discusses how graphs can be used for machine learning infrastructure and metadata tasks like data provenance, audit trails, and privacy.

Getting Started with ArangoDB Oasis

Getting Started with ArangoDB OasisArangoDB Database These are the slides from the Getting Started with ArangoDB Oasis webinar: https://www.arangodb.com/events/getting-started-with-arangodb-oasis/

Get your own Oasis with a free 14-day trial (no credit card required) at https://cloud.arangodb.com/home.

Hacktoberfest 2020 - Intro to Knowledge Graphs

Hacktoberfest 2020 - Intro to Knowledge GraphsArangoDB Database Hacktoberfest 2020 'Intro to Knowledge Graph' with Chris Woodward of ArangoDB and reKnowledge. Accompanying video is available here: https://youtu.be/ZZt6xBmltz4

A Graph Database That Scales - ArangoDB 3.7 Release Webinar

A Graph Database That Scales - ArangoDB 3.7 Release WebinarArangoDB Database örg Schad (Head of Engineering and ML) and Chris Woodward (Developer Relations Engineer) introduce the new capabilities to work with graph in a distributed setting. In addition explain and showcase the new fuzzy search within ArangoDB's search engine as well as JSON schema validation.

Get started with ArangoDB: https://www.arangodb.com/arangodb-tra...

Explore ArangoDB Cloud for free with 1-click demos: https://cloud.arangodb.com/home

ArangoDB is a native multi-model database written in C++ supporting graph, document and key/value needs with one engine and one query language. Fulltext search and ranking is supported via ArangoSearch the fully integrated C++ based search engine in ArangoDB.

gVisor, Kata Containers, Firecracker, Docker: Who is Who in the Container Space?

gVisor, Kata Containers, Firecracker, Docker: Who is Who in the Container Space?ArangoDB Database View the video of this webinar here: https://www.arangodb.com/arangodb-events/gvisor-kata-containers-firecracker-docker/

Containers* have revolutionized the IT landscape and for a long time. Docker seemed to be the default whenever people were talking about containerization technologies**. But traditional container technologies might not be suitable if strong isolation guarantees are required. So recently new technologies such as gVisor, Kata Container, or firecracker have been introduced to close the gap between the strong isolation of virtual machines and the small resource footprint of containers.

In this talk, we will provide an overview of the different containerization technologies, discuss their tradeoffs, and provide guidance for different use cases.

* We will define the term container in more detailed during the talk

** and yes we will also cover some of the pre-docker container space!

ArangoML Pipeline Cloud - Managed Machine Learning Metadata

ArangoML Pipeline Cloud - Managed Machine Learning MetadataArangoDB Database We all know good training data is crucial for data scientists to build quality machine learning models. But when productionizing Machine Learning, Metadata is equally important. Consider for example:

- Provenance of model allowing for reproducible builds

- Context to comply with GDPR, CCPA requirements

- Identifying data shift in your production data

This is the reason we built ArangoML Pipeline, a flexible Metadata store which can be used with your existing ML Pipeline.

Today we are happy to announce a release of ArangoML Pipeline Cloud. Now you can start using ArangoML Pipeline without having to even start a separate docker container.

In this webinar, we will show how to leverage ArangoML Pipeline Cloud with your Machine Learning Pipeline by using an example notebook from the TensorFlow tutorial.

Find the video here: https://www.arangodb.com/arangodb-events/arangoml-pipeline-cloud/

ArangoDB 3.7 Roadmap: Performance at Scale

ArangoDB 3.7 Roadmap: Performance at ScaleArangoDB Database Find the recording of this webinar here: https://www.arangodb.com/arangodb-events/3-7-roadmap-performance-at-scale/

After the release of ArangoDB 3.6 we are starting to work on the next version with even more exciting features. As an open-source project we would love to hear your ideas and discuss the roadmap with our community.

Would you like to learn more about Satellite Graphs, Schema Validation, a number of performance and security improvements?

Than join Jörg Schad, Head of Engineering and Machine Learning at ArangoDB, who will share the latest plans for the upcoming ArangoDB 3.7 release as well as the long term roadmap.

Webinar: What to expect from ArangoDB Oasis

Webinar: What to expect from ArangoDB OasisArangoDB Database The long-awaited Managed Service for ArangoDB is finally here! Users have a fully managed document, graph, and key/value store, plus a search engine, in one place. As we thought of such a powerful service — something that gives you room to breathe, relax, and having someone else taking care of everything —, we called it Oasis.

In this live webinar, Ewout Prangsma, Architect & Teamlead of ArangoDB Oasis, walks you through all the main capabilities of the new service, including high availability, elastic scalability, enterprise-grade security, and also demo the different deployment modes you have at your fingertips.

Before the Q&A part, Ewout also shares what you will be capable of in the future.

ArangoDB 3.5 Feature Overview Webinar - Sept 12, 2019

ArangoDB 3.5 Feature Overview Webinar - Sept 12, 2019ArangoDB Database The new ArangoDB 3.5 release is here and includes a number of minor and major new features. For example, the ability to perform distributed JOIN operations with SmartJoins, new text search features in ArangoSearch, new consistent backup mechanism, and extended graph database features including k-shortest path queries and the new PRUNE keyword for more efficient queries. Jörg Schad, our Head of Engineering and Machine Learning, will discuss these new features and provide a hands-on demo on how to leverage them for your use case.

3.5 webinar

3.5 webinar ArangoDB Database This document summarizes new features in ArangoDB version 3.5 including distributed joins, streaming transactions, expanded graph and search capabilities, hot backups, data masking, and time-to-live indexes. It also previews upcoming features like fuzzy search, autocomplete, and faceted search in ArangoSearch as well as k-shortest paths and pruning in graphs.

Webinar: How native multi model works in ArangoDB

Webinar: How native multi model works in ArangoDBArangoDB Database These are the slides from the webinar, where Chris & Jan walked through the basic concepts, key features and query options you have within ArangoDB as well as discuss scalability considerations for different data models. Chris is the hands-on guy and will showcase a variety of query options you have with a native multi-model database like ArangoDB

An introduction to multi-model databases

An introduction to multi-model databasesArangoDB Database In these slides, Jan Steemann, core member of the ArangoDB project, introduced to the idea of native multi-model databases and how this approach can provide much more flexibility for developers, software architects & data scientists.

Running complex data queries in a distributed system

Running complex data queries in a distributed systemArangoDB Database With the always-growing amount of data, it is getting increasingly hard to store and get it back efficiently. While the first versions of distributed databases have put all the burden of sharding on the application code, there are now some smarter solutions that handle most of the data distribution and resilience tasks inside the database.

This poses some interesting questions, e.g.

- how are other than by-primary-key queries actually organized and executed in a distributed system, so that they can run most efficiently?

- how do the contemporary distributed databases actually achieve transactional semantics for non-trivial operations that affect different shards/servers?

This talk will give an overview of these challenges and the available solutions that some open source distributed databases have picked to solve them.

Guacamole Fiesta: What do avocados and databases have in common?

Guacamole Fiesta: What do avocados and databases have in common?ArangoDB Database First, our CTO, Frank Celler, does a quick overview of the latest feature developments and what is new with ArangoDB.

Then, Senior Graph Specialist, Michael Hackstein talks about multi-model database movement, diving deeper into main advantages and technological benefits. He introduces three data-models of ArangoDB (Documents, Graphs and Key-Values) and the reasons behind the technology. We have a look at the ArangoDB Query language (AQL) with hands-on examples. Compare AQL to SQL, see where the differences are and what makes AQL better comprehensible for developers. Finally, we touch the Foxx Microservice framework which allows to easily extend ArangoDB and include it in your microservices landscape.


Custom Pregel Algorithms in ArangoDB

- 1. Feature Preview: Custom Pregel: Complex Graph Algorithms Made Easy (@arangodb, @joerg_schad, @hkernbach)

- 2. tl;dr
    - "Many practical computing problems concern large graphs."
    - ArangoDB is a "Beyond Graph Database" supporting multiple data models around a scalable graph foundation.
    - Pregel is a framework for distributed graph processing.
        - ArangoDB supports predefined Pregel algorithms, e.g. PageRank, Single-Source Shortest Path and Connected Components.
    - Programmable Pregel Algorithms (PPA) allow adding and modifying algorithms on the fly.
    - Disclaimer: This is an experimental feature; the language specification (front end) in particular is still under development!

- 3. Jörg Schad, PhD: Head of Engineering and ML @ArangoDB
    - Suki.ai
    - Mesosphere
    - Architect @SAP HANA
    - PhD in Distributed DB Systems
    - Twitter: @joerg_schad

- 4. Heiko Kernbach: Core Engineer (Graphs Team) @ArangoDB
    - Graph
    - Custom Pregel
    - Geo / UI
    - Twitter: @hkernbach
    - Slack: hkernbach.ArangoDB

- 5.
    - Open source
    - Beyond Graph Database: stores key/value pairs and documents, connected by scalable graph processing
    - Scalable: distributed graphs
    - AQL: SQL-like multi-model query language
    - ACID transactions, including multi-collection transactions

- 7. Pregel: Max Value (source: https://blog.acolyer.org/2015/05/26/pregel-a-system-for-large-scale-graph-processing/)
    - While not converged (each iteration is one superstep):
        - Communicate: send own value to all neighbours
        - Compute: own value = maximum of all received messages and the own value
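
The Max Value loop above can be simulated in a single process to see how supersteps converge. This is a minimal sketch, not ArangoDB code: the adjacency-list input format and the function name `maxValuePregel` are assumptions for illustration, and every vertex re-sends its value in every superstep instead of voting to halt.

```js
// Single-process simulation of the "Max Value" Pregel example.
// Assumption: the graph is an adjacency list; for an undirected graph,
// list each edge in both directions.
function maxValuePregel(values, neighbours) {
  let current = { ...values };
  let supersteps = 0;
  let changed = true;
  while (changed) {
    // Communicate: every vertex sends its own value to each neighbour.
    const inbox = {};
    for (const v of Object.keys(current)) inbox[v] = [];
    for (const [v, targets] of Object.entries(neighbours)) {
      for (const t of targets) inbox[t].push(current[v]);
    }
    // Compute: own value = max of all received messages and the own value.
    const next = {};
    changed = false;
    for (const v of Object.keys(current)) {
      next[v] = Math.max(current[v], ...inbox[v]);
      if (next[v] !== current[v]) changed = true;
    }
    current = next;
    supersteps += 1; // one Communicate + Compute round is one superstep
  }
  return { values: current, supersteps };
}

const result = maxValuePregel(
  { a: 3, b: 6, c: 2 },
  { a: ["b"], b: ["a", "c"], c: ["b"] }
);
console.log(result); // { values: { a: 6, b: 6, c: 6 }, supersteps: 2 }
```

The second superstep changes nothing and only confirms convergence; a real Pregel engine avoids that cost by letting unchanged vertices stop sending messages.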

- 8. ArangoDB and Pregel: Status Quo
    - https://www.arangodb.com/docs/stable/graphs-pregel.html
    - https://www.arangodb.com/pregel-community-detection/
    - Available algorithms:
        - PageRank
        - Seeded PageRank
        - Single-Source Shortest Path
        - Connected Components (Component, WeaklyConnected, StronglyConnected)
        - Hyperlink-Induced Topic Search (HITS)
        - Vertex Centrality
        - Effective Closeness
        - LineRank
        - Label Propagation
        - Speaker-Listener Label Propagation
    - Pregel support since 2014
    - Predefined algorithms, which can be extended via C++
    - The same platform is used for PPA
    - Challenge: adding and modifying algorithms

    ```js
    const pregel = require("@arangodb/pregel");
    pregel.start("pagerank", "graphname", {maxGSS: 100, threshold: 0.00000001, resultField: "rank"});
    ```
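
To show what the `maxGSS` and `threshold` options in the `pregel.start("pagerank", ...)` call control, here is textbook PageRank written as Pregel-style supersteps. It is a hedged sketch, not ArangoDB's C++ implementation: the damping factor of 0.85, the requirement that every vertex has an outgoing edge, and the use of the largest per-vertex change as the convergence test are all assumptions.

```js
// Textbook PageRank expressed as Pregel supersteps (not ArangoDB's C++
// implementation). Assumptions: damping factor 0.85, every vertex has at
// least one outgoing edge, and `threshold` is compared with the largest
// per-vertex rank change in a superstep.
function pageRankPregel(outEdges, { maxGSS = 100, threshold = 1e-8, damping = 0.85 } = {}) {
  const vertices = Object.keys(outEdges);
  const n = vertices.length;
  let rank = Object.fromEntries(vertices.map((v) => [v, 1 / n]));
  let gss = 0;
  while (gss < maxGSS) {
    // Send: each vertex splits its rank evenly across its outgoing edges.
    const received = Object.fromEntries(vertices.map((v) => [v, 0]));
    for (const v of vertices) {
      const share = rank[v] / outEdges[v].length;
      for (const target of outEdges[v]) received[target] += share;
    }
    // Compute: combine incoming messages into the new rank.
    const next = {};
    let maxDelta = 0;
    for (const v of vertices) {
      next[v] = (1 - damping) / n + damping * received[v];
      maxDelta = Math.max(maxDelta, Math.abs(next[v] - rank[v]));
    }
    rank = next;
    gss += 1;
    if (maxDelta < threshold) break; // converged before reaching maxGSS
  }
  return { rank, gss };
}

const { rank, gss } = pageRankPregel(
  { a: ["b"], b: ["a"], c: ["a"] },
  { maxGSS: 100, threshold: 0.00000001 }
);
console.log(gss, rank);
```

`maxGSS` is a hard cap on supersteps, while `threshold` lets the run stop earlier once ranks barely move.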

- 9. Programmable Pregel Algorithms (PPA)

    ```js
    const pregel = require("@arangodb/pregel");
    // graphName: name of an existing named graph
    // "<custom-algorithm>": placeholder for the algorithm definition (see slides 10 and 11)
    const pregelID = pregel.start("air", graphName, "<custom-algorithm>");
    const status = pregel.status(pregelID);
    ```

    - Add/modify algorithms on the fly
        - without C++ code
        - without restarting the database
    - As with Pregel in general, efficiency depends on sharding
        - SmartGraphs
        - Required: co-location of vertices and edges
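
Why sharding matters can be illustrated with a toy model that counts how many messages sent along edges would cross shard boundaries. This is a sketch under loudly stated assumptions, not ArangoDB's sharding logic: the hash function, the rule that an edge lives on its `_from` vertex's shard, and the `"<smartValue>:<key>"` ID format for the SmartGraph-like placement are all chosen for illustration.

```js
// Toy model of sharding and message locality (not ArangoDB's actual
// sharding code). Assumptions: a simple deterministic string hash picks the
// shard, each edge lives on the shard of its _from vertex, and SmartGraph-like
// vertex IDs have the form "<smartValue>:<key>".
function shardOf(value, numShards) {
  let h = 0;
  for (let i = 0; i < value.length; i++) {
    h = (h * 31 + value.charCodeAt(i)) >>> 0; // keep h an unsigned 32-bit int
  }
  return h % numShards;
}

// Placement strategies: hash the whole ID, or only the part before ":".
const byId = (id, n) => shardOf(id, n);
const bySmartValue = (id, n) => shardOf(id.split(":")[0], n);

// A message sent along an edge stays on one DB-Server only if the target
// vertex lives on the same shard as the edge (i.e. as its source vertex).
function countRemoteMessages(edges, numShards, placeVertex) {
  let remote = 0;
  for (const { _from, _to } of edges) {
    if (placeVertex(_from, numShards) !== placeVertex(_to, numShards)) remote += 1;
  }
  return remote;
}

const edges = [
  { _from: "eu:alice", _to: "eu:bob" },
  { _from: "eu:bob", _to: "eu:carol" },
  { _from: "us:dave", _to: "us:erin" },
];
console.log("hash by ID:", countRemoteMessages(edges, 4, byId));
console.log("hash by smart value:", countRemoteMessages(edges, 4, bySmartValue)); // 0
```

When the placement groups vertices that talk to each other, every message in this example stays local; hashing whole IDs spreads them and typically turns some messages into network traffic.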

- 10. Custom Algorithm

    ```json
    {
      "resultField": "<string>",
      "maxGSS": "<number>",
      "dataAccess": {
        "writeVertex": "<program>",
        "readVertex": "<array>",
        "readEdge": "<array>"
      },
      "vertexAccumulators": "<object>",
      "globalAccumulators": "<object>",
      "customAccumulators": "<object>",
      "phases": "<array>"
    }
    ```

    Accumulators consume and process the messages sent to them during the computational phase of a superstep (initProgram, updateProgram, onPreStep, onPostStep). After a superstep is done, all messages are processed.
    - max: stores the maximum of all messages received.
    - min: stores the minimum of all messages received.
    - sum: sums up all messages received.
    - and: computes the logical and of all messages received.
    - or: computes the logical or of all messages received.
    - store: holds the last received value (non-deterministic).
    - list: stores all received values in a list (order is non-deterministic).
    - custom
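
The built-in accumulator types listed above are essentially folds over the messages of a superstep. The following sketch models them that way; it is an illustration only, and passing the starting value explicitly (rather than declaring it in `vertexAccumulators`) is an assumption of this example.

```js
// Sketch of the built-in accumulator semantics from the slide: each type
// folds the messages received during a superstep into one value.
// Assumption: the caller supplies the accumulator's starting value.
const accumulatorTypes = {
  max: (acc, msg) => Math.max(acc, msg),
  min: (acc, msg) => Math.min(acc, msg),
  sum: (acc, msg) => acc + msg,
  and: (acc, msg) => acc && msg,
  or: (acc, msg) => acc || msg,
  store: (acc, msg) => msg, // keeps the last value; arrival order is non-deterministic in a real run
  list: (acc, msg) => [...acc, msg], // keeps all values; order is non-deterministic in a real run
};

function accumulate(type, initial, messages) {
  const step = accumulatorTypes[type];
  if (step === undefined) throw new Error(`unknown accumulator type: ${type}`);
  return messages.reduce(step, initial);
}

const messages = [3, 9, 4];
console.log(accumulate("max", -Infinity, messages)); // 9
console.log(accumulate("sum", 0, messages)); // 16
console.log(accumulate("list", [], messages)); // [ 3, 9, 4 ]
```

Because max, min, sum, and and or are order-independent, they give the same result however messages arrive; store and list do not, which is why the slide marks them as non-deterministic.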

- 11. Custom Algorithm (same schema as on slide 10)
    - resultField (string, optional): Name of the document attribute to store the result in. Each vertex's computation result is written to this attribute.
    - maxGSS (number, required): The maximum number of global supersteps. Once this number is reached, the Pregel execution stops.
    - dataAccess (object, optional): Allows defining writeVertex, readVertex and readEdge.
        - writeVertex: A program that writes the results into vertices. If writeVertex is used, resultField is ignored.
        - readVertex: An array of strings and/or arrays of strings (paths):
            - string: a single top-level attribute
            - array of strings: a nested path
        - readEdge: An array of strings and/or arrays of strings (paths):
            - string: a single top-level attribute (not nested)
            - array of strings: a nested path
    - vertexAccumulators (object, optional): Definition of all vertex accumulators used.
    - globalAccumulators (object, optional): Definition of all global accumulators used. Global accumulators can be accessed at a shared, global level.
    - customAccumulators (object, optional): Definition of all custom accumulators used.
    - phases (array): An array of one or more phase definitions.
    - debug (optional): See Debugging.
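
The readVertex/readEdge rule (a string is a top-level attribute, an array of strings is a nested path) can be sketched as a small projection function. This is a hypothetical helper for illustration, not PPA's loader; keying the result by the dotted path is an assumption of this example.

```js
// Sketch of how a readVertex/readEdge list selects attributes: a string names
// a top-level attribute, an array of strings names a nested path. Keying the
// result by the dotted path is an assumption made for this example.
function project(doc, spec) {
  const result = {};
  for (const entry of spec) {
    const path = typeof entry === "string" ? [entry] : entry;
    let value = doc;
    for (const key of path) {
      // Yield undefined once the path leaves the object structure.
      value = value !== null && typeof value === "object" ? value[key] : undefined;
    }
    result[path.join(".")] = value;
  }
  return result;
}

const vertex = { _key: "v1", name: "alice", address: { city: "Cologne", zip: "50667" }, age: 42 };
console.log(project(vertex, ["name", ["address", "city"]])); // { name: 'alice', 'address.city': 'Cologne' }
```

Attributes that are not listed (here `_key`, `age` and `address.zip`) are simply not read, which is the point of restricting data access: less data has to be loaded into the Pregel workers.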

- 12. Phases: Execution Order
    - Step 1: Initialization
        1. onPreStep (Conductor, executed on Coordinator instances)
        2. initProgram (Worker, executed on DB-Server instances)
        3. onPostStep (Conductor)
    - Steps 2 to n: Computation
        1. onPreStep (Conductor)
        2. updateProgram (Worker)
        3. onPostStep (Conductor)
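
The call order above can be written out as a trace for a single phase. This sketch only records which program runs where in each step; treating maxGSS as including the initialization step and ignoring any other termination condition are assumptions of the example.

```js
// Trace of the call order described on the slide, for a single phase.
// Assumptions: maxGSS counts the initialization step, and the sketch
// ignores any termination condition other than reaching maxGSS.
function executionTrace(maxGSS) {
  const trace = [];
  for (let step = 1; step <= maxGSS; step++) {
    // Step 1 initializes vertices; every later step is a computation step.
    const workerProgram = step === 1 ? "initProgram" : "updateProgram";
    trace.push(`step ${step}: onPreStep (Conductor, Coordinator)`);
    trace.push(`step ${step}: ${workerProgram} (Worker, DB-Servers)`);
    trace.push(`step ${step}: onPostStep (Conductor, Coordinator)`);
  }
  return trace;
}

console.log(executionTrace(2).join("\n"));
```

The Conductor hooks bracket each worker step, which gives them a natural place to inspect or reset global state between supersteps.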

- 13. Program: Arango Intermediate Representation (AIR)

- 14. Program: Arango Intermediate Representation (AIR)

    A Lisp-like intermediate representation, expressed in JSON and supporting JSON's data types.

    Specification:
    - Language Primitives
        - Basic Algebraic Operators
        - Logical operators
        - Comparison operators
        - Lists
        - Sort
        - Dicts
        - Lambdas
        - Reduce
        - Utilities
        - Functional
        - Variables
        - Debug operators
    - Math Library
    - Special Forms
        - let statement
        - seq statement
        - if statement
        - match statement
        - for-each statement
        - quote and quote-splice statements
        - quasi-quote, unquote and unquote-splice statements
        - cons statement
        - `and` and `or` statements
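
To make "Lisp-like, represented in JSON" concrete, here is a toy evaluator in which a program is a JSON array whose first element names the operation. Important: the operators and their semantics are invented for this sketch and are not the AIR primitives; in particular, bare strings are treated as variable names, so this toy has no string literals.

```js
// Toy evaluator for a Lisp-like language encoded as JSON. The operators and
// semantics are invented for this example and are NOT the AIR primitives.
// Bare strings are variable names, so this toy has no string literals.
function evaluate(expr, env = {}) {
  if (typeof expr === "number" || typeof expr === "boolean") return expr;
  if (typeof expr === "string") {
    if (!Object.prototype.hasOwnProperty.call(env, expr)) {
      throw new Error(`unbound variable: ${expr}`);
    }
    return env[expr];
  }
  if (!Array.isArray(expr) || expr.length === 0) {
    throw new Error(`cannot evaluate: ${JSON.stringify(expr)}`);
  }
  const [op, ...args] = expr;
  switch (op) {
    case "+":
      return args.reduce((acc, a) => acc + evaluate(a, env), 0);
    case "*":
      return args.reduce((acc, a) => acc * evaluate(a, env), 1);
    case "<":
      return evaluate(args[0], env) < evaluate(args[1], env);
    case "if": // ["if", condition, thenExpr, elseExpr]
      return evaluate(args[0], env) ? evaluate(args[1], env) : evaluate(args[2], env);
    case "let": { // ["let", [[name, valueExpr], ...], body]
      const scope = { ...env };
      for (const [name, valueExpr] of args[0]) scope[name] = evaluate(valueExpr, env);
      return evaluate(args[1], scope);
    }
    case "seq": { // evaluate in order, return the last value
      let last = null;
      for (const a of args) last = evaluate(a, env);
      return last;
    }
    default:
      throw new Error(`unknown operator: ${op}`);
  }
}

const program = ["let", [["x", 2], ["y", 3]], ["if", ["<", "x", "y"], ["+", "x", ["*", "y", 4]], 0]];
console.log(evaluate(program)); // 14
```

Encoding programs as plain JSON means they can be stored, sent to every DB-Server and interpreted there without compiling C++ or restarting the database, which is what makes on-the-fly algorithms possible.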

- 15. Program: Arango Intermediate Representation (AIR)

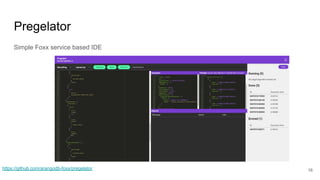

- 16. Pregelator: a simple Foxx-service-based IDE (https://github.com/arangodb-foxx/pregelator)

- 18. PPA: What is next?
    - Gather feedback
        - in particular on use cases
        - missing functions and functionality
    - User-friendly front-end language
    - Improve scale/performance of the underlying Pregel platform
    - Algorithm library
    - Blog post (including a Jupyter example)
    - Roadmap:
        - ArangoDB 3.8 (end of year): experimental feature, initial library
        - ArangoDB 3.9 (Q1 21): draft for the front end, extended library, platform improvements
        - ArangoDB 4.0 (mid 21): GA

- 19. Pregel vs AQL: When (not) to use Pregel
    - Can the algorithm be expressed efficiently in Pregel?
        - Counterexample: topological sort
    - Is the graph large enough to be worth loading it into Pregel?
    - AQL: all models (graph, document, key-value, search, …); online queries
    - Pregel: iterative graph processing; large graphs, multiple iterations

- 20. How can I start?
    - Docker image: arangodb/enterprise-preview:3.8.0-milestone.3
    - Check existing algorithms
    - Preview documentation
    - Give feedback: https://slack.arangodb.com/ (channel: custom-pregel)

- 21. Thanks for listening! Reach out with feedback/questions!
    - @arangodb
    - https://www.arangodb.com/
    - docker pull arangodb
    - Test-drive Oasis free for 14 days