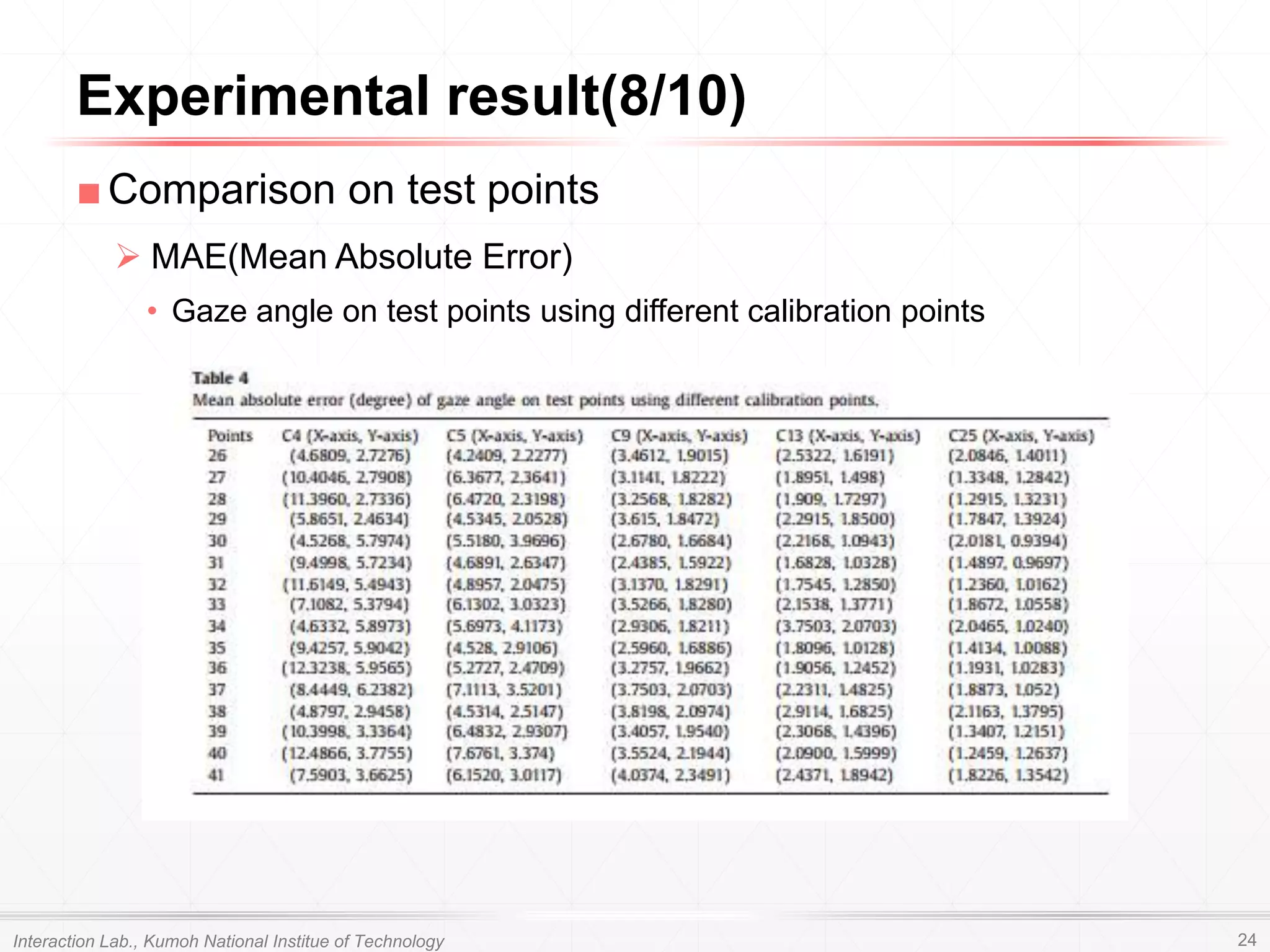

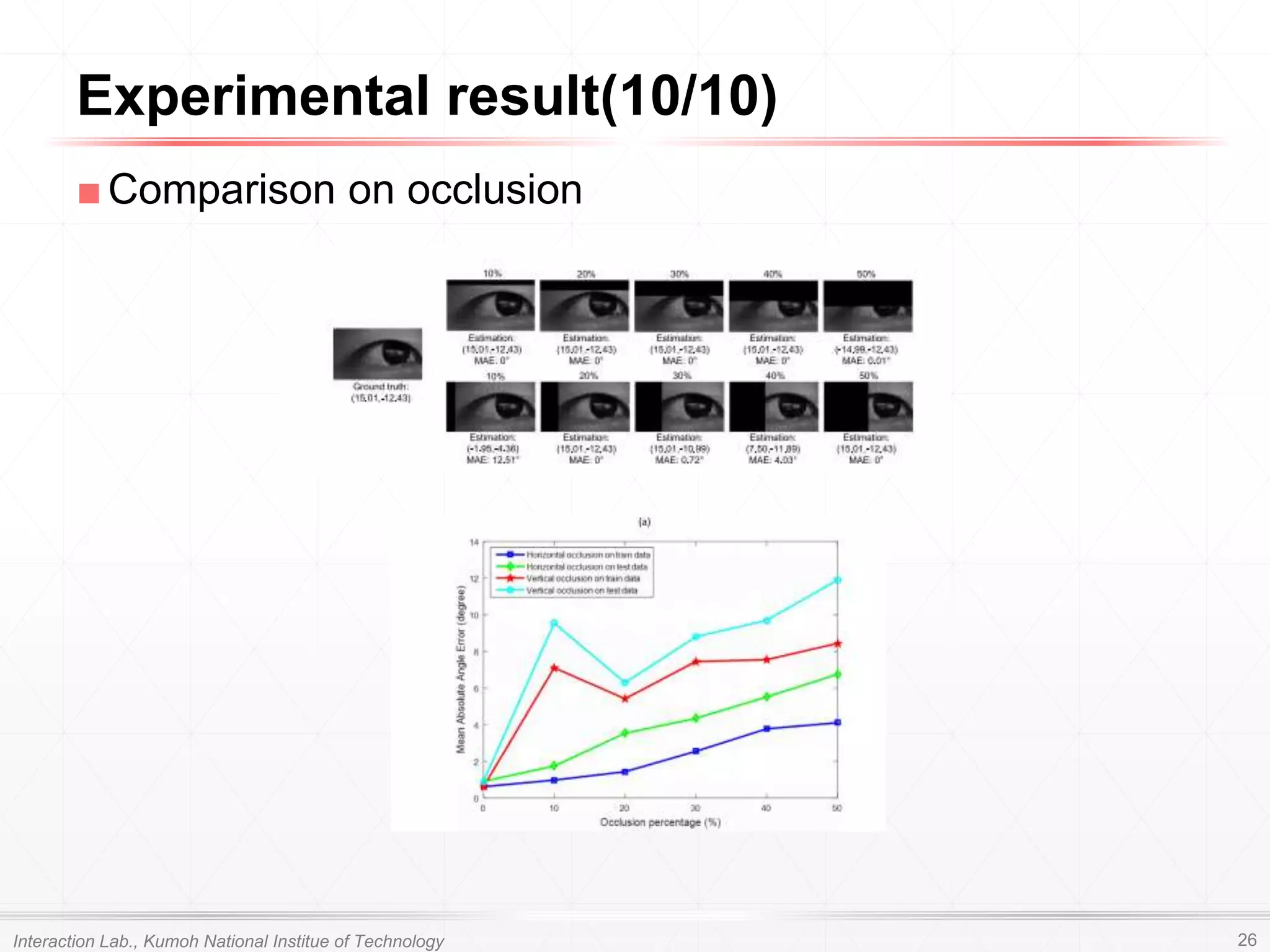

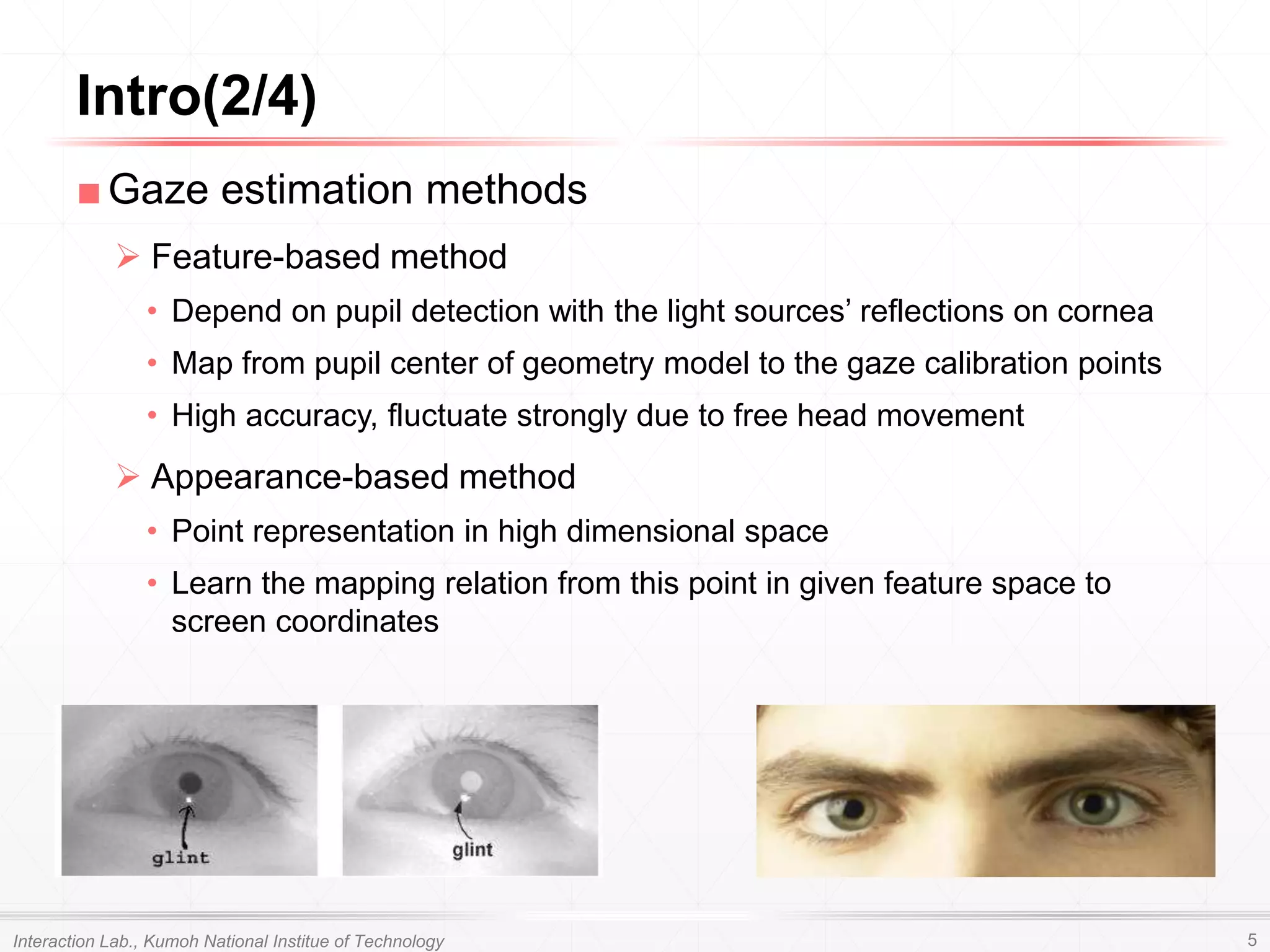

This document summarizes a research paper on appearance-based gaze estimation that combines deep feature extraction with random forest regression. The method captures eye images with a single webcam under natural light, extracts deep features from those images with a convolutional neural network (CNN), and maps the features to gaze coordinates with random forest regression. The reported experiments show that the deep-feature approach is more accurate than the other methods it was compared against, and that it remains effective under different lighting conditions and with free head movement. The approach could potentially be applied to real-time driver gaze tracking.
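The pipeline described above (CNN features, then random forest regression to gaze coordinates) can be sketched as follows. This is a toy illustration, not the paper's implementation: the synthetic eye images, the random-projection-plus-ReLU stand-in for a pretrained CNN (the hypothetical `extract_deep_features`), and all sizes are assumptions. Only the overall structure, a random forest regressor fitted on feature vectors with a two-dimensional (x, y) gaze target, follows the description. It assumes NumPy and scikit-learn are installed.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

H, W = 16, 32  # toy eye-patch size (assumption)

def synthetic_eye_images(gaze: np.ndarray) -> np.ndarray:
    """Toy data: a dark 'pupil' blob whose position shifts with the gaze target."""
    ys, xs = np.mgrid[0:H, 0:W]
    images = np.ones((len(gaze), H, W))
    for k, (gx, gy) in enumerate(gaze):
        cx = W / 2 + gx * W / 4
        cy = H / 2 + gy * H / 4
        images[k] -= np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / 8.0)
    return images

def extract_deep_features(images: np.ndarray, projection: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a pretrained CNN: flatten, linear projection, ReLU."""
    flat = images.reshape(len(images), -1)
    return np.maximum(flat @ projection, 0.0)

rng = np.random.default_rng(0)
projection = rng.normal(scale=1 / np.sqrt(H * W), size=(H * W, 64))

# Normalized gaze targets in [-1, 1] x [-1, 1] (assumed screen coordinates).
gaze_train = rng.uniform(-1, 1, size=(400, 2))
gaze_test = rng.uniform(-1, 1, size=(100, 2))
X_train = extract_deep_features(synthetic_eye_images(gaze_train), projection)
X_test = extract_deep_features(synthetic_eye_images(gaze_test), projection)

# One forest predicts both coordinates (multi-output regression).
forest = RandomForestRegressor(n_estimators=100, random_state=0)
forest.fit(X_train, gaze_train)
pred = forest.predict(X_test)

# Mean Euclidean gaze error versus a baseline that always predicts the training mean.
rf_error = np.linalg.norm(pred - gaze_test, axis=1).mean()
baseline_error = np.linalg.norm(gaze_train.mean(axis=0) - gaze_test, axis=1).mean()
print(f"mean gaze error: forest {rf_error:.3f}, mean-predictor baseline {baseline_error:.3f}")
```

Because `RandomForestRegressor` accepts a two-column target directly, a single forest predicts both gaze coordinates; training one forest per axis would be an equally valid design choice.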

![Experimental result (2/10): dataset and experimental setup](https://image.slidesharecdn.com/appearancebasedgazeestimationusingdeepfeaturesandrandomforestregression-210528115146/75/Appearance-based-gaze-estimation-using-deep-features-and-random-forest-regression-18-2048.jpg)

Experimental result (2/10)

■ Dataset and experimental setup

Distribution of head pose angles under free head movement

• Estimated with POSIT (Pose from Orthography and Scaling with Iterations)

• Pitch: [0°, 15°], Yaw: [-10°, 10°]

Mean grayscale intensity of images

• Darker lighting conditions have a lower mean grayscale intensity

Interaction Lab., Kumoh National Institute of Technology
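The slide states that head pose was estimated with POSIT. The sketch below implements the classic POSIT iteration (DeMenthon and Davis) in NumPy on synthetic data and converts the recovered rotation into pitch and yaw so it can be checked against the reported ranges. The face landmark coordinates, the focal length, the Z-Y-X (roll, yaw, pitch) Euler convention, and the helper names are all hypothetical; the paper's landmark set and angle convention are not given here.

```python
import numpy as np

def rotation_from_angles(pitch_deg, yaw_deg, roll_deg):
    """R = Rz(roll) @ Ry(yaw) @ Rx(pitch) (assumed Z-Y-X convention)."""
    p, y, r = np.radians([pitch_deg, yaw_deg, roll_deg])
    rx = np.array([[1, 0, 0], [0, np.cos(p), -np.sin(p)], [0, np.sin(p), np.cos(p)]])
    ry = np.array([[np.cos(y), 0, np.sin(y)], [0, 1, 0], [-np.sin(y), 0, np.cos(y)]])
    rz = np.array([[np.cos(r), -np.sin(r), 0], [np.sin(r), np.cos(r), 0], [0, 0, 1]])
    return rz @ ry @ rx

def pitch_yaw_from_rotation(R):
    """Invert the Z-Y-X convention above; returns (pitch, yaw) in degrees."""
    pitch = np.arctan2(R[2, 1], R[2, 2])
    yaw = np.arctan2(-R[2, 0], np.hypot(R[2, 1], R[2, 2]))
    return float(np.degrees(pitch)), float(np.degrees(yaw))

def posit(model_pts, image_pts, focal, iters=100, tol=1e-10):
    """POSIT: model_pts (n, 3) with model_pts[0] as reference point M0;
    image_pts (n, 2) measured from the principal point. Returns (R, T)."""
    A = model_pts[1:] - model_pts[0]        # vectors M0Mi, shape (n-1, 3)
    B = np.linalg.pinv(A)                   # least-squares solver, shape (3, n-1)
    x, y = image_pts[:, 0], image_pts[:, 1]
    eps = np.zeros(len(A))                  # perspective corrections, 0 = scaled orthography
    for _ in range(iters):
        I = B @ (x[1:] * (1 + eps) - x[0])
        J = B @ (y[1:] * (1 + eps) - y[0])
        s = (np.linalg.norm(I) + np.linalg.norm(J)) / 2   # scale factor f / Z0
        i = I / np.linalg.norm(I)
        j = J / np.linalg.norm(J)
        k = np.cross(i, j)
        k /= np.linalg.norm(k)
        z0 = focal / s
        new_eps = A @ k / z0                # updated corrections from the new pose
        converged = np.max(np.abs(new_eps - eps)) < tol
        eps = new_eps
        if converged:
            break
    R = np.vstack([i, j, k])
    T = np.array([x[0] / s, y[0] / s, z0])  # translation of M0 in camera coordinates
    return R, T

def within_reported_range(pitch, yaw):
    """Ranges reported on the slide: pitch in [0°, 15°], yaw in [-10°, 10°]."""
    return 0.0 <= pitch <= 15.0 and -10.0 <= yaw <= 10.0

# Hypothetical 3D face landmarks (mm): x right, y down, z away from the camera.
model = np.array([
    [0.0, 0.0, 0.0],        # nose tip (reference point M0)
    [0.0, 63.6, 12.5],      # chin
    [-43.3, -32.7, 26.0],   # left eye corner
    [43.3, -32.7, 26.0],    # right eye corner
    [-28.9, 28.9, 24.1],    # left mouth corner
    [28.9, 28.9, 24.1],     # right mouth corner
])
focal = 800.0
R_true = rotation_from_angles(10.0, -5.0, 2.0)
T_true = np.array([15.0, -10.0, 600.0])
cam = model @ R_true.T + T_true             # landmarks in camera coordinates
image = focal * cam[:, :2] / cam[:, 2:3]    # pinhole projection

R_est, T_est = posit(model, image, focal)
pitch_est, yaw_est = pitch_yaw_from_rotation(R_est)
print(f"pitch {pitch_est:.2f} deg, yaw {yaw_est:.2f} deg, "
      f"in range: {within_reported_range(pitch_est, yaw_est)}")
```

POSIT needs at least four non-coplanar model points and converges quickly when the face's depth is small relative to its distance from the camera, as in this webcam-style setup.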
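The lighting conditions are characterized by mean grayscale intensity. A minimal way to compute it is sketched below, assuming RGB input and ITU-R BT.601 luma weights (the conversion the paper used is not stated); the synthetic images and the darkening factor are hypothetical.

```python
import numpy as np

# ITU-R BT.601 luma weights for R, G, B (assumed grayscale conversion).
BT601 = np.array([0.299, 0.587, 0.114])

def mean_grayscale_intensity(rgb: np.ndarray) -> float:
    """Mean luma of an (H, W, 3) RGB image on a 0-255 scale."""
    gray = rgb[..., :3].astype(np.float64) @ BT601
    return float(gray.mean())

rng = np.random.default_rng(1)
normal_light = rng.integers(60, 255, size=(48, 64, 3)).astype(np.uint8)
dark_light = (normal_light * 0.4).astype(np.uint8)  # simulated darker condition

print(mean_grayscale_intensity(normal_light), mean_grayscale_intensity(dark_light))
```

Averaging a single luma channel gives one number per image, which makes it easy to compare lighting conditions, as the slide does.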