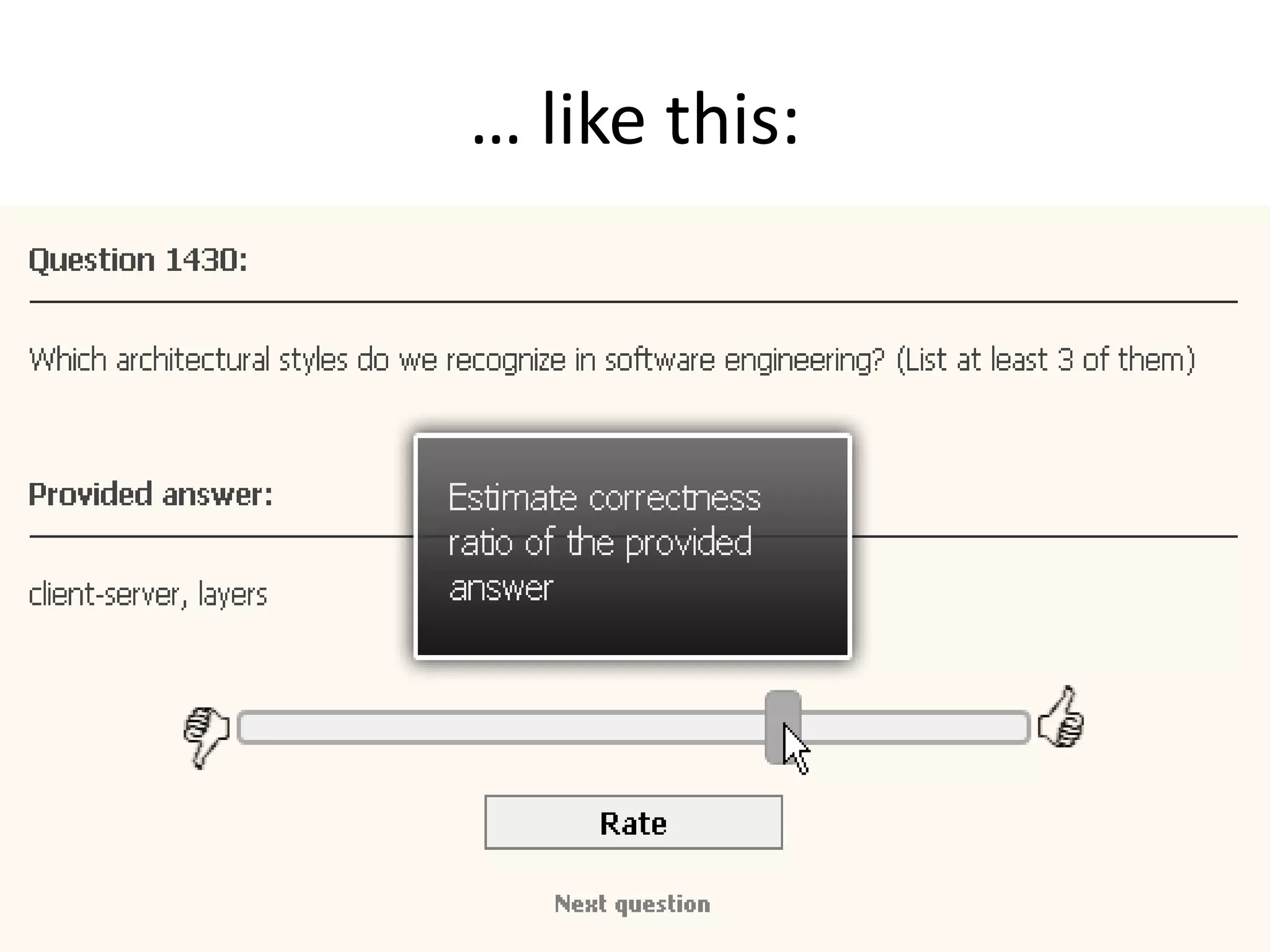

The document discusses using crowdsourcing among students to validate learning objects through interactive exercises, highlighting benefits such as cost-effectiveness and student motivation. It outlines challenges, including varied student skill levels and a tendency among some students to abuse the system, and presents a method for evaluating answers based on peer feedback. The findings indicate that student evaluations need careful management and that immediate feedback has the potential to enhance learning outcomes.