CVPR 2009 tutorial: Kernel Methods in Computer Vision, Part II: Statistics and Clustering with Kernels, Structured Output Learning

- 1. Statistics and Clustering with Kernels. Christoph Lampert & Matthew Blaschko. Max-Planck-Institute for Biological Cybernetics, Department Schölkopf: Empirical Inference, Tübingen, Germany; Visual Geometry Group, University of Oxford, United Kingdom. June 20, 2009.

- 2. Overview: Kernel Ridge Regression; Kernel PCA; Spectral Clustering; Kernel Covariance and Canonical Correlation Analysis; Kernel Measures of Independence.

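- 4. Kernel Ridge Regression. Regularized least squares regression: $\min_w \sum_{i=1}^n (y_i - \langle w, x_i\rangle)^2 + \lambda\|w\|^2$. Replace $w$ with $\sum_{i=1}^n \alpha_i x_i$:
  $$\min_\alpha \sum_{i=1}^n \Big(y_i - \sum_{j=1}^n \alpha_j \langle x_i, x_j\rangle\Big)^2 + \lambda \sum_{i=1}^n\sum_{j=1}^n \alpha_i\alpha_j\langle x_i, x_j\rangle$$
  The optimal $\alpha^*$ has the closed-form solution $\alpha^* = (K + \lambda I)^{-1} y$, where $K_{ij} = \langle x_i, x_j\rangle$.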
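
The closed form translates directly into a few lines of NumPy. This is a minimal sketch, not the tutorial's code: it assumes rows of `X` are samples (the slides put samples in columns), uses a Gaussian kernel chosen for illustration, and `rbf_kernel`, `krr_fit` and `krr_predict` are hypothetical helper names. A point $x$ is scored as $f(x) = \sum_i \alpha_i k(x, x_i)$.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    # Gaussian kernel k(a, b) = exp(-gamma * ||a - b||^2); rows of A and B are samples
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def krr_fit(K, y, lam):
    # alpha* = (K + lam I)^{-1} y, computed with a linear solve instead of an explicit inverse
    return np.linalg.solve(K + lam * np.eye(K.shape[0]), y)

def krr_predict(K_test_train, alpha):
    # f(x) = sum_i alpha_i k(x, x_i); rows of K_test_train index the points to score
    return K_test_train @ alpha

# Usage: fit a noisy sine with the Gaussian kernel
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(40, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=40)
alpha = krr_fit(rbf_kernel(X, X), y, lam=0.1)
y_hat = krr_predict(rbf_kernel(X, X), alpha)
```

With the linear kernel $K = XX^T$ the fitted values coincide with those of primal ridge regression, which is a quick sanity check of the dual formula.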

- 5. PCA. Two equivalent formulations: minimize the squared error between the original data and its projection onto a lower-dimensional subspace, or maximize the variance of the projected data. Solution: the leading eigenvectors of the empirical covariance matrix.

- 6. PCA continued. Empirical covariance matrix (biased): $\hat C = \frac{1}{n}\sum_i (x_i - \mu)(x_i - \mu)^T$, where $\mu$ is the sample mean. $\hat C$ is symmetric positive (semi-)definite. PCA: $\max_w \frac{w^T \hat C w}{\|w\|^2}$.

- 9. Data Centering. We use $X$ to denote the design matrix, in which every column is a data sample. We define the centering matrix $H = I - \frac{1}{n}ee^T$, where $e$ is the vector of all ones. $H$ is idempotent, symmetric, and positive semi-definite (rank $n - 1$). The design matrix of the centered data can be written compactly as $XH$: the $i$th column of $XH$ equals $x_i - \mu$, where $\mu = \frac{1}{n}\sum_j x_j$ is the sample mean.

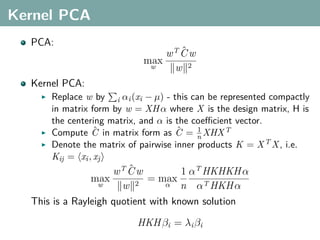
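
The stated properties of $H$ are easy to check numerically. A small NumPy sketch, using the slides' convention that columns of `X` are samples; `centering_matrix` is a hypothetical helper name and the data are made up.

```python
import numpy as np

def centering_matrix(n):
    # H = I - (1/n) e e^T, with e the all-ones vector
    return np.eye(n) - np.ones((n, n)) / n

X = np.array([[1.0, 2.0, 6.0],
              [0.0, 4.0, 2.0]])   # d = 2 features, n = 3 samples stored as columns
H = centering_matrix(X.shape[1])
XH = X @ H                        # each column becomes x_i - mu
```

Because $HKH = (XH)^T(XH)$, the same matrix centers a kernel matrix without ever forming the mean in feature space.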

- 13. Kernel PCA. PCA: $\max_w \frac{w^T\hat C w}{\|w\|^2}$. Kernel PCA: replace $w$ by $\sum_i \alpha_i (x_i - \mu)$; this can be represented compactly in matrix form by $w = XH\alpha$, where $X$ is the design matrix, $H$ is the centering matrix, and $\alpha$ is the coefficient vector. Compute $\hat C$ in matrix form as $\hat C = \frac{1}{n}XHX^T$. Denote the matrix of pairwise inner products $K = X^TX$, i.e. $K_{ij} = \langle x_i, x_j\rangle$. Then
  $$\max_w \frac{w^T\hat C w}{\|w\|^2} = \max_\alpha \frac{1}{n}\,\frac{\alpha^T HKHKH\alpha}{\alpha^T HKH\alpha}.$$
  This is a Rayleigh quotient with known solution $HKH\beta_i = \lambda_i\beta_i$.

- 14. Kernel PCA. Set $\beta$ to be the eigenvectors of $HKH$ and $\lambda$ the corresponding eigenvalues. Set $\alpha = \beta\lambda^{-\frac{1}{2}}$, so that $\|w\|^2 = \alpha^T HKH\alpha = 1$. Example, image super-resolution (fig: Kim et al., PAMI 2005).
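
A minimal NumPy sketch of these two steps; `center_kernel` and `kernel_pca` are hypothetical names. It assumes the requested number of components does not exceed the rank of $HKH$ (otherwise $\lambda^{-1/2}$ is undefined). The projection of training point $j$ onto component $k$ is $w_k^T(x_j - \mu) = (HKH\alpha_k)_j$.

```python
import numpy as np

def center_kernel(K):
    # HKH with H = I - (1/n) e e^T: the Gram matrix of the centered feature vectors
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H

def kernel_pca(K, n_components):
    Kc = center_kernel(K)
    lam, beta = np.linalg.eigh(Kc)                 # eigenvalues in ascending order
    order = np.argsort(lam)[::-1][:n_components]   # keep the largest ones
    lam, beta = lam[order], beta[:, order]
    alpha = beta / np.sqrt(lam)                    # alpha = beta * lambda^{-1/2}, so ||w|| = 1
    projections = Kc @ alpha                       # row j: coordinates of x_j - mu
    return alpha, projections
```

With the linear kernel $K = XX^T$ (rows of `X` as samples) the projections agree, up to sign, with ordinary PCA on the centered data.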

- 17. Spectral Clustering. Represent the similarity of images by weights on a graph. Normalized cuts minimizes the ratio of the cost of a cut to the volume of each cluster: $\mathrm{Ncut}(A_1, \dots, A_k) = \sum_{i=1}^k \frac{\mathrm{cut}(A_i, \bar A_i)}{\mathrm{vol}(A_i)}$. Exact optimization is NP-hard, but a relaxed version can be solved by finding the eigenvectors of the normalized graph Laplacian $L = I - D^{-\frac{1}{2}}AD^{-\frac{1}{2}}$, where $D$ is the diagonal matrix whose entries are the row sums of the similarity matrix $A$.

- 18. Spectral Clustering (continued). Compute $L = I - D^{-\frac{1}{2}}AD^{-\frac{1}{2}}$. Map the data points using the eigenvectors of $L$ with the smallest eigenvalues, then cluster in the mapped space using k-means. Example, handwritten digits (0-9) (fig: Xiaofei He).
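
A self-contained NumPy sketch of this pipeline. Two choices go beyond the slide and are assumptions: the embedded rows are normalized to unit length (as in the Ng, Jordan & Weiss variant), and a small k-means with deterministic farthest-point initialization stands in for a library implementation. All function names are hypothetical.

```python
import numpy as np

def normalized_laplacian(A):
    # L = I - D^{-1/2} A D^{-1/2}, D = diag(row sums of A)
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return np.eye(len(A)) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]

def kmeans(Z, k, n_iter=50):
    # farthest-point initialisation, then Lloyd iterations
    centers = [Z[0]]
    for _ in range(1, k):
        dist = np.min([((Z - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Z[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(((Z[:, None, :] - centers[None]) ** 2).sum(axis=-1), axis=1)
        centers = np.array([Z[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
    return labels

def spectral_clustering(A, k):
    evals, evecs = np.linalg.eigh(normalized_laplacian(A))
    Z = evecs[:, :k]                                    # eigenvectors of the k smallest eigenvalues
    Z = Z / np.linalg.norm(Z, axis=1, keepdims=True)    # row normalisation (assumed variant)
    return kmeans(Z, k)
```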

- 21. Multimodal Data. A latent aspect $z$ relates data that are present in multiple modalities, e.g. images and text (figure: a graphical model in which $z$ is linked to both $\phi_x(x)$ and $\phi_y(y)$). Example: $x$ is an image and $y$ its caption, "A view from Idyllwild, California, with pine trees and snow-capped Marion Mountain under a blue sky." Learn kernelized projections that relate both spaces.

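- 24. Kernel Covariance. KPCA maximizes auto-covariance; instead, maximize cross-covariance: $\max_{w_x, w_y} \frac{w_x^T C_{xy} w_y}{\|w_x\|\|w_y\|}$. This can also be kernelized (replace $w_x$ by $\sum_i \alpha_i (x_i - \mu_x)$, etc.):
  $$\max_{\alpha,\beta} \frac{\alpha^T HK_xHK_yH\beta}{\sqrt{\alpha^T HK_xH\alpha\;\beta^T HK_yH\beta}}$$
  The solution is given by the (generalized) eigenproblem
  $$\begin{pmatrix} 0 & HK_xHK_yH \\ HK_yHK_xH & 0 \end{pmatrix}\begin{pmatrix}\alpha\\ \beta\end{pmatrix} = \lambda \begin{pmatrix} HK_xH & 0 \\ 0 & HK_yH\end{pmatrix}\begin{pmatrix}\alpha\\ \beta\end{pmatrix}$$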

- 26. Kernel Canonical Correlation Analysis (KCCA). Alternatively, maximize correlation instead of covariance: $\max_{w_x, w_y} \frac{w_x^T C_{xy} w_y}{\sqrt{w_x^T C_{xx} w_x\; w_y^T C_{yy} w_y}}$. Kernelization is straightforward as before:
  $$\max_{\alpha,\beta} \frac{\alpha^T HK_xHK_yH\beta}{\sqrt{\alpha^T (HK_xH)^2\alpha\;\beta^T (HK_yH)^2\beta}}$$

- 29. KCCA (continued). Problem: if the data in either modality are linearly independent (as many dimensions as data points), there exists a projection of the data that respects any arbitrary ordering, so perfect correlation can always be achieved. This is even more likely when a kernel is used (e.g. Gaussian). Solution: regularize,
  $$\max_{w_x, w_y} \frac{w_x^T C_{xy} w_y}{\sqrt{(w_x^T C_{xx} w_x + \varepsilon_x\|w_x\|^2)(w_y^T C_{yy} w_y + \varepsilon_y\|w_y\|^2)}}$$
  As $\varepsilon_x \to \infty$ and $\varepsilon_y \to \infty$, the solution approaches maximum covariance.

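- 30. KCCA Algorithm. Compute $K_x$ and $K_y$. Solve for $\alpha$ and $\beta$ as the generalized eigenvectors of
  $$\begin{pmatrix} 0 & HK_xHK_yH \\ HK_yHK_xH & 0 \end{pmatrix}\begin{pmatrix}\alpha\\ \beta\end{pmatrix} = \lambda \begin{pmatrix} (HK_xH)^2 + \varepsilon_x HK_xH & 0 \\ 0 & (HK_yH)^2 + \varepsilon_y HK_yH\end{pmatrix}\begin{pmatrix}\alpha\\ \beta\end{pmatrix}$$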
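
A NumPy sketch of the top KCCA direction. Rather than calling a generalized eigensolver on the block system, whose right-hand side is singular because $He = 0$, it cancels the common factors $HK_xH$ and $HK_yH$ (valid on their ranges) and solves the equivalent problem $(HK_xH + \varepsilon_xI)^{-1}HK_yH\,(HK_yH + \varepsilon_yI)^{-1}HK_xH\,\alpha = \lambda^2\alpha$, then recovers $\beta = \lambda^{-1}(HK_yH + \varepsilon_yI)^{-1}HK_xH\,\alpha$. This reduction, the toy data, and the names `center` and `kcca_top` are choices made for this sketch, not the tutorial's.

```python
import numpy as np

def center(K):
    # HKH with H = I - (1/n) e e^T
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H

def kcca_top(Kx, Ky, eps_x, eps_y):
    n = Kx.shape[0]
    Gx, Gy = center(Kx), center(Ky)
    Rx = np.linalg.solve(Gx + eps_x * np.eye(n), Gy)   # (HKxH + eps_x I)^{-1} HKyH
    Ry = np.linalg.solve(Gy + eps_y * np.eye(n), Gx)   # (HKyH + eps_y I)^{-1} HKxH
    evals, evecs = np.linalg.eig(Rx @ Ry)              # eigenvalues are lambda^2
    i = int(np.argmax(evals.real))
    lam = float(np.sqrt(max(evals[i].real, 0.0)))      # regularized canonical correlation
    alpha = evecs[:, i].real
    beta = Ry @ alpha / lam
    return lam, alpha, beta

# Usage: two views (linear kernels) that share one latent variable z
rng = np.random.default_rng(0)
z = rng.normal(size=60)
X = np.column_stack([z + 0.1 * rng.normal(size=60), rng.normal(size=60)])
Y = np.column_stack([rng.normal(size=60), -z + 0.1 * rng.normal(size=60), rng.normal(size=60)])
lam, alpha, beta = kcca_top(X @ X.T, Y @ Y.T, 0.1, 0.1)
u = center(X @ X.T) @ alpha   # w_x^T (x_j - mu_x) for every sample j
v = center(Y @ Y.T) @ beta    # w_y^T (y_j - mu_y)
```

The regularized correlation `lam` never exceeds 1, and increasing `eps_x`, `eps_y` shrinks it toward the covariance solution, as the slides describe.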

- 32. Content-Based Image Retrieval with KCCA (Hardoon et al., 2004). Training data consist of images with text captions. Learn embeddings of both spaces using KCCA with appropriately chosen image and text kernels. Retrieval consists of finding images whose embeddings are related to the embedding of the text query. This is a kind of multivariate regression.

- 37. Kernel Measures of Independence. We know how to measure correlation in the kernelized space. Independence implies zero correlation. Different kernels encode different statistical properties of the data. Use an appropriate kernel such that zero correlation in the Hilbert space implies independence.

- 41. Example: Polynomial Kernel. A first-degree (i.e. linear) polynomial kernel captures correlation only; a second-degree polynomial kernel captures all second-order statistics; and so on. A Gaussian kernel can be written
  $$k(x_i, x_j) = e^{-\gamma\|x_i - x_j\|^2} = e^{-\gamma\langle x_i, x_i\rangle}\, e^{2\gamma\langle x_i, x_j\rangle}\, e^{-\gamma\langle x_j, x_j\rangle},$$
  and we can use the identity $e^z = \sum_{i=0}^\infty \frac{1}{i!}z^i$. We can therefore view the Gaussian kernel as being related to an appropriately scaled infinite-dimensional polynomial kernel, which captures statistics of all orders.
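
A quick numerical check of this expansion, written as a plain-Python sketch with hypothetical helper names and made-up inputs: truncating the series after `order` terms gives a finite sum of scaled polynomial kernels of degree $0, \dots,$ `order`, which converges to the Gaussian kernel.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def gaussian_kernel(a, b, gamma):
    # exp(-gamma ||a - b||^2)
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def gaussian_via_series(a, b, gamma, order):
    # e^{-g<a,a>} * sum_{i=0}^{order} (2g<a,b>)^i / i! * e^{-g<b,b>}
    z = 2.0 * gamma * dot(a, b)
    series = sum(z ** i / math.factorial(i) for i in range(order + 1))
    return math.exp(-gamma * dot(a, a)) * series * math.exp(-gamma * dot(b, b))

a, b = [0.3, -0.2], [0.1, 0.4]
exact = gaussian_kernel(a, b, 0.5)
approx = [gaussian_via_series(a, b, 0.5, m) for m in (0, 1, 2, 10)]
```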

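- 45. Hilbert-Schmidt Independence Criterion. Let $\mathcal F$ be an RKHS on $\mathcal X$ with kernel $k_x(x, x')$ and $\mathcal G$ an RKHS on $\mathcal Y$ with kernel $k_y(y, y')$. Covariance operator: $C_{xy}: \mathcal G \to \mathcal F$ such that $\langle f, C_{xy} g\rangle_{\mathcal F} = E_{x,y}[f(x)g(y)] - E_x[f(x)]E_y[g(y)]$. HSIC is the squared Hilbert-Schmidt norm of $C_{xy}$ (Fukumizu et al. 2008): $\mathrm{HSIC} := \|C_{xy}\|_{HS}^2$. (Biased) empirical HSIC: $\widehat{\mathrm{HSIC}} := \frac{1}{n^2}\mathrm{Tr}(K_xHK_yH)$.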
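
The biased estimate needs only the two Gram matrices. A NumPy sketch with hypothetical helper names, restricted to 1-D samples and a Gaussian kernel for illustration; the usage mimics the ring example on the next slide, comparing the ring against a shuffled copy in which the dependence is destroyed.

```python
import numpy as np

def gaussian_gram(v, gamma):
    # Gram matrix of a 1-D sample v under k(a, b) = exp(-gamma (a - b)^2)
    d = v[:, None] - v[None, :]
    return np.exp(-gamma * d ** 2)

def hsic_biased(Kx, Ky):
    # (1/n^2) Tr(Kx H Ky H), H = I - (1/n) e e^T
    n = Kx.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return float(np.trace(Kx @ H @ Ky @ H)) / n ** 2

# Usage: points on a ring have (nearly) uncorrelated but dependent coordinates
rng = np.random.default_rng(0)
t = rng.uniform(0, 2 * np.pi, 200)
x, y = np.cos(t), np.sin(t)
dependent = hsic_biased(gaussian_gram(x, 2.0), gaussian_gram(y, 2.0))
shuffled = hsic_biased(gaussian_gram(x, 2.0), gaussian_gram(rng.permutation(y), 2.0))
```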

- 46. Hilbert-Schmidt Independence Criterion (continued). Ring-shaped density, correlation approx. zero; the maximum singular vectors (functions) of $C_{xy}$. (Figure panels: samples of $(X, Y)$ from the ring, correlation −0.00; dependence witness for $X$, $f(x)$; dependence witness for $Y$, $g(y)$; scatter of $g(Y)$ against $f(X)$, correlation −0.90, COCO: 0.14.)

- 49. Hilbert-Schmidt Normalized Independence Criterion. HSIC is analogous to cross-covariance. Can we construct a version analogous to correlation? Simple modification: decompose the covariance operator (Baker 1973) as $C_{xy} = C_{xx}^{\frac{1}{2}} V_{xy} C_{yy}^{\frac{1}{2}}$, where $V_{xy}$ is the normalized cross-covariance operator (its maximum singular value is bounded by 1). Use the norm of $V_{xy}$ instead of the norm of $C_{xy}$.

- 51. Hilbert-Schmidt Normalized Independence Criterion (continued). Define the normalized independence criterion to be the Hilbert-Schmidt norm of $V_{xy}$:
  $$\mathrm{HSNIC} := \frac{1}{n^2}\mathrm{Tr}\left[HK_xH(HK_xH + \varepsilon_x I)^{-1} HK_yH(HK_yH + \varepsilon_y I)^{-1}\right]$$
  where $\varepsilon_x$ and $\varepsilon_y$ are regularization parameters as in KCCA. If the kernels on $x$ and $y$ are characteristic (e.g. Gaussian kernels, see Fukumizu et al., 2008), then $\|C_{xy}\|_{HS}^2 = 0$ and $\|V_{xy}\|_{HS}^2 = 0$ each hold iff $x$ and $y$ are independent!
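
A direct NumPy transcription of the empirical HSNIC, as a sketch with hypothetical helper names, 1-D samples, and a Gaussian kernel chosen for illustration; the regularizers $\varepsilon_x, \varepsilon_y$ are free parameters, as in KCCA.

```python
import numpy as np

def gaussian_gram(v, gamma):
    # Gram matrix of a 1-D sample v under k(a, b) = exp(-gamma (a - b)^2)
    d = v[:, None] - v[None, :]
    return np.exp(-gamma * d ** 2)

def hsnic(Kx, Ky, eps_x, eps_y):
    # (1/n^2) Tr[ HKxH (HKxH + eps_x I)^{-1} HKyH (HKyH + eps_y I)^{-1} ]
    n = Kx.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    Gx, Gy = H @ Kx @ H, H @ Ky @ H
    Rx = Gx @ np.linalg.inv(Gx + eps_x * np.eye(n))
    Ry = Gy @ np.linalg.inv(Gy + eps_y * np.eye(n))
    return float(np.trace(Rx @ Ry)) / n ** 2
```

The constant $1/n^2$ follows the slide; it does not affect comparisons made at a fixed sample size.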

- 56. Applications of HS(N)IC. Independence tests: is there anything to gain from the use of multi-modal data? Kernel ICA. Maximizing dependence with respect to some model parameters: kernel target alignment (Cristianini et al., 2001); learning spectral clustering (Bach & Jordan, 2003), which relates kernel learning and clustering; taxonomy discovery (Blaschko & Gretton, 2008).

- 60. Summary. In this section we learned how to: do basic operations in kernel space, such as regularized least squares regression, data centering, and PCA; learn with multi-modal data (kernel covariance, KCCA); use kernels to construct statistical independence tests, by using appropriate kernels to capture the relevant statistics, measuring dependence by the norm of the (normalized) covariance operator, and obtaining closed-form solutions that require only the kernel matrices for each modality. Questions?

- 61. Structured Output Learning. Christoph Lampert & Matthew Blaschko. Max-Planck-Institute for Biological Cybernetics, Department Schölkopf: Empirical Inference, Tübingen, Germany; Visual Geometry Group, University of Oxford, United Kingdom. June 20, 2009.

- 64. What is Structured Output Learning? Regression maps from an input space to an output space: $g: \mathcal X \to \mathcal Y$. In typical scenarios, $\mathcal Y \equiv \mathbb R$ (regression) or $\mathcal Y \equiv \{-1, 1\}$ (classification). Structured output learning extends this concept to more complex and interdependent output spaces.

- 65. Examples of Structured Output Problems in Computer Vision: multi-class classification (Crammer & Singer, 2001); hierarchical classification (Cai & Hofmann, 2004); segmentation of 3D scan data (Anguelov et al., 2005); learning a CRF model for stereo vision (Li & Huttenlocher, 2008); object localization (Blaschko & Lampert, 2008); segmentation with a learned CRF model (Szummer et al., 2008); ... More examples at CVPR 2009.

- 68. Generalization of Regression. Direct discriminative learning of $g: \mathcal X \to \mathcal Y$, penalizing errors for this mapping. Two basic assumptions are employed: (1) a compatibility function $f: \mathcal X \times \mathcal Y \to \mathbb R$ is used, and $g$ takes the form of a decoding function $g(x) = \operatorname{argmax}_y f(x, y)$; (2) $f$ is linear w.r.t. a joint kernel: $f(x, y) = \langle w, \phi(x, y)\rangle$.

- 71. Multi-Class Joint Feature Map. Simple joint kernel map: define $\phi_y(y_i)$ to be the vector with 1 in the place of the current class and 0 elsewhere, $\phi_y(y_i) = [0, \dots, 1, \dots, 0]^T$, with the 1 in the $k$th position if $y_i$ represents a sample that is a member of class $k$. $\phi_x(x_i)$ can result from any kernel over $\mathcal X$: $k_x(x_i, x_j) = \langle\phi_x(x_i), \phi_x(x_j)\rangle$. Set $\phi(x_i, y_i) = \phi_y(y_i) \otimes \phi_x(x_i)$, where $\otimes$ denotes the Kronecker product.

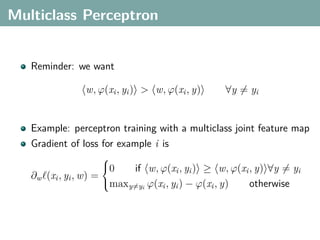
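
In code, the Kronecker product simply copies $\phi_x(x)$ into the block of $w$ that belongs to the class. A NumPy sketch with hypothetical helper names and made-up vectors:

```python
import numpy as np

def one_hot(k, n_classes):
    # phi_y(y): 1 in the k-th position, 0 elsewhere
    e = np.zeros(n_classes)
    e[k] = 1.0
    return e

def joint_feature(phi_x, k, n_classes):
    # phi(x, y) = phi_y(y) ⊗ phi_x(x): phi_x(x) placed in the block of class k
    return np.kron(one_hot(k, n_classes), phi_x)

a, b = np.array([1.0, 2.0]), np.array([3.0, -1.0])
print(joint_feature(a, 1, 3))   # [0. 0. 1. 2. 0. 0.]
```

Consequently $\langle\phi(x, y), \phi(x', y')\rangle = [y = y']\,k_x(x, x')$, and $\langle w, \phi(x, y)\rangle$ only reads the block of $w$ belonging to class $y$.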

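- 73. Multiclass Perceptron. Reminder: we want $\langle w, \phi(x_i, y_i)\rangle > \langle w, \phi(x_i, y)\rangle\ \forall y \neq y_i$. Example: perceptron training with a multiclass joint feature map. The perceptron update for example $i$ (the negative subgradient of its loss) is $0$ if $\langle w, \phi(x_i, y_i)\rangle > \langle w, \phi(x_i, y)\rangle\ \forall y \neq y_i$, and $\phi(x_i, y_i) - \phi(x_i, \hat y)$ otherwise, where $\hat y = \operatorname{argmax}_{y \neq y_i}\langle w, \phi(x_i, y)\rangle$.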
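
A minimal NumPy sketch of this training loop. It stores $w$ as a matrix whose row $y$ is the block of $w$ for class $y$, so $\langle w, \phi(x, y)\rangle$ is the dot product of row $y$ with $\phi_x(x)$. Names and data are hypothetical; ties count as mistakes so that training can leave $w = 0$, and a constant 1 appended to $\phi_x$ acts as a bias (an assumption).

```python
import numpy as np

def scores(W, phi_x):
    # <w, phi(x, y)> for every y; row y of W is the block of w for class y
    return W @ phi_x

def train_perceptron(Phi, labels, n_classes, epochs=20):
    W = np.zeros((n_classes, Phi.shape[1]))
    for _ in range(epochs):
        for phi_x, yi in zip(Phi, labels):
            s = scores(W, phi_x)
            s_wrong = s.copy()
            s_wrong[yi] = -np.inf
            y_hat = int(np.argmax(s_wrong))   # highest-scoring wrong label
            if s[yi] <= s[y_hat]:             # margin violated (ties included)
                W[yi] += phi_x                # w += phi(x_i, y_i) - phi(x_i, y_hat)
                W[y_hat] -= phi_x
    return W

# Usage: three separable clusters with features (x1, x2, 1)
Phi = np.array([[5.0, 0.0, 1.0], [5.5, 0.5, 1.0], [0.0, 5.0, 1.0],
                [0.5, 5.5, 1.0], [-5.0, -5.0, 1.0], [-5.5, -4.5, 1.0]])
labels = np.array([0, 0, 1, 1, 2, 2])
W = train_perceptron(Phi, labels, 3)
pred = np.argmax(Phi @ W.T, axis=1)
```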

- 74. Perceptron Training with Multiclass Joint Feature Map (Credit: Lyndsey Pickup)

- 89. Perceptron Training with Multiclass Joint Feature Map: final result (Credit: Lyndsey Pickup)

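- 92. Crammer & Singer Multi-Class SVM. Instead of training using a perceptron, we can enforce a large margin and solve a batch convex optimization problem:
  $$\min_w \frac{1}{2}\|w\|^2 + C\sum_{i=1}^n \xi_i \quad \text{s.t.}\quad \langle w, \phi(x_i, y_i)\rangle - \langle w, \phi(x_i, y)\rangle \geq 1 - \xi_i\ \ \forall y \neq y_i$$
  This can also be written only in terms of kernels: $w = \sum_x\sum_y \alpha_{xy}\phi(x, y)$, so we can use a joint kernel $k: \mathcal X \times \mathcal Y \times \mathcal X \times \mathcal Y \to \mathbb R$, $k(x_i, y_i, x_j, y_j) = \langle\phi(x_i, y_i), \phi(x_j, y_j)\rangle$.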
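
With the multi-class map, the joint kernel factorizes as $k(x_i, y_i, x_j, y_j) = [y_i = y_j]\,k_x(x_i, x_j)$, so $f(x, y) = \sum_{j, y'}\alpha_{jy'}\,k(x_j, y', x, y)$ never needs explicit features. A sketch with a Gaussian $k_x$ chosen for illustration; the coefficients `alpha` would come from the SVM dual, which is not solved here, and all names and values are hypothetical.

```python
import numpy as np

def rbf(a, b, gamma=0.5):
    return float(np.exp(-gamma * np.sum((a - b) ** 2)))

def joint_kernel(xi, yi, xj, yj, kx=rbf):
    # <phi_y(yi) ⊗ phi_x(xi), phi_y(yj) ⊗ phi_x(xj)> = [yi == yj] * kx(xi, xj)
    return kx(xi, xj) if yi == yj else 0.0

def score(alpha, X_train, x, y, kx=rbf):
    # f(x, y) = <w, phi(x, y)> with w = sum_{j, y'} alpha[j, y'] phi(x_j, y')
    n, n_classes = alpha.shape
    return sum(alpha[j, yp] * joint_kernel(X_train[j], yp, x, y, kx)
               for j in range(n) for yp in range(n_classes))

def decode(alpha, X_train, x, kx=rbf):
    # g(x) = argmax_y f(x, y)
    return int(np.argmax([score(alpha, X_train, x, y, kx) for y in range(alpha.shape[1])]))

X_train = np.array([[1.0, 0.0], [0.0, 1.0]])
alpha = np.array([[1.0, 0.0], [0.0, 1.0]])            # hypothetical dual coefficients
print(decode(alpha, X_train, np.array([0.9, 0.1])))   # 0
```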

- 95. Structured Output Support Vector Machines (SO-SVM). Frame structured prediction as a multiclass problem: predict a single element of $\mathcal Y$ and pay a penalty for mistakes. Not all errors are created equal: e.g. in an HMM, making only one mistake in a sequence should be penalized less than making 50 mistakes. Pay a loss proportional to the difference between the true and predicted output (task dependent): $\Delta(y_i, y)$.

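- 97. Margin Rescaling. Variant: margin-rescaled joint-kernel SVM for output space $\mathcal Y$ (Tsochantaridis et al., 2005). Idea: some wrong labels are worse than others, as measured by a loss $\Delta(y_i, y)$. Solve
  $$\min_w \|w\|^2 + C\sum_{i=1}^n \xi_i \quad\text{s.t.}\quad \langle w, \phi(x_i, y_i)\rangle - \langle w, \phi(x_i, y)\rangle \geq \Delta(y_i, y) - \xi_i\ \ \forall y \in \mathcal Y\setminus\{y_i\}$$
  Classify new samples using $g: \mathcal X \to \mathcal Y$, $g(x) = \operatorname{argmax}_{y \in \mathcal Y}\langle w, \phi(x, y)\rangle$. Another variant is slack rescaling (see Tsochantaridis et al., 2005).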

- 99. Label Sequence Learning. For, e.g., handwritten character recognition, it may be useful to include a temporal model in addition to learning each character individually. As a simple example, take an HMM. We need to model emission probabilities and transition probabilities, and we learn these discriminatively.

- 104. A Joint Kernel Map for Label Sequence Learning. Emissions (blue): $f_e(x_i, y_i) = \langle w_e, \phi_e(x_i, y_i)\rangle$; we can simply use the multi-class joint feature map for $\phi_e$. Transitions (green): $f_t(y_i, y_{i+1}) = \langle w_t, \phi_t(y_i, y_{i+1})\rangle$; we can use $\phi_t(y_i, y_{i+1}) = \phi_y(y_i) \otimes \phi_y(y_{i+1})$.

- 106. A Joint Kernel Map for Label Sequence Learning (continued). For an HMM, $p(x, y) \propto \prod_i e^{f_e(x_i, y_i)}\prod_i e^{f_t(y_i, y_{i+1})}$, so
  $$f(x, y) = \sum_i f_e(x_i, y_i) + \sum_i f_t(y_i, y_{i+1}) = \sum_i\langle w_e, \phi_e(x_i, y_i)\rangle + \sum_i\langle w_t, \phi_t(y_i, y_{i+1})\rangle$$
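
To make the decomposition concrete, here is a NumPy sketch that scores a label sequence both as the sum of emission and transition terms and as a single inner product $\langle w, \Phi(x, y)\rangle$ with stacked features. It assumes the emission features $\phi_x(x_i)$ are the raw observation vectors; names and random data are hypothetical.

```python
import numpy as np

def sequence_score(W_e, W_t, X, y):
    # sum_i <w_e, phi_e(x_i, y_i)> + sum_i <w_t, phi_t(y_i, y_{i+1})>
    # W_e[k]: emission weights of label k; W_t[k, l]: weight of transition k -> l
    emit = sum(W_e[y[i]] @ X[i] for i in range(len(y)))
    trans = sum(W_t[y[i], y[i + 1]] for i in range(len(y) - 1))
    return float(emit + trans)

def joint_feature(X, y, n_labels):
    # Phi(x, y) = [sum_i phi_y(y_i) ⊗ x_i, sum_i phi_y(y_i) ⊗ phi_y(y_{i+1})]
    eye = np.eye(n_labels)
    phi_e = sum((np.kron(eye[y[i]], X[i]) for i in range(len(y))),
                np.zeros(n_labels * X.shape[1]))
    phi_t = sum((np.kron(eye[y[i]], eye[y[i + 1]]) for i in range(len(y) - 1)),
                np.zeros(n_labels ** 2))
    return np.concatenate([phi_e, phi_t])

rng = np.random.default_rng(0)
W_e, W_t = rng.normal(size=(3, 2)), rng.normal(size=(3, 3))
X, y = rng.normal(size=(4, 2)), [0, 2, 1, 1]
w = np.concatenate([W_e.ravel(), W_t.ravel()])   # w = [w_e, w_t]
```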

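- 108. Constraint Generation.
  $$\min_w \|w\|^2 + C\sum_{i=1}^n \xi_i \quad\text{s.t.}\quad \langle w, \phi(x_i, y_i)\rangle - \langle w, \phi(x_i, y)\rangle \geq \Delta(y_i, y) - \xi_i\ \ \forall y \in \mathcal Y\setminus\{y_i\}$$
  Initialize the constraint set to be empty. Iterate until convergence: solve the optimization using the current constraint set, then add the maximally violated constraint for the current solution.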
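
For a small, enumerable output space, the step "add the maximally violated constraint" can be sketched by brute force: for example $i$, find $\hat y = \operatorname{argmax}_{y \neq y_i}\Delta(y_i, y) + \langle w, \phi(x_i, y)\rangle$ and the slack it requires. The quadratic program of the outer loop is not shown; the names, the toy one-hot feature map, and the losses are hypothetical.

```python
import numpy as np

def most_violated(w, phi, x_i, y_i, labels, delta):
    # y_hat = argmax_{y != y_i} Delta(y_i, y) + <w, phi(x_i, y)>, found by enumeration
    candidates = [y for y in labels if y != y_i]
    y_hat = max(candidates, key=lambda y: delta(y_i, y) + w @ phi(x_i, y))
    # smallest xi_i >= 0 that satisfies every constraint of example i
    required = delta(y_i, y_hat) + w @ phi(x_i, y_hat) - w @ phi(x_i, y_i)
    return y_hat, max(float(required), 0.0)

phi = lambda x, y: np.kron(np.eye(3)[y], x)    # multi-class joint feature map
w = np.array([2.0, 1.5, 0.0])                  # class scores 2.0, 1.5, 0.0 for x = [1]
x = np.array([1.0])
zero_one = lambda a, b: float(a != b)
costly = lambda a, b: 3.0 if (a, b) == (0, 2) else float(a != b)
```

With the 0/1 loss the most violated label for $y_i = 0$ is class 1; making the mistake $0 \to 2$ expensive moves the most violated constraint to class 2, which is exactly how $\Delta$ reshapes the margin.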

- 112. Constraint Generation with the Viterbi Algorithm. To find the maximally violated constraint, we need to maximize $\langle w, \phi(x_i, y)\rangle + \Delta(y_i, y)$ w.r.t. $y$. For arbitrary output spaces, we would need to iterate over all elements in $\mathcal Y$. For HMMs, $\max_y\langle w, \phi(x_i, y)\rangle$ can be found using the Viterbi algorithm. It is a simple modification of this procedure to incorporate $\Delta(y_i, y)$ (Tsochantaridis et al., 2004).
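
When $\Delta$ is the Hamming loss (the number of wrongly labeled positions, an assumption made for this sketch), it decomposes over positions, so loss-augmented decoding is ordinary Viterbi on unary scores with $+1$ added to every label that disagrees with $y_i$. Here `U[t, k]` holds the emission scores $f_e$ and `T[k, l]` the transition scores $f_t$; names and random data are hypothetical. If the maximizer is $y_i$ itself, no constraint of example $i$ is violated, since $\Delta(y_i, y_i) = 0$.

```python
import itertools
import numpy as np

def viterbi(U, T):
    # U[t, k]: score of label k at position t; T[k, l]: score of transition k -> l
    L, K = U.shape
    score = U[0].copy()                    # best score of a path ending in each label
    back = np.zeros((L, K), dtype=int)     # back-pointers
    for t in range(1, L):
        cand = score[:, None] + T          # cand[k, l]: best path ending in k, extended by l
        back[t] = np.argmax(cand, axis=0)
        score = cand[back[t], np.arange(K)] + U[t]
    path = [int(np.argmax(score))]
    for t in range(L - 1, 0, -1):
        path.append(int(back[t, path[-1]]))
    return path[::-1], float(np.max(score))

def loss_augmented_viterbi(U, T, y_true):
    # Hamming loss: add 1 to the unary score of every label that differs from y_true
    U_aug = U + 1.0
    U_aug[np.arange(len(y_true)), y_true] -= 1.0
    return viterbi(U_aug, T)

# Usage: compare against brute-force enumeration of all 3^4 label sequences
rng = np.random.default_rng(0)
U, T = rng.normal(size=(4, 3)), rng.normal(size=(3, 3))
y_true = [0, 1, 1, 2]

def augmented_objective(y):
    # <w, phi(x, y)> + Delta(y_true, y)
    s = sum(U[t, y[t]] for t in range(4)) + sum(T[y[t], y[t + 1]] for t in range(3))
    return s + sum(a != b for a, b in zip(y, y_true))

brute = max(itertools.product(range(3), repeat=4), key=augmented_objective)
path, value = loss_augmented_viterbi(U, T, y_true)
```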

- 114. Discriminative Training of Object Localization. Structured output learning is not restricted to outputs specified by graphical models. We can formulate object localization as a regression from an image to a bounding box, $g: \mathcal X \to \mathcal Y$, where $\mathcal X$ is the space of all images and $\mathcal Y$ is the space of all bounding boxes.

- 115. Joint Kernel between Images and Boxes: Restriction Kernel. Note: $x|_y$ (the image restricted to the box region) is again an image. Compare two images with boxes by comparing the images within the boxes: $k_{joint}((x, y), (x', y')) = k_{image}(x|_y, x'|_{y'})$. Any common image kernel is applicable: linear on cluster histograms, $k(h, h') = \sum_i h_i h'_i$; the $\chi^2$-kernel, $k_{\chi^2}(h, h') = \exp\left(-\frac{1}{\gamma}\sum_i \frac{(h_i - h'_i)^2}{h_i + h'_i}\right)$; the pyramid matching kernel, ... The resulting joint kernel is positive definite.
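
A sketch of the restriction kernel for bag-of-visual-words features, under stated assumptions: an image is given as feature-point locations plus visual-word ids, a box is `(left, top, right, bottom)`, $x|_y$ is represented by the word histogram of the points inside the box, and $k_{image}$ is the $\chi^2$-kernel above. All names and data are hypothetical.

```python
import numpy as np

def box_histogram(points, words, box, n_words):
    # histogram of visual words whose locations fall inside box = (left, top, right, bottom)
    left, top, right, bottom = box
    inside = ((points[:, 0] >= left) & (points[:, 0] <= right) &
              (points[:, 1] >= top) & (points[:, 1] <= bottom))
    return np.bincount(words[inside], minlength=n_words).astype(float)

def chi2_kernel(h, hp, gamma=1.0):
    s = h + hp
    mask = s > 0                       # bins empty in both histograms contribute 0
    return float(np.exp(-np.sum((h[mask] - hp[mask]) ** 2 / s[mask]) / gamma))

def restriction_kernel(img, box, img_p, box_p, n_words, gamma=1.0):
    # k_joint((x, y), (x', y')) = k_image(x|_y, x'|_y')
    return chi2_kernel(box_histogram(*img, box, n_words),
                       box_histogram(*img_p, box_p, n_words), gamma)

points = np.array([[1.0, 1.0], [2.0, 2.0], [8.0, 8.0]])
words = np.array([0, 1, 2])
img = (points, words)
k_same = restriction_kernel(img, (0, 0, 3, 3), img, (0, 0, 3, 3), n_words=3)
k_diff = restriction_kernel(img, (0, 0, 3, 3), img, (7, 7, 9, 9), n_words=3)
```

Two boxes in the same image can get a small kernel value while boxes in different images with similar content get a large one, which is the behaviour the next slide contrasts with tensor-product kernels.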

- 116. Restriction Kernel: Examples. For three pairs of images with boxes, $k_{joint}$, which equals $k$ applied to the box contents, is large for the first pair, small for the second, and could also be large for the third. Note: this behaves differently from the common tensor product kernel $k_{joint}((x, y), (x', y')) = k(x, x')k(y, y')$!

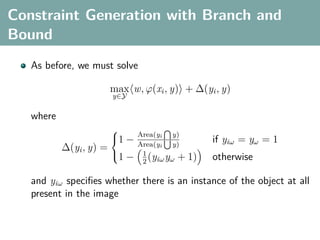

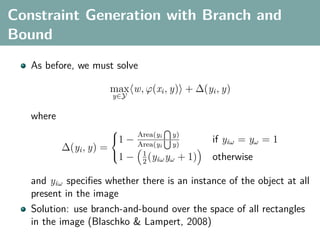

- 118. Constraint Generation with Branch and Bound. As before, we must solve $\max_{y \in \mathcal Y}\langle w, \phi(x_i, y)\rangle + \Delta(y_i, y)$, where
  $$\Delta(y_i, y) = \begin{cases} 1 - \dfrac{\mathrm{Area}(y_i \cap y)}{\mathrm{Area}(y_i \cup y)} & \text{if } y_{i\omega} = y_\omega = 1\\[4pt] 1 - \frac{1}{2}(y_{i\omega}y_\omega + 1) & \text{otherwise}\end{cases}$$
  and $y_{i\omega} \in \{-1, 1\}$ specifies whether an instance of the object is present in the image at all. Solution: use branch-and-bound over the space of all rectangles in the image (Blaschko & Lampert, 2008).
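
The loss is cheap to evaluate. A plain-Python sketch, assuming boxes are `(left, top, right, bottom)` with continuous coordinates and presence flags in $\{-1, 1\}$; the function names are hypothetical.

```python
def box_area(b):
    left, top, right, bottom = b
    return max(0.0, right - left) * max(0.0, bottom - top)

def localization_loss(yi_box, yi_present, y_box, y_present):
    if yi_present == 1 and y_present == 1:
        # 1 - Area(yi ∩ y) / Area(yi ∪ y)
        inter = (max(yi_box[0], y_box[0]), max(yi_box[1], y_box[1]),
                 min(yi_box[2], y_box[2]), min(yi_box[3], y_box[3]))
        a_int = box_area(inter)
        a_union = box_area(yi_box) + box_area(y_box) - a_int
        return 1.0 - a_int / a_union
    # 0 if both agree the object is absent, 1 if they disagree about presence
    return 1.0 - 0.5 * (yi_present * y_present + 1)
```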

- 121. Discriminative Training of Image Segmentation. Frame discriminative image segmentation as learning the parameters of a random field model. As in sequence learning, the problem decomposes over cliques in the graph. Set the loss to the number of incorrectly labeled pixels.

- 124. Constraint Generation with Graph Cuts. As the graph is loopy, we cannot use Viterbi. Loopy belief propagation is approximate and can lead to poor learning performance for structured output learning of graphical models (Finley & Joachims, 2008). Solution: use graph cuts (Szummer et al., 2008). $\Delta(y_i, y)$ can easily be incorporated into the energy function, since a per-pixel loss only changes the unary terms.
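
Why the loss is easy to add: with the per-pixel loss $\Delta(y_i, y) = \#\{p : y_p \neq y_{i,p}\}$ and the energy taken as the negative score, maximizing $\langle w, \phi(x_i, y)\rangle + \Delta(y_i, y)$ is the same as minimizing $E(y) - \Delta(y_i, y)$, which only subtracts 1 from the unary cost of every wrong label. The NumPy sketch below (binary labels, a 4-connected Potts pairwise term, hypothetical names) builds the augmented unaries and checks the identity by brute force on a 2×2 grid. The pairwise term is untouched, so a submodular energy stays submodular; no graph-cut solver is included.

```python
import itertools
import numpy as np

def energy(unary, pairwise_weight, labels):
    # unary[r, c, l]: cost of label l at pixel (r, c); Potts penalty on 4-connected neighbours
    H, W = labels.shape
    e = unary[np.arange(H)[:, None], np.arange(W)[None, :], labels].sum()
    e += pairwise_weight * (np.sum(labels[1:, :] != labels[:-1, :]) +
                            np.sum(labels[:, 1:] != labels[:, :-1]))
    return float(e)

def loss_augmented_unary(unary, y_true):
    # subtract 1 from the cost of every label that disagrees with y_true
    aug = unary - 1.0
    H, W = y_true.shape
    aug[np.arange(H)[:, None], np.arange(W)[None, :], y_true] += 1.0
    return aug

rng = np.random.default_rng(0)
unary = rng.normal(size=(2, 2, 2))
y_true = np.array([[0, 1], [1, 1]])
aug = loss_augmented_unary(unary, y_true)
for bits in itertools.product([0, 1], repeat=4):
    y = np.array(bits).reshape(2, 2)
    # E_aug(y) = E(y) - Hamming(y_true, y) for every labelling
    assert np.isclose(energy(aug, 0.7, y), energy(unary, 0.7, y) - np.sum(y != y_true))
```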

- 128. Summary of Structured Output Learning. Structured output learning is the prediction of items in complex and interdependent output spaces. We can train regressors into these spaces using a generalization of the support vector machine. We have shown examples for label sequence learning with Viterbi, object localization with branch and bound, and image segmentation with graph cuts. Questions?
