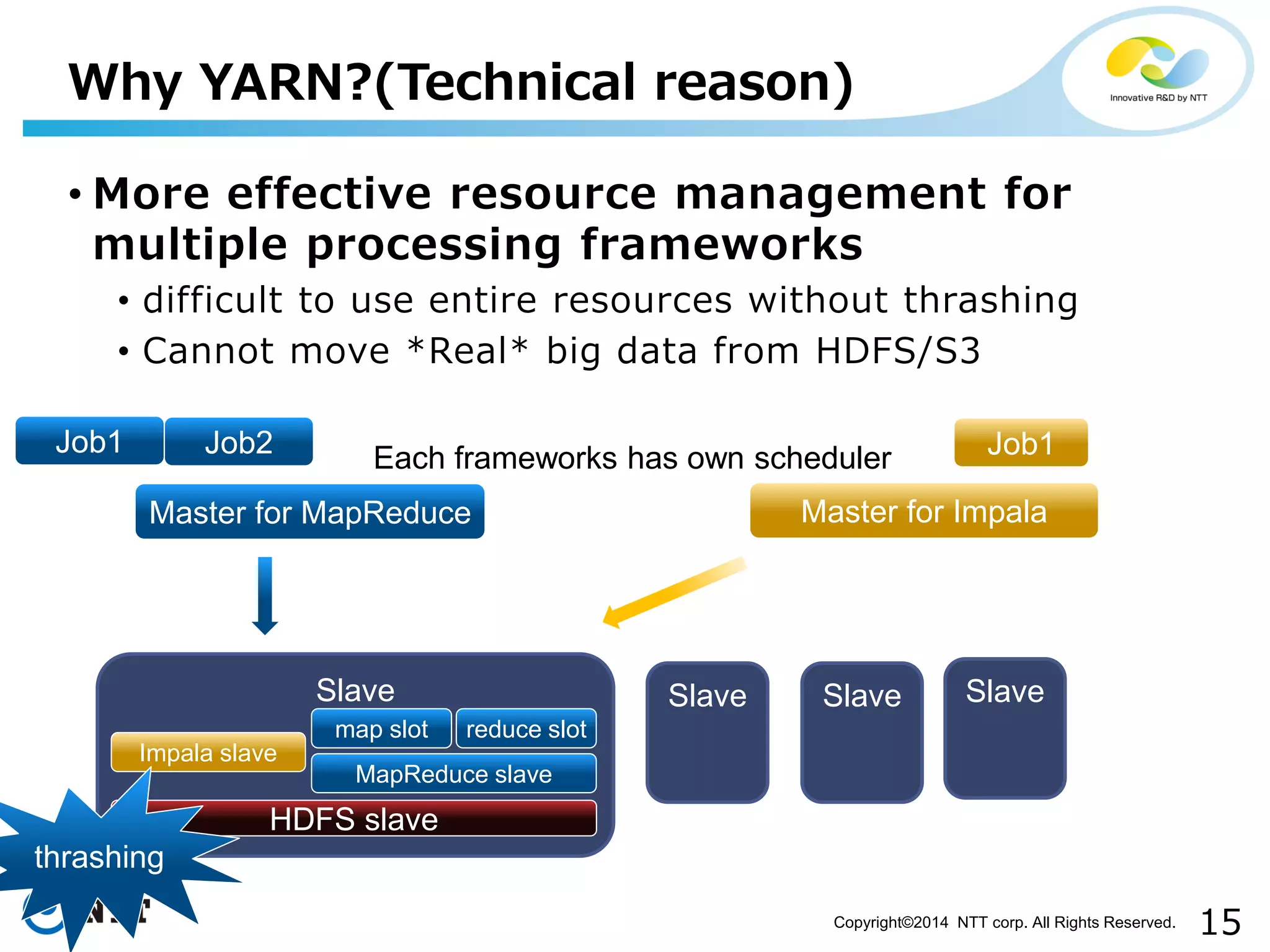

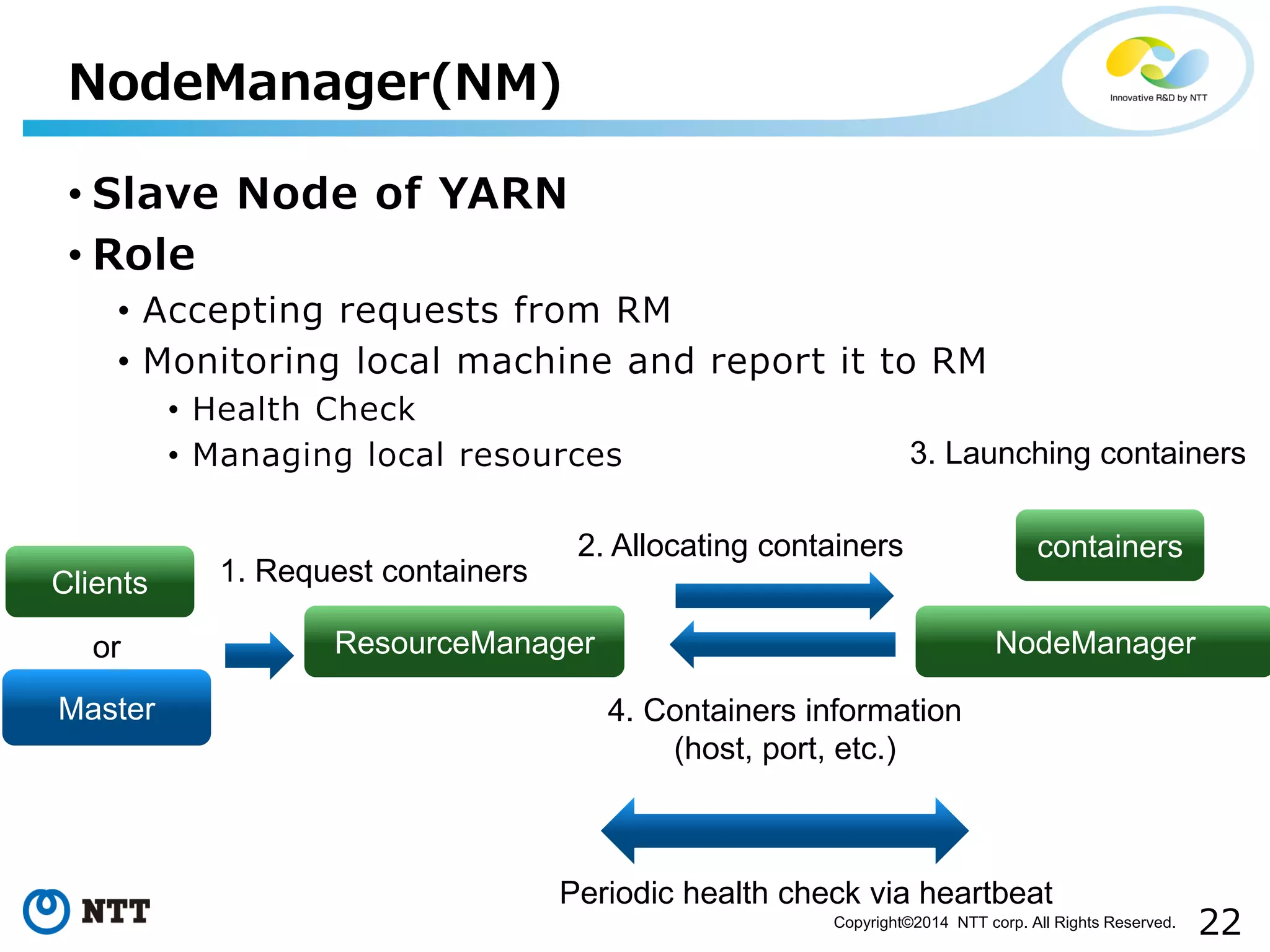

The document presents an overview of Apache Hadoop's evolution and upcoming features, particularly focusing on improvements in HDFS and YARN. Key features discussed include high availability, scalability enhancements, and the introduction of a resource management framework allowing for multiple processing engines. It highlights the rapid development of Hadoop 2 and outlines the advancements set for the 2.6 release, such as heterogeneous storage and resource allocation strategies.

![32

Copyright©2014 NTT corp. All Rights Reserved.

•HDFS-2832, HDFS-5682

•Handling various storage types in HDFS

•SSD, memory, disk, and so on.

•Setting quota per storage types

•Setting SSD quota on /home/user1 to 10 TB.

•Setting SSD quota on /home/user2 to 10 TB.

•(c) Not configuring any SSD quota on the remaining user directories (i.e. leaving it to defaults).

Heterogeneous Storages for HDFS Phase 2

<configuration>

...

<property>

<name>dfs.datanode.data.dir</name>

<value>[DISK]/mnt/sdc2/,[DISK]/mnt/sdd2,[SSD]/mnt/sde2</value>

</property>

...

</configuration>](https://image.slidesharecdn.com/dbtstokyo2014c32ntthadoop-141203014329-conversion-gate01/75/db-tech-showcase-Tokyo-2014-C32-Hadoop-by-NTT-32-2048.jpg)