Difference between logistic regression, shallow neural network and deep neural network

- 1. Difference Between Logistic Regression, Shallow Neural Network and Deep Neural Network, by Chode Amarnath

- 2. Learning Objectives: → Why do we use supervised learning instead of unsupervised learning for neural networks? → Why do we use logistic regression for neural networks?

- 4. Supervised learning → In supervised learning, we are given a data set and already know what the correct output should look like, with the idea that there is a relationship between the input and the output. → Supervised learning problems are categorized into 1) regression and 2) classification problems.

- 5. Classification → In a classification problem, we are trying to predict results in a discrete output. In other words, we are trying to map input variables into discrete categories. → The main goal of classification is to predict the target class (Yes/No). → The classification problem is just like the regression problem, except that the values we now want to predict take on only a small number of discrete values. For now, we will focus on the binary classification problem, in which y can take on only two values, 0 and 1.

- 6. Types of classification: → Binary classification: there are only two classes to predict, usually encoded as 1 or 0. → Multi-class classification: there are more than two class labels to predict.

- 10. Regression → In a regression problem, we are trying to predict results within a continuous output, meaning that we are trying to map input variables to some continuous function. → In a regression task, the target value is a continuously varying variable, such as a country's GDP or the price of a house.

- 15. Cost Function → We can measure the accuracy of our hypothesis function by using a cost function. → This cost function is also called the squared error function. → Our objective is to get the best possible line: the one for which the average squared vertical distance of the scattered points from the line is the least. → Ideally, the line should pass through all the points of our training data set. In such a case, the value of J(θ₀, θ₁) will be 0.
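The slide does not spell out the formula, so the following is a minimal sketch assuming the usual straight-line hypothesis hθ(x) = θ₀ + θ₁x and the common squared error cost J(θ₀, θ₁) = (1/2m) Σ (hθ(xᵢ) − yᵢ)², where the extra factor ½ is a convention that simplifies the derivative. The toy data points are made up and lie exactly on y = 1 + 2x, so the line through them gives J = 0.

```python
def hypothesis(theta0, theta1, x):
    """Straight-line hypothesis h_theta(x) = theta0 + theta1 * x (assumed form)."""
    return theta0 + theta1 * x


def squared_error_cost(theta0, theta1, xs, ys):
    """J(theta0, theta1) = 1/(2m) * sum((h(x_i) - y_i)^2) over the m training examples."""
    m = len(xs)
    total = sum((hypothesis(theta0, theta1, x) - y) ** 2 for x, y in zip(xs, ys))
    return total / (2 * m)


# Toy data lying exactly on y = 1 + 2x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

print(squared_error_cost(1.0, 2.0, xs, ys))  # line passes through every point -> 0.0
print(squared_error_cost(0.0, 2.0, xs, ys))  # every point is off by 1 -> 0.5
```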

- 19. We want an efficient algorithm (a piece of software) for automatically finding the values of θ₀ and θ₁ that minimize J(θ₀, θ₁).
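One standard algorithm for this is batch gradient descent, which the deck turns to later for logistic regression. The sketch below applies it to the same assumed squared error cost J(θ₀, θ₁) = (1/2m) Σ (θ₀ + θ₁xᵢ − yᵢ)², repeatedly stepping θ₀ and θ₁ against the partial derivatives of J. The learning rate `alpha`, the iteration count and the toy data are illustrative choices, not values from the slides.

```python
def gradient_descent(xs, ys, alpha=0.1, iterations=2000):
    """Batch gradient descent on J(theta0, theta1) = 1/(2m) * sum((theta0 + theta1*x - y)^2)."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0  # start from an arbitrary point
    for _ in range(iterations):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to theta0 and theta1.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update: both gradients were computed from the same old parameters.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
    return theta0, theta1


# Toy data lying exactly on y = 1 + 2x (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

theta0, theta1 = gradient_descent(xs, ys)
print(round(theta0, 3), round(theta1, 3))  # approaches theta0 = 1, theta1 = 2
```

Both parameters are updated from the same error values in each iteration, which is the usual "simultaneous update" form; too large an `alpha` would make the steps overshoot and diverge.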

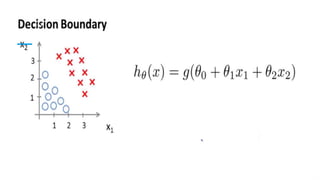

- 33. Logistic regression model → We would like our classifier to output values between 0 and 1, so we come up with a hypothesis that satisfies this property, i.e. its predictions lie between 0 and 1: hθ(x) = g(θᵀx), where g(z) = 1/(1 + e^(−z)) is the sigmoid (logistic) function. → As z goes to minus infinity, g(z) approaches zero. → As z approaches infinity, g(z) approaches one. → hθ(x) gives us the probability that our output is 1. For example, hθ(x) = 0.7 gives us a probability of 70% that our output is 1. The probability that our prediction is 0 is just the complement of the probability that it is 1 (e.g. if the probability that it is 1 is 70%, then the probability that it is 0 is 30%).
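A minimal sketch of the sigmoid g(z) = 1/(1 + e^(−z)) and of reading its output as a probability, using only Python's standard library; the sample z values are arbitrary.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function g(z) = 1 / (1 + e^(-z)); output is in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


for z in (-10.0, 0.0, 10.0):
    print(z, sigmoid(z))
# sigmoid(-10) ≈ 4.54e-05 (near 0), sigmoid(0) = 0.5, sigmoid(10) ≈ 0.99995 (near 1)

# Reading h_theta(x) as P(y = 1 | x): if h = 0.7, then P(y = 0 | x) = 1 - 0.7.
h = 0.7
p_one = h
p_zero = 1.0 - h
print(p_one, round(p_zero, 2))  # 0.7 0.3
```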

- 47. Logistic Regression → Given an input feature vector X, perhaps corresponding to an image that we want to recognize as either a cat picture or not a cat picture, we want an algorithm that can output a prediction ŷ, which is our estimate of the probability that y = 1. → X is an n-dimensional vector, and the parameters of logistic regression are W and b. → So, given an input X and the parameters W and b, how do we generate the output ŷ? → We'll usually keep the parameters W and b separate.
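The question on the slide is usually answered with ŷ = σ(Wᵀx + b): a linear combination of the features followed by the sigmoid. The sketch below assumes plain Python lists for W and x, and the three-feature values are hypothetical (a real cat image would be unrolled into a much longer vector).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Logistic regression output y_hat = sigmoid(w^T x + b) for an n-dimensional x."""
    if len(w) != len(x):
        raise ValueError("w and x must have the same dimension n")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b  # linear part: w^T x + b
    return sigmoid(z)                              # squash into (0, 1)


# Hypothetical 3-feature example; b is kept as a separate scalar parameter.
w = [0.5, -1.0, 2.0]
b = 0.1
x = [1.0, 2.0, 0.5]

y_hat = predict(w, b, x)
print(y_hat)  # z = -0.4, so y_hat ≈ 0.401: the estimated P(y = 1 | x)
```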

- 50. Logistic Regression Cost Function → What loss (error) function can we use to measure how well our algorithm is doing? → The cost function measures how well the parameters W and b are doing on the training set. → To train the parameters W and b of logistic regression, we need to define a cost function. → The loss function is defined with respect to a single training example. → The cost function measures how well you are doing on the entire training set.
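The slide does not give the formula, so this sketch assumes the binary cross-entropy loss commonly used for logistic regression, L(ŷ, y) = −(y log ŷ + (1 − y) log(1 − ŷ)), with the cost J(W, b) taken as the average loss over the m training examples. The predictions and labels are made up.

```python
import math


def loss(y_hat, y):
    """Binary cross-entropy loss for a single training example (assumed choice of loss)."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


def cost(y_hats, ys):
    """Cost J(W, b): the average of the per-example losses over the training set."""
    m = len(ys)
    return sum(loss(yh, y) for yh, y in zip(y_hats, ys)) / m


# Confident and correct predictions give a small loss; confident and wrong ones a large loss.
print(loss(0.9, 1))  # ≈ 0.105
print(loss(0.1, 1))  # ≈ 2.303
print(cost([0.9, 0.2, 0.8], [1, 0, 1]))  # ≈ 0.184
```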

- 53. Gradient Descent → How can we use the gradient descent algorithm to train (learn) the parameters W and b on the training set? → Find the W and b that make the cost function as small as possible. → The height of the surface represents the value of J(W, b) at a given point. → We want to find the values of W and b that correspond to the minimum of the cost function J. → Initialize W and b to some values (denoted by the little red dot on the surface). Initializing to zero works; random initialization also works.
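A minimal sketch of batch gradient descent for logistic regression, assuming the binary cross-entropy loss (for which the derivative of the loss with respect to z works out to a − y). W and b start at zero, as the slide allows; `alpha`, the iteration count and the one-feature data set are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, Y, alpha=0.5, iterations=1000):
    """Batch gradient descent on the cross-entropy cost J(w, b) of logistic regression."""
    m, n = len(X), len(X[0])
    w, b = [0.0] * n, 0.0  # initialize w and b to zero
    for _ in range(iterations):
        dw, db = [0.0] * n, 0.0
        for x, y in zip(X, Y):
            a = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
            dz = a - y  # dL/dz for sigmoid + cross-entropy
            for j in range(n):
                dw[j] += x[j] * dz
            db += dz
        # Average the gradients over the training set, then take one step downhill.
        w = [wj - alpha * dwj / m for wj, dwj in zip(w, dw)]
        b -= alpha * db / m
    return w, b


# Toy 1-feature data: small x -> class 0, large x -> class 1 (made up).
X = [[0.0], [1.0], [2.0], [3.0]]
Y = [0, 0, 1, 1]

w, b = train_logistic_regression(X, Y)
preds = [sigmoid(w[0] * x[0] + b) for x in X]
print([round(p, 2) for p in preds])  # first two below 0.5, last two above 0.5
```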

- 57. Shallow Neural Network → In logistic regression, we had the computation of z followed by the computation of a (the activation). → In a neural network, we just do this multiple times: z followed by a, layer after layer. → Finally, we compute the loss at the end. → In logistic regression, we have a backward calculation in order to compute derivatives. → In a neural network, we likewise end up doing a backward calculation.

- 59. → The circle in the logistic regression diagram really represents two steps of computation: → first, we compute z = Wᵀx + b; → second, we compute the activation a as the sigmoid of z, a = σ(z). → A neural network does this many times.
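The "z, then activation, repeated per layer, then loss, then backward pass" structure from the two slides above can be sketched as a network with one hidden layer. This is a minimal illustration, not the course's implementation: it assumes sigmoid activations in both layers, a cross-entropy loss, hypothetical layer sizes (2 inputs, 3 hidden units, 1 output) and small random initial weights.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, w2, b2):
    """Each hidden unit does 'z then activation', and so does the output unit."""
    z1 = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W1, b1)]
    a1 = [sigmoid(z) for z in z1]                 # layer 1: z followed by a
    z2 = sum(wk * ak for wk, ak in zip(w2, a1)) + b2
    a2 = sigmoid(z2)                              # layer 2 (output): z followed by a
    return a1, a2


def loss(a2, y):
    """Cross-entropy loss computed at the end of the forward pass (assumed choice)."""
    return -(y * math.log(a2) + (1 - y) * math.log(1 - a2))


def backward(x, y, W1, b1, w2, b2):
    """Backward pass: derivatives of the loss with respect to every parameter."""
    a1, a2 = forward(x, W1, b1, w2, b2)
    dz2 = a2 - y                                  # same form as in logistic regression
    dw2 = [dz2 * ak for ak in a1]
    db2 = dz2
    # Propagate back through the output weights and the hidden sigmoid (g' = a(1 - a)).
    dz1 = [dz2 * wk * ak * (1 - ak) for wk, ak in zip(w2, a1)]
    dW1 = [[dzi * xj for xj in x] for dzi in dz1]
    db1 = dz1
    return dW1, db1, dw2, db2


# Hypothetical sizes: 2 inputs, 3 hidden units, 1 output.
random.seed(0)
W1 = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(3)]
b1 = [0.0, 0.0, 0.0]
w2 = [random.uniform(-0.5, 0.5) for _ in range(3)]
b2 = 0.0
x, y = [1.0, -2.0], 1

_, a2 = forward(x, W1, b1, w2, b2)
print("prediction:", a2, "loss:", loss(a2, y))
dW1, db1, dw2, db2 = backward(x, y, W1, b1, w2, b2)
```

A quick way to check a hand-written backward pass like this is to compare each derivative against a finite-difference estimate of the loss.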

- 65. Activation Function - When you build your neural network, one of the choices you get to make is which activation function to use in the hidden layers, as well as in the output unit of your neural network. - If you let g(z) = tanh(z), this almost always works better than the sigmoid function: because its values lie between -1 and +1, the activations that come out of the hidden layer are close to having zero mean. - The mean of the data is close to 0 rather than 0.5 - This actually makes learning for the next layer a little bit easier.
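A small numerical illustration of the zero-mean argument, with hypothetical pre-activation values chosen symmetric around 0: averaging the sigmoid outputs gives about 0.5, while averaging the tanh outputs gives about 0.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical pre-activations z, symmetric around 0 for illustration.
zs = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]

mean_sigmoid = sum(sigmoid(z) for z in zs) / len(zs)
mean_tanh = sum(math.tanh(z) for z in zs) / len(zs)

print(mean_sigmoid)  # close to 0.5: sigmoid outputs lie in (0, 1), centred on 0.5
print(mean_tanh)     # close to 0: tanh outputs lie in (-1, 1), centred on 0
```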

- 66. One of the downsides of both the sigmoid and the tanh functions is that if z is very large or very small, the gradient (the derivative, or slope) of the function becomes very small, which can slow down gradient descent.
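The shrinking slope can be seen directly from the derivatives, g′(z) = g(z)(1 − g(z)) for the sigmoid and g′(z) = 1 − tanh²(z) for tanh. The sketch below prints both for a few arbitrary values of z; both fall toward 0 as |z| grows.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # g'(z) = g(z) * (1 - g(z)), at most 0.25 (at z = 0)


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # g'(z) = 1 - tanh(z)^2, at most 1 (at z = 0)


for z in (0.0, 2.0, 5.0, 10.0):
    print(f"z={z:5.1f}  sigmoid'={sigmoid_grad(z):.2e}  tanh'={tanh_grad(z):.2e}")
```

Because both derivatives are symmetric in z, the same flattening happens for very negative z.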