EuroPython 2015 - Big Data with Python and Hadoop

- 1. 1 / 38 Big Data with Python and Hadoop Max Tepkeev 24 July 2015 Bilbao, Spain

- 2. 2 / 38 Agenda • Introduction • Big Data • Apache Hadoop & Ecosystem • HDFS / Snakebite / Hue • MapReduce • Python Streaming / MRJob / Luigi / Pydoop • Pig / UDFs / Embedded Pig • Benchmarks • Conclusions

- 3. 3 / 38 About me Max Tepkeev Russia, Moscow • python-redmine • architect • instructions https://www.github.com/maxtepkeev

- 4. 4 / 38 About us Aidata: online and offline user data collection and analysis • 70,000,000 users per day • http://www.aidata.me

- 5. 5 / 38 About us

- 6. 6 / 38 Big Data Big data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it... (Dan Ariely)

- 7. 7 / 38 Apache Hadoop • Batch processing • Linear scalability • Commodity hardware • Built-in fault tolerance (expects hardware to fail) • Transparent parallelism

- 8. 8 / 38 Hadoop Ecosystem • HDFS • Pig • Hive • HBase • Accumulo • Storm • Spark • MapReduce • Mahout • ZooKeeper • Flume • Avro • Kafka • Oozie

- 9. 9 / 38 HDFS • Just stores files in folders • Chunks files into blocks (64 MB by default in Hadoop 1, 128 MB in Hadoop 2) • Replicates each block (3 copies by default) • Create/Read/Delete (no in-place Update)

```shell
$ hadoop fs -cat hdfs:///foo
$ hadoop fs -tail hdfs:///bar
```
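The block and replication rules above reduce to simple arithmetic. The sketch below is a hypothetical helper (not part of any Hadoop API) that assumes a 128 MB block size (pass 64 MB for Hadoop 1) and a replication factor of 3, and reports how a file would be chunked and how much raw storage it would consume.

```python
MB = 1024 * 1024


def hdfs_block_layout(file_size, block_size=128 * MB, replication=3):
    """Return (block count, last block size, raw bytes stored across the cluster).

    The file is cut into fixed-size blocks; the final block only holds the
    remainder (HDFS does not pad it), and each block is stored `replication`
    times on different DataNodes.
    """
    if file_size == 0:
        return 0, 0, 0
    n_blocks = -(-file_size // block_size)  # ceiling division
    last_block = file_size - (n_blocks - 1) * block_size
    return n_blocks, last_block, file_size * replication


# A hypothetical 300 MB file, with the Hadoop 2 and Hadoop 1 default block sizes.
print(hdfs_block_layout(300 * MB))
print(hdfs_block_layout(300 * MB, block_size=64 * MB))
```

Either way the file costs three times its size in raw disk; the block size only changes how many blocks (and therefore, typically, how many map tasks) it is split into.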

- 10. 10 / 38 Snakebite + Pure Python + Library and CLI + Communicates via Hadoop RPC - No write operations (yet)

```shell
$ snakebite cat hdfs:///foo
$ snakebite tail hdfs:///bar
```

- 11. 11 / 38 Snakebite

```python
>>> from snakebite.client import Client
>>> client = Client('localhost', 8020)
>>> for x in client.ls(['/']):
...     print(x)
```

- 12. 12 / 38 Hue

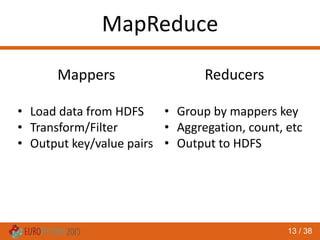

- 13. 13 / 38 MapReduce Mappers • Load data from HDFS • Transform/filter • Output key/value pairs. Reducers • Receive the mapper output grouped by key • Aggregate (count, sum, etc.) • Write output to HDFS

- 14. 14 / 38 MapReduce (word count data flow) • Input: Python is cool / Hadoop is cool / Java is bad • Split: "Python is cool" | "Hadoop is cool" | "Java is bad" • Map: (Python, 1) (is, 1) (cool, 1) | (Hadoop, 1) (is, 1) (cool, 1) | (Java, 1) (is, 1) (bad, 1) • Reduce (values grouped by key): Python [1], Hadoop [1], Java [1], is [1, 1, 1], cool [1, 1], bad [1] • Result: Python 1, Hadoop 1, Java 1, is 3, cool 2, bad 1
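The data flow above can be replayed in a single process. This is a minimal in-memory sketch, not how Hadoop executes a job (no distribution, and keys are grouped in a dict rather than sorted), but the three phase functions correspond one-to-one to the Map, grouping and Reduce columns of the slide.

```python
from collections import defaultdict


def map_phase(splits):
    """Mapper: emit a (word, 1) pair for every word in every split."""
    for split in splits:
        for word in split.split():
            yield word, 1


def shuffle_phase(pairs):
    """Group values by key, which the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: aggregate each key's list of values into a total."""
    return {key: sum(values) for key, values in groups.items()}


splits = ["Python is cool", "Hadoop is cool", "Java is bad"]
grouped = shuffle_phase(map_phase(splits))
result = reduce_phase(grouped)
print(dict(grouped))
print(result)
```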

- 15. 15 / 38 Java MapReduce

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.net.URI;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Counter;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;
import org.apache.hadoop.util.StringUtils;

public class WordCount {

  public static class TokenizerMapper
      extends Mapper<Object, Text, Text, IntWritable> {

    static enum CountersEnum { INPUT_WORDS }

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    private boolean caseSensitive;
    private Set<String> patternsToSkip = new HashSet<String>();

    private Configuration conf;

    @Override
    public void setup(Context context) throws IOException,
        InterruptedException {
      conf = context.getConfiguration();
      caseSensitive = conf.getBoolean("wordcount.case.sensitive", true);
      // Default to false: skip-pattern files are only read when -skip was given.
      if (conf.getBoolean("wordcount.skip.patterns", false)) {
        URI[] patternsURIs = Job.getInstance(conf).getCacheFiles();
        for (URI patternsURI : patternsURIs) {
          Path patternsPath = new Path(patternsURI.getPath());
          String patternsFileName = patternsPath.getName().toString();
          parseSkipFile(patternsFileName);
        }
      }
    }

    // Each line of the cached file is a regex that is stripped from the input.
    private void parseSkipFile(String fileName) {
      // try-with-resources (Java 7+) closes the reader even on failure.
      try (BufferedReader fis = new BufferedReader(new FileReader(fileName))) {
        String pattern = null;
        while ((pattern = fis.readLine()) != null) {
          patternsToSkip.add(pattern);
        }
      } catch (IOException ioe) {
        System.err.println("Caught exception while parsing the cached file '"
            + fileName + "' : " + StringUtils.stringifyException(ioe));
      }
    }

    @Override
    public void map(Object key, Text value, Context context
                    ) throws IOException, InterruptedException {
      String line = (caseSensitive) ?
          value.toString() : value.toString().toLowerCase();
      for (String pattern : patternsToSkip) {
        line = line.replaceAll(pattern, "");
      }
      // Emit (word, 1) for every token and count the words seen.
      StringTokenizer itr = new StringTokenizer(line);
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        context.write(word, one);
        Counter counter = context.getCounter(CountersEnum.class.getName(),
            CountersEnum.INPUT_WORDS.toString());
        counter.increment(1);
      }
    }
  }

  public static class IntSumReducer
      extends Reducer<Text, IntWritable, Text, IntWritable> {
    private IntWritable result = new IntWritable();

    public void reduce(Text key, Iterable<IntWritable> values,
                       Context context
                       ) throws IOException, InterruptedException {
      int sum = 0;
      for (IntWritable val : values) {
        sum += val.get();
      }
      result.set(sum);
      context.write(key, result);
    }
  }

  public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    GenericOptionsParser optionParser = new GenericOptionsParser(conf, args);
    String[] remainingArgs = optionParser.getRemainingArgs();
    // Expect either "<in> <out>" or "<in> <out> -skip <file>".
    if ((remainingArgs.length != 2) && (remainingArgs.length != 4)) {
      System.err.println("Usage: wordcount <in> <out> [-skip skipPatternFile]");
      System.exit(2);
    }
    Job job = Job.getInstance(conf, "word count");
    job.setJarByClass(WordCount.class);
    job.setMapperClass(TokenizerMapper.class);
    job.setCombinerClass(IntSumReducer.class);
    job.setReducerClass(IntSumReducer.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);

    List<String> otherArgs = new ArrayList<String>();
    for (int i = 0; i < remainingArgs.length; ++i) {
      if ("-skip".equals(remainingArgs[i])) {
        job.addCacheFile(new Path(remainingArgs[++i]).toUri());
        job.getConfiguration().setBoolean("wordcount.skip.patterns", true);
      } else {
        otherArgs.add(remainingArgs[i]);
      }
    }
    FileInputFormat.addInputPath(job, new Path(otherArgs.get(0)));
    FileOutputFormat.setOutputPath(job, new Path(otherArgs.get(1)));

    System.exit(job.waitForCompletion(true) ? 0 : 1);
  }
}
```
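The Java job registers `IntSumReducer` as a combiner as well as a reducer. The sketch below, in Python for consistency with the rest of the talk, shows why that helps: each map task pre-sums its own pairs, so fewer records are shuffled, and because addition is associative and commutative the final counts are unchanged (which matters, since Hadoop may run a combiner zero or more times). The input lines are hypothetical, chosen so that words repeat within a line.

```python
from collections import Counter


def mapper(line):
    """Emit (word, 1) for every whitespace-separated word in one input line."""
    return [(word, 1) for word in line.split()]


def sum_counts(pairs):
    """Sum counts per word; serves as both the combiner and the reducer."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    return totals


# Hypothetical input: each line stands for the input of a separate map task.
lines = ["to be or not to be", "to do is to be"]
map_outputs = [mapper(line) for line in lines]

# Without a combiner every (word, 1) pair is sent to the reducers.
shuffled_plain = [pair for output in map_outputs for pair in output]
# With a combiner each map task first collapses its own pairs per word.
shuffled_combined = [pair for output in map_outputs
                     for pair in sum_counts(output).items()]

print(len(shuffled_plain), "records shuffled without a combiner")
print(len(shuffled_combined), "records shuffled with a combiner")
# The reducer's final counts are identical either way.
assert sum_counts(shuffled_plain) == sum_counts(shuffled_combined)
```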

- 16. 16 / 38 Python Streaming MapReduce

Mapper

```python
#!/usr/bin/env python
import sys

# Emit one "word<TAB>1" line per word; Streaming splits key and value on the first tab.
for line in sys.stdin:
    words = line.strip().split(' ')
    for word in words:
        print('%s\t%s' % (word, 1))
```

Reducer

```python
#!/usr/bin/env python
import sys
from itertools import groupby

# Hadoop sorts mapper output by key, so equal words arrive on adjacent lines.
key = lambda x: x[0]
data = (l.rstrip().split('\t', 1) for l in sys.stdin)
for word, counts in groupby(data, key):
    total = sum(int(c) for _, c in counts)
    print('%s\t%s' % (word, total))
```
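Because streaming scripts only read and write text lines, their logic can be checked without a cluster (a common shell equivalent is `cat input | ./mapper.py | sort | ./reducer.py`). The sketch below wraps the same mapper and reducer logic in generator functions and shows why the sort step between them is essential for `groupby`.

```python
from itertools import groupby


def mapper(lines):
    """Same logic as mapper.py: yield one 'word<TAB>1' text line per word."""
    for line in lines:
        for word in line.strip().split(' '):
            yield '%s\t%s' % (word, 1)


def reducer(lines):
    """Same logic as reducer.py: sum consecutive lines that share a key."""
    data = (line.rstrip().split('\t', 1) for line in lines)
    for word, counts in groupby(data, key=lambda kv: kv[0]):
        yield '%s\t%s' % (word, sum(int(c) for _, c in counts))


text = ["Python is cool", "Hadoop is cool", "Java is bad"]

# Hadoop sorts mapper output by key before the reduce phase; sorted() stands in for that.
sorted_result = list(reducer(sorted(mapper(text))))

# Skipping the sort breaks groupby: 'is' shows up in three separate runs.
unsorted_result = list(reducer(mapper(text)))

print('\n'.join(sorted_result))
```

The sorted run reproduces the HDFS output shown on slide 18, including its ordering (uppercase keys sort before lowercase ones).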

- 17. 17 / 38 Python Streaming MapReduce

```shell
$ hadoop jar /path/to/hadoop-streaming.jar \
    -mapper /path/to/mapper.py \
    -reducer /path/to/reducer.py \
    -file /path/to/mapper.py \
    -file /path/to/reducer.py \
    -input hdfs:///input \
    -output hdfs:///output
```

- 18. 18 / 38 Python Streaming MapReduce

```shell
$ hdfs dfs -cat hdfs:///output/*
Hadoop  1
Java    1
Python  1
bad     1
cool    2
is      3
```

- 19. 19 / 38 Python Frameworks Overview (figures as of the talk, July 2015) • Dumbo: last commit 2 years ago, 0 downloads per month on PyPI • Hadoopy: last commit 3 years ago, ~350 downloads per month on PyPI • Pydoop: last commit 2 days ago, ~3,100 downloads per month on PyPI • Luigi: last commit today, ~31,000 downloads per month on PyPI • MRJob: last commit 2 days ago, ~72,000 downloads per month on PyPI

- 20. 20 / 38 MRJob MapReduce

```python
from mrjob.job import MRJob


class MRWordCount(MRJob):

    def mapper(self, _, line):
        for word in line.strip().split(' '):
            yield word, 1

    def reducer(self, word, counts):
        yield word, sum(counts)


if __name__ == '__main__':
    MRWordCount.run()
```

- 21. 21 / 38 MRJob MapReduce

```python
from mrjob import job, protocol


class MRWordCount(job.MRJob):
    # UltraJSONProtocol needs the ujson package installed.
    INTERNAL_PROTOCOL = protocol.UltraJSONProtocol
    OUTPUT_PROTOCOL = protocol.UltraJSONProtocol

    def mapper(self, _, line):
        for word in line.strip().split(' '):
            yield word, 1

    def reducer(self, word, counts):
        yield word, sum(counts)


if __name__ == '__main__':
    MRWordCount.run()
```

- 22. 22 / 38 MRJob MapReduce

```python
from mrjob import job, protocol


class MRWordCount(job.MRJob):
    # RawProtocol writes keys and values as-is, so they must already be strings.
    INTERNAL_PROTOCOL = protocol.RawProtocol
    OUTPUT_PROTOCOL = protocol.RawProtocol

    def mapper(self, _, line):
        for word in line.strip().split(' '):
            yield word, '1'

    def reducer(self, word, counts):
        yield word, str(sum(int(c) for c in counts))


if __name__ == '__main__':
    MRWordCount.run()
```
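The three MRJob variants differ only in how each intermediate record is encoded. mrjob's JSON-based protocols write a JSON-encoded key and value separated by a tab (UltraJSONProtocol does the same with the ujson library), while RawProtocol joins plain strings with a tab. The helpers below are hypothetical, standard-library-only stand-ins for those line formats, not mrjob's code; they show why the raw variant needs `int()`/`str()` in the reducer and skips encoding work on every record.

```python
import json
import timeit


def json_write(key, value):
    """JSON-style line: key and value are each JSON-encoded, joined by a tab."""
    return '%s\t%s' % (json.dumps(key), json.dumps(value))


def json_read(line):
    raw_key, raw_value = line.split('\t', 1)
    return json.loads(raw_key), json.loads(raw_value)


def raw_write(key, value):
    """Raw line: key and value must already be strings and are joined as-is."""
    return '%s\t%s' % (key, value)


def raw_read(line):
    key, value = line.split('\t', 1)
    return key, value


# JSON keeps the integer type; the raw format hands back strings.
print(json_read(json_write('Python', 1)))
print(raw_read(raw_write('Python', '1')))

# Rough round-trip cost for 10,000 records on this machine (numbers will vary).
print(timeit.timeit(lambda: json_read(json_write('Python', 1)), number=10000))
print(timeit.timeit(lambda: raw_read(raw_write('Python', '1')), number=10000))
```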

- 23. 23 / 38 MRJob Pros/Cons + Superb documentation + Superb integration with Amazon's EMR + Active development + Biggest community + Local testing without Hadoop + Automatically uploads itself to the cluster + Supports multi-step jobs - Slow serialization/deserialization

- 24. 24 / 38 Luigi MapReduce

```python
import luigi
import luigi.contrib.hadoop
import luigi.contrib.hdfs


class InputText(luigi.ExternalTask):
    def output(self):
        return luigi.contrib.hdfs.HdfsTarget('/input')


class WordCount(luigi.contrib.hadoop.JobTask):
    def requires(self):
        return [InputText()]

    def output(self):
        return luigi.contrib.hdfs.HdfsTarget('/output')

    def mapper(self, line):
        for word in line.strip().split(' '):
            yield word, 1

    def reducer(self, word, counts):
        yield word, sum(counts)


if __name__ == '__main__':
    luigi.run()
```
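What makes Luigi a workflow tool rather than just a MapReduce wrapper is the `requires()`/`output()` pair: by default a task counts as complete once its outputs exist, and only incomplete tasks are run, after their requirements. The toy scheduler below illustrates that idea with a set of paths standing in for HDFS; `Task`, `build` and `storage` are hypothetical names, and this is not Luigi's implementation (no central scheduler, workers or deduplication).

```python
# Pretend file system: the set of paths that already exist.
storage = {'/input'}


class Task(object):
    """Hypothetical base class: a task is complete once its output exists."""

    def requires(self):
        return []

    def output(self):
        raise NotImplementedError

    def complete(self):
        return self.output() in storage

    def run(self):
        raise NotImplementedError


def build(task, ran=None):
    """Run the task after its requirements, skipping anything already complete."""
    if ran is None:
        ran = []
    if task.complete():
        return ran
    for dependency in task.requires():
        build(dependency, ran)
    task.run()
    ran.append(type(task).__name__)
    return ran


class InputText(Task):
    def output(self):
        return '/input'

    def run(self):
        # Like an external task: it can only be checked for, never produced.
        raise RuntimeError('missing external input: /input')


class WordCount(Task):
    def requires(self):
        return [InputText()]

    def output(self):
        return '/output'

    def run(self):
        storage.add('/output')  # stands in for running the Hadoop job


first_run = build(WordCount())   # WordCount runs; InputText is already complete
second_run = build(WordCount())  # nothing to do: /output now exists
```

Re-running a pipeline is therefore cheap and idempotent: finished steps are detected by their outputs and skipped.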

- 25. 25 / 38 Luigi MapReduce

```python
import luigi
import luigi.contrib.hadoop
import luigi.contrib.hdfs


class InputText(luigi.ExternalTask):
    def output(self):
        return luigi.contrib.hdfs.HdfsTarget('/input')


class WordCount(luigi.contrib.hadoop.JobTask):
    # Pass intermediate key/value pairs between mapper and reducer as JSON.
    data_interchange_format = 'json'

    def requires(self):
        return [InputText()]

    def output(self):
        return luigi.contrib.hdfs.HdfsTarget('/output')

    def mapper(self, line):
        for word in line.strip().split(' '):
            yield word, 1

    def reducer(self, word, counts):
        # JSON decoding yields unicode keys; encode them back to UTF-8 (Python 2).
        yield word.encode('utf-8'), str(sum(counts))


if __name__ == '__main__':
    luigi.run()
```

- 26. 26 / 38 Luigi Pros/Cons + The only real workflow framework + Central scheduler with visualization + Task history + Active development + Big community + Automatically uploads itself to the cluster + Integration with Snakebite - Weak local testing - Really slow serialization/deserialization

- 27. 27 / 38 Pydoop MapReduce

```python
import pydoop.mapreduce.api as api
import pydoop.mapreduce.pipes as pp


class Mapper(api.Mapper):
    def map(self, context):
        for word in context.value.split(' '):
            context.emit(word, 1)


class Reducer(api.Reducer):
    def reduce(self, context):
        context.emit(context.key, sum(context.values))


# Entry point that pydoop submit calls by default.
def __main__():
    pp.run_task(pp.Factory(Mapper, Reducer))
```
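Pydoop hands every method a context object: the mapper reads `context.value`, the reducer reads `context.key` and `context.values`, and both write through `context.emit`. Since the job logic only touches those attributes, it can be unit-tested locally with a stand-in context. `FakeContext` below is a hypothetical test double, not part of Pydoop, and the classes drop the `api.*` base classes so the sketch runs without Pydoop installed.

```python
class FakeContext(object):
    """Hypothetical test double exposing only the attributes the job code uses."""

    def __init__(self, key=None, value=None, values=()):
        self.key = key
        self.value = value
        self.values = values
        self.emitted = []

    def emit(self, key, value):
        self.emitted.append((key, value))


# Same method bodies as the Pydoop classes above, minus the api.* base classes.
class Mapper(object):
    def map(self, context):
        for word in context.value.split(' '):
            context.emit(word, 1)


class Reducer(object):
    def reduce(self, context):
        context.emit(context.key, sum(context.values))


map_context = FakeContext(value='Python is cool')
Mapper().map(map_context)

reduce_context = FakeContext(key='is', values=[1, 1, 1])
Reducer().reduce(reduce_context)

print(map_context.emitted)
print(reduce_context.emitted)
```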

- 28. 28 / 38 Pydoop Pros/Cons + Good documentation + Amazingly fast + Active development + HDFS API based on libhdfs + Record readers/writers can be implemented in Python + Partitioners can be implemented in Python - Difficult to install - Small community - Doesn't upload itself to the cluster

- 29. 29 / 38 Pig MapReduce

```pig
text = LOAD '/input' AS (line:chararray);
words = FOREACH text GENERATE FLATTEN(TOKENIZE(TRIM(line))) AS word;
groups = GROUP words BY word;
result = FOREACH groups GENERATE group, COUNT(words);
STORE result INTO '/output';
```

- 30. 30 / 38 Pig UDFs

Pig

```pig
REGISTER 'geoip.py' USING jython AS geoip;
ips = LOAD '/input' AS (ip:chararray);
geo = FOREACH ips GENERATE ip, geoip.getLocationByIP(ip) AS location;
```

Jython (geoip.py)

```python
from java.io import File
from java.net import InetAddress
from com.maxmind.geoip2 import DatabaseReader
from com.maxmind.geoip2.exception import AddressNotFoundException

# Open the GeoLite2 database once at module load, not once per record.
reader = DatabaseReader.Builder(File('GeoLite2-City.mmdb')).build()


@outputSchema('location:chararray')
def getLocationByIP(ip):
    try:
        data = reader.city(InetAddress.getByName(ip))
        return '%s:%s' % (
            data.getCountry().getIsoCode() or '-',
            data.getCity().getGeoNameId() or '-'
        )
    except AddressNotFoundException:
        return '-:-'
```
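The UDF's fallback rules (`-` for a missing country or city, `-:-` for an unknown address) can be exercised in plain CPython by replacing the Java pieces with stand-ins. In the sketch below, the `outputSchema` decorator, `AddressNotFound` exception, lookup table and GeoNames ID are all hypothetical substitutes for what Pig and the MaxMind library provide; the IPs come from the documentation-only ranges.

```python
def outputSchema(schema):
    """No-op stand-in for the decorator Pig makes available to Jython UDFs."""
    def decorate(func):
        func.output_schema = schema
        return func
    return decorate


class AddressNotFound(Exception):
    """Stands in for MaxMind's AddressNotFoundException."""


# Made-up lookup table standing in for GeoLite2-City.mmdb.
FAKE_GEO_DB = {
    '192.0.2.1': ('ES', 123456),
    '192.0.2.2': ('ES', None),  # country known, city unknown
}


def lookup(ip):
    try:
        return FAKE_GEO_DB[ip]
    except KeyError:
        raise AddressNotFound(ip)


@outputSchema('location:chararray')
def getLocationByIP(ip):
    """Same formatting rules as the UDF above: 'ISO:geonameid' with '-' fallbacks."""
    try:
        iso_code, geoname_id = lookup(ip)
        return '%s:%s' % (iso_code or '-', geoname_id or '-')
    except AddressNotFound:
        return '-:-'


for ip in ['192.0.2.1', '192.0.2.2', '198.51.100.7']:
    print(ip, getLocationByIP(ip))
```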

- 31. 31 / 38 Embedded Pig

```python
#!/usr/bin/jython
# Run with: pig script.py
from org.apache.pig.scripting import *


@outputSchema('greeting:chararray')
def hello(name):
    return 'Hello, %s' % (name)


if __name__ == '__main__':
    P = Pig.compile("""a = LOAD '/names' as (name:chararray);
                       b = FOREACH a GENERATE hello(name);
                       STORE b INTO '/output';""")
    result = P.bind().runSingle()
```

- 32. 32 / 38 Benchmarking Cluster & Software • 8 × AWS c3.4xlarge instances, each with 16 vCPUs, 30 GB RAM and 2 × 160 GB SSD • Ubuntu 12.04.5 • CDH5 (Hadoop 2.5.0) • 268 map tasks • 98 reduce tasks • Java 1.7.0_67 • Pig 0.12.0 • CPython 2.7.3 • PyPy 2.6.0 • Jython 2.7.0 • Pydoop 1.0.0 • MRJob 0.5.0-dev • Luigi 1.3.0 • Job: WordCount on Mark Lutz's "Learning Python, 5th Edition" concatenated 10,000 times (35 GB)
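The cluster figures above add up as follows. The sketch assumes "GB" means 2^30 bytes, which the slide does not state. The result, roughly 134 MiB of input per map task, is in the same range as one 128 MB HDFS block, which would be consistent with about one map task per block, though the slide does not give the block size used.

```python
# Cluster figures from the slide above.
NODES = 8
VCPUS_PER_NODE = 16
RAM_GB_PER_NODE = 30
SSD_GB_PER_NODE = 2 * 160

INPUT_GB = 35
MAP_TASKS = 268

total_vcpus = NODES * VCPUS_PER_NODE
total_ram_gb = NODES * RAM_GB_PER_NODE
total_ssd_gb = NODES * SSD_GB_PER_NODE

# Assumption: "GB" means 2**30 bytes, so 1 GB = 1024 MiB.
input_per_map_mib = INPUT_GB * 1024.0 / MAP_TASKS

print('vCPUs:', total_vcpus, 'RAM (GB):', total_ram_gb, 'SSD (GB):', total_ssd_gb)
print('Average input per map task: %.1f MiB' % input_per_map_mib)
```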

- 34. 34 / 38 Conclusions For complex workflow organization, job chaining and HDFS manipulation, use... Luigi + Snakebite

- 35. 35 / 38 Conclusions For writing lightning-fast MapReduce jobs, if you aren't afraid of some difficulties at the beginning, use... Pydoop + Pig

- 36. 36 / 38 Conclusions For development, local testing or perfect integration with Amazon's EMR, use... MRJob

- 37. 37 / 38 Conclusions (image taken from http://blog.mortardata.com/post/62334142398/hadoop-python-pig-trunk)

- 38. 38 / 38 Questions • slides: http://slideshare.net/maxtepkeev • code: https://github.com/maxtepkeev/talks • github: https://github.com/maxtepkeev • skype: max.tepkeev • company: http://www.aidata.me





