Fluentd meetup dive into fluent plugin (outdated)

- 1. Dive into Fluent Plugin (2012-02-04)

- 2. About me. Site: repeatedly.github.com. Company: Preferred Infrastructure, Inc. Favorite plugins: input `tail`, buffer `memory`, output `mongo`.

- 3. What's Fluentd? The missing log collector. See the keynote.

- 4. Fluentd is a buffer, router, collector, converter, aggregator, etc...

- 5. ... but Fluentd doesn't have these features built in.

- 6. Instead, Fluentd has a flexible plugin architecture consisting of Input, Output and Buffer plugins.

- 7. We can customize Fluentd using plugins :)

- 8. Agenda
  - Yes, I will talk about:
    - an example of Fluentd plugins
    - Fluentd and its libraries
    - how to develop a Fluentd plugin
  - No, I won't talk about:
    - the details of each plugin
    - experience in production

- 9. Example based on bit.ly/fluentd-with-mongo

- 10. Install. Plugin names follow the pattern `fluent-plugin-xxx`, and the `fluent-gem` command is included in the Fluentd gem.

- 11. Let's type! `$ fluent-gem install fluent-plugin-mongo`

- 12. Me! Many Many Many Plugins!

- 13. fluentd.conf, with an Input section and an Output section:

      <source>
        type tail
        format apache
        path /path/to/log
        tag mongo.apache
      </source>

      <match mongo.**>
        type mongo
        database apache
        collection access
        host otherhost
      </match>

- 14. Start!

      $ fluentd -c fluentd.conf
      2012-02-04 00:00:14 +0900: starting fluentd-0.10.8
      2012-02-04 00:00:14 +0900: reading config file path="fluentd.conf"
      2012-02-04 00:00:14 +0900: adding source type="tail"
      2012-02-04 00:00:14 +0900: adding match pattern="mongo.**" type="mongo"

- 15. Attack! `$ ab -n 100 -c 10 http://localhost/`

- 16.

      $ mongo --host otherhost
      > use apache
      > db.access.find()
      { "type": "127.0.0.1", "method": "GET", "path": "/", "code": "200", "size": "44", "time": ISODate("2011-11-27T07:56:27Z") ... }
      has more...

- 17. Data flow: Apache writes a log ("I'm a log!"), Fluentd's tail input reads it and buffers the events, and the mongo output inserts them into MongoDB.

- 18. Warming up

- 19. Fluentd stack: Input, Buffer and Output plugins on top; below them Ruby, MessagePack and Cool.io; then the OS.

- 20. Ruby ruby-lang.org

- 21. Fluentd and plugins are written in Ruby.

- 22. ... but note that Fluentd works on Ruby 1.9, goodbye 1.8!

- 23. MessagePack msgpack.org

- 24. Serialization: a JSON-like, fast and compact format. RPC: asynchronous and parallel for high performance. IDL: easy to integrate and maintain the service.

- 25. Binary format: header + body, variable length.

- 26. Note that the Ruby version can't handle a Time object.

- 27. So we use an Integer object instead of a Time.
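To make the Integer-time convention concrete, here is a small plain-Ruby sketch. It assumes an event is the `[tag, time, record]` triple passed to `Engine.emit` later in these slides; the helpers `to_event` and `event_time` are hypothetical, and no MessagePack call is made: it only shows the `Time#to_i` / `Time.at` round trip.

```ruby
# Hypothetical helpers illustrating "Integer instead of Time".
# An event is modeled as a [tag, time, record] array.

def to_event(tag, record, now = Time.now)
  # Store the time as Integer seconds since the epoch, which a
  # serializer without Time support can still encode.
  [tag, now.to_i, record]
end

def event_time(event)
  # Recover a Time object on the reading side when one is needed.
  Time.at(event[1]).utc
end

ev = to_event("app.tag", { "key" => "value" }, Time.utc(2012, 2, 4, 0, 0, 14))
puts ev[1]                                          # => 1328313614
puts event_time(ev).strftime("%Y-%m-%d %H:%M:%S")   # => 2012-02-04 00:00:14
```

Only whole seconds survive the round trip, which is the trade-off of using an Integer.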

- 28. Source: github.com/msgpack. Wiki: wiki.msgpack.org/display/MSGPACK. Mailing list: groups.google.com/group/msgpack

- 29. Cool.io coolio.github.com

- 30. An event-driven framework built on top of libev.

- 31. Cool.io has a Loop and Watchers, with Transport wrappers.

- 32. Fluentd has a default event loop. We can use `@default_loop` in a plugin.

- 33. Configuration

- 34. Fluentd loads plugins from $LOAD_PATH.

- 35. Plugin file locations:
  - Input: `$LOAD_PATH/fluent/plugin/in_<type>.rb`
  - Buffer: `$LOAD_PATH/fluent/plugin/buf_<type>.rb`
  - Output: `$LOAD_PATH/fluent/plugin/out_<type>.rb`

- 36. We use `register_input`, `register_buffer` and `register_output` to register a plugin.
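A toy registry in plain Ruby, sketching how `register_input` and the `in_<type>.rb` naming rule could fit together. `MiniPlugin`, `new_input` and `load_input` are hypothetical names, not Fluentd's `Fluent::Plugin` code; the same pattern would apply to buffers and outputs.

```ruby
# Toy plugin registry (hypothetical; not Fluentd's implementation).
module MiniPlugin
  @inputs = {}

  class << self
    def register_input(type, klass)
      @inputs[type] = klass
    end

    # Instantiate an input by type. If it is not registered yet, try to
    # require "fluent/plugin/in_<type>" from $LOAD_PATH; that file is
    # expected to call register_input as a side effect of loading.
    def new_input(type)
      load_input(type) unless @inputs.key?(type)
      @inputs.fetch(type) { raise ArgumentError, "unknown input plugin '#{type}'" }.new
    end

    private

    def load_input(type)
      require "fluent/plugin/in_#{type}"
    rescue LoadError
      nil # fall through to the "unknown input plugin" error
    end
  end
end

# A plugin registers itself when its class body is evaluated.
class TailInput
  MiniPlugin.register_input('tail', self)
end

p MiniPlugin.new_input('tail').class  # => TailInput
```

Registering from inside the class body means that simply loading the file is enough to make the type available.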

- 37. We can load the plugin configuration using `config_param` and the `configure` method. `config_param` sets the config value to `@<config name>` automatically.

- 38. Configuration and the matching plugin code:

      # fluentd.conf
      <source>
        type tail
        path /path/to/log
        ...
      </source>

      # in_tail.rb
      class TailInput < Input
        Plugin.register_input('tail', self)
        config_param :path, :string
        ...
      end

- 39. One trick: Fluentd's configuration module does not verify default values, so we can use nil as a third state, like a tribool :) In `config_param :tag, :string, :default => nil`, Fluentd does not check the type of the nil default.
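A minimal imitation of `config_param`, assuming the parsed configuration is a Hash of String values. `MiniConfigurable`, its converters and `SampleInput` are hypothetical and far simpler than Fluentd's real module, but they show the two behaviors from these slides: values land in `@<config name>`, and a `nil` default is stored without a type check.

```ruby
# Hypothetical, simplified config_param DSL.
module MiniConfigurable
  def self.included(base)
    base.extend(ClassMethods)
  end

  module ClassMethods
    def config_params
      @config_params ||= {}
    end

    def config_param(name, type, opts = {})
      config_params[name] = [type, opts]
    end
  end

  CONVERTERS = {
    string:  ->(v) { v.to_s },
    integer: ->(v) { Integer(v) },
    bool:    ->(v) { v == 'true' },
  }

  def configure(conf)
    self.class.config_params.each do |name, (type, opts)|
      if conf.key?(name.to_s)
        value = CONVERTERS.fetch(type).call(conf[name.to_s])
      elsif opts.key?(:default)
        # The default is assigned as-is with no type check,
        # so nil can act as a third "not set" state.
        value = opts[:default]
      else
        raise ArgumentError, "'#{name}' parameter is required"
      end
      instance_variable_set("@#{name}", value) # e.g. @path, @tag
    end
    self
  end
end

class SampleInput
  include MiniConfigurable
  config_param :path, :string
  config_param :tag,  :string, :default => nil
  attr_reader :path, :tag
end

input = SampleInput.new.configure('path' => '/path/to/log')
p input.path  # => "/path/to/log"
p input.tag   # => nil
```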

- 40. Fluentd provides some useful mixins for input and output plugins.

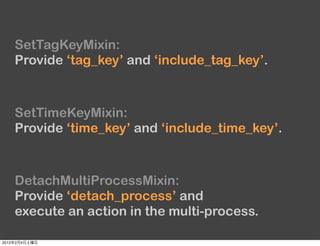

- 41. Mixins:
  - SetTagKeyMixin: provides `tag_key` and `include_tag_key`.
  - SetTimeKeyMixin: provides `time_key` and `include_time_key`.
  - DetachMultiProcessMixin: provides `detach_process` and executes an action in multiple processes.

- 42. Mixin usage. The `super` call flows from MongoOutput through SetTagKeyMixin to BufferedOutput:

      class MongoOutput < BufferedOutput
        ...
        include SetTagKeyMixin
        config_set_default :include_tag_key, false
        ...
      end
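The `super` flow on this slide is ordinary Ruby method lookup: `include` places the mixin between the class and its superclass. The sketch below uses stand-in classes that only borrow Fluentd's names (`config_set_default` is not modeled), and each `configure` records itself before calling `super` so the order is visible.

```ruby
# Stand-ins named after Fluentd's classes; not Fluentd's own code.
class BufferedOutput
  attr_reader :calls

  def configure(conf)
    (@calls ||= []) << :BufferedOutput
  end
end

module SetTagKeyMixin
  def configure(conf)
    (@calls ||= []) << :SetTagKeyMixin
    super                  # continues to BufferedOutput#configure
  end
end

class MongoOutput < BufferedOutput
  include SetTagKeyMixin   # inserted between MongoOutput and BufferedOutput

  def configure(conf)
    (@calls ||= []) << :MongoOutput
    super                  # continues to SetTagKeyMixin#configure
  end
end

out = MongoOutput.new
out.configure({})
p MongoOutput.ancestors.take(3)  # => [MongoOutput, SetTagKeyMixin, BufferedOutput]
p out.calls                      # => [:MongoOutput, :SetTagKeyMixin, :BufferedOutput]
```

Forgetting `super` in any of these methods would silently cut the chain, which is why each layer calls it.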

- 43. Input

- 44. Available plugins. Default: exec, forward, http, stream, syslog, tail, etc... 3rd party: mongo_tail, scribe, msgpack, dstat, zmq, amqp, etc...

- 45. Skeleton of an input plugin:

      class NewInput < Input
        ...
        def configure(conf)
          # parse a configuration manually
        end

        def start
          # invoke action
        end

        def shutdown
          # cleanup resources
        end
      end

- 46. In the action method, we use `Engine.emit` to input data. Sample:

      tag = "app.tag"
      time = Engine.now
      record = {"key" => "value", ...}
      Engine.emit(tag, time, record)

- 47. How do we read input efficiently? We use a thread and an event loop.

- 48. Thread

      class ForwardInput < Fluent::Input
        ...
        def start
          ...
          @thread = Thread.new(&method(:run))
        end

        def run
          ...
        end
      end

- 49. Event loop

      class ForwardInput < Fluent::Input
        ...
        def start
          @loop = Coolio::Loop.new
          @lsock = listen
          @loop.attach(@lsock)
          ...
        end
        ...
      end

- 50. Note that we must use `Engine.now` instead of `Time.now`.
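A sketch of the thread-based input pattern, using a stand-in engine. `FakeEngine` is an assumption: its `now` returns `Time.now.to_i`, which is our understanding of why `Engine.now` is preferred (it yields the Integer event time from the MessagePack slides), and its `emit` only collects events in a queue. `LineInput` reads from a `Queue` standing in for a log file and is hypothetical.

```ruby
# Stand-in for Fluentd's Engine (assumption, for illustration only).
module FakeEngine
  @events = Queue.new

  class << self
    attr_reader :events

    # Assumed to mirror Engine.now: Integer seconds, not a Time object.
    def now
      Time.now.to_i
    end

    def emit(tag, time, record)
      @events << [tag, time, record]
    end
  end
end

class LineInput
  def initialize(tag, source)
    @tag = tag
    @source = source # a Queue of lines, standing in for a log file
  end

  def start
    @thread = Thread.new(&method(:run))
  end

  def run
    # Queue#pop blocks until a line arrives and returns nil once the
    # queue is closed and drained.
    while (line = @source.pop)
      FakeEngine.emit(@tag, FakeEngine.now, { "message" => line })
    end
  end

  def shutdown
    @source.close # lets the loop in run finish
    @thread.join  # wait for the worker thread before returning
  end
end

source = Queue.new
input = LineInput.new("app.tag", source)
input.start
source << "hello"
source << "world"
input.shutdown
puts FakeEngine.events.size  # => 2
```

Closing the queue instead of killing the thread lets `shutdown` finish cleanly after all pending lines are emitted.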

- 51. Buffer

- 52. Available plugins. Default: memory, file. 3rd party: zfile (?)

- 53. In most cases, Memory and File are enough.

- 54. The memory type is the default. It's fast but can't resume data after a restart.

- 55. The file type is persistent: it can resume data from files.
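A toy contrast between the two buffer types. Both classes are hypothetical sketches, not Fluentd's `buf_memory` or `buf_file`: the memory buffer lives in a Ruby Array, so a fresh instance (think: restarted process) starts empty, while the file buffer appends to a file that a fresh instance can read back.

```ruby
require 'tmpdir'

# Hypothetical memory buffer: fast, but its contents die with the object.
class MemoryBuffer
  def initialize
    @data = []
  end

  def push(line)
    @data << line
  end

  def pending
    @data.dup
  end
end

# Hypothetical file buffer: each push is appended to a file on disk.
class FileBuffer
  def initialize(path)
    @path = path
  end

  def push(line)
    File.open(@path, 'a') { |f| f.puts(line) }
  end

  def pending
    File.exist?(@path) ? File.readlines(@path, chomp: true) : []
  end
end

Dir.mktmpdir do |dir|
  path = File.join(dir, 'buffer.log')
  FileBuffer.new(path).push('event 1')

  # Simulate a restart: fresh objects, same file path.
  p FileBuffer.new(path).pending  # => ["event 1"]
  p MemoryBuffer.new.pending      # => []
end
```

The price of persistence is a disk write per push, which is why the memory type is faster.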

- 56. Output

- 57. Available plugins. Default: copy, exec, file, forward, null, stdout, etc... 3rd party: mongo, s3, scribe, couch, hoop, splunk, etc...

- 58. Skeleton of a buffered output plugin:

      class NewOutput < BufferedOutput
        # configure, start and shutdown
        # are the same as in an input plugin

        def format(tag, time, record)
          # convert an event to a raw string
        end

        def write(chunk)
          # write a chunk to the target
          # a chunk holds multiple formatted events
        end
      end
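A plain-Ruby sketch of the `format` / `write` contract. `MiniBufferedOutput` is a hypothetical stand-in for `BufferedOutput`: `emit` appends the string returned by `format` to the current chunk, and `flush` passes the whole chunk to `write`. JSON lines are used as the raw format only for readability; the plugin decides what `format` returns.

```ruby
require 'json'

# Hypothetical stand-in for BufferedOutput's buffering behavior.
class MiniBufferedOutput
  def initialize
    @chunk = +""
  end

  def emit(tag, time, record)
    @chunk << format(tag, time, record)
  end

  def flush
    return if @chunk.empty?
    write(@chunk)
    @chunk = +""
  end
end

class JsonLinesOutput < MiniBufferedOutput
  attr_reader :written

  def initialize
    super
    @written = []
  end

  # Convert one event to a raw string: one JSON object per line.
  def format(tag, time, record)
    record.merge("tag" => tag, "time" => time).to_json + "\n"
  end

  # A chunk holds many formatted events; split it back into lines.
  def write(chunk)
    chunk.each_line { |line| @written << JSON.parse(line) }
  end
end

out = JsonLinesOutput.new
out.emit("mongo.apache", 1328313614, { "path" => "/" })
out.emit("mongo.apache", 1328313615, { "path" => "/about" })
out.flush
p out.written.size  # => 2
```

Because `write` receives many events at once, a target like MongoDB can take them in a single bulk insert.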

- 59. Output has 3 buffering modes: None, Buffered and Time sliced.

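- 60. Buffering types: Buffered and Time sliced. A buffer has an internal chunk map to manage chunks. The key is the tag in a Buffered buffer, but the time slice in a TimeSliced buffer. Incoming events are appended to a chunk up to the chunk limit; full chunks wait in the chunk queue, which also has a limit, and then go out to `write(chunk)`. In a TimeSliced buffer:

      def write(chunk)
        # chunk.key is the time slice
      end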
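A sketch of the chunk map described above. `ChunkMap`, `chunk_limit` and the hourly `'%Y%m%d%H'` slice are assumptions for illustration; the key function is the only difference between the Buffered (key = tag) and TimeSliced (key = time slice) cases. The queue limit is not modeled here.

```ruby
# Hypothetical chunk map: key -> current chunk, plus a queue of full chunks.
class ChunkMap
  Chunk = Struct.new(:key, :data)

  attr_reader :queue

  def initialize(chunk_limit:, &key_fn)
    @chunk_limit = chunk_limit # max bytes per chunk
    @key_fn = key_fn           # (tag, time) -> chunk key
    @map = {}
    @queue = []
  end

  def emit(tag, time, data)
    key = @key_fn.call(tag, time)
    chunk = (@map[key] ||= Chunk.new(key, +""))
    if !chunk.data.empty? && chunk.data.bytesize + data.bytesize > @chunk_limit
      @queue << @map.delete(key)               # full chunk waits for write
      chunk = (@map[key] = Chunk.new(key, +""))
    end
    chunk.data << data
  end
end

# Buffered: one chunk per tag.
by_tag = ChunkMap.new(chunk_limit: 8) { |tag, _time| tag }
by_tag.emit('mongo.apache', 0, 'xxxxxx')
by_tag.emit('mongo.apache', 0, 'yyyyyy')   # 12 bytes > 8: first chunk queued
p by_tag.queue.map(&:key)   # => ["mongo.apache"]

# TimeSliced: one chunk per hour, so chunk.key is the time slice.
by_hour = ChunkMap.new(chunk_limit: 8) do |_tag, time|
  Time.at(time).utc.strftime('%Y%m%d%H')
end
by_hour.emit('a', 1328313614, 'xxxx')
by_hour.emit('b', 1328313615, 'yyyy')      # same hour, different tag: same chunk
by_hour.emit('a', 1328313616, 'zzzz')      # 12 bytes > 8: first chunk queued
p by_hour.queue.map(&:key)  # => ["2012020400"]
```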

- 61. How do we write output efficiently? We can use multiple processes (this works for input too). See `DetachMultiProcessMixin` with `detach_multi_process`.
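A plain-Ruby sketch of the multi-process idea using POSIX `fork` and `IO.pipe`. This is not `DetachMultiProcessMixin`'s code; `process_in_children` is a hypothetical helper in which each child handles a slice of chunks and reports a byte count back to the parent. `fork` is not available on Windows.

```ruby
# Hypothetical helper: split chunks across child processes.
def process_in_children(chunks, workers: 2)
  return [] if chunks.empty?

  slices = chunks.each_slice((chunks.size / workers.to_f).ceil).to_a
  readers = slices.map do |slice|
    reader, writer = IO.pipe
    fork do
      reader.close
      # Child: do the (pretend) expensive write, then report back.
      writer.puts(slice.sum(&:bytesize))
      writer.close
      exit!(0) # skip at_exit handlers inherited from the parent
    end
    writer.close # parent keeps only the read end
    reader
  end

  results = readers.map { |r| r.read.to_i.tap { r.close } }
  Process.waitall # reap all children
  results
end

p process_in_children(%w[aa bbb c dddd], workers: 2)  # => [5, 5]
```

Closing the unused pipe ends in each process matters: otherwise `r.read` in the parent would never see end-of-file.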

- 62. Test

- 63. Test drivers:
  - Input: `Fluent::Test::InputTestDriver`
  - Buffer: `Fluent::Test::BufferedOutputTestDriver`
  - Output: `Fluent::Test::OutputTestDriver`

- 64.

      class MongoOutputTest < Test::Unit::TestCase
        def setup
          Fluent::Test.setup
          require 'fluent/plugin/out_mongo'
        end

        def create_driver(conf = CONFIG)
          Fluent::Test::BufferedOutputTestDriver.new(Fluent::MongoOutput) {
            def start # prevent external access
              super
            end
            ...
          }.configure(conf)
        end

- 65.

        ...
        def test_format
          # test format using emit and expect_format
        end

        def test_write
          d = create_driver
          t = emit_documents(d)

          # return a result of write method
          collection_name, documents = d.run
          assert_equal([{...}, {...}, ...], documents)
          assert_equal('test', collection_name)
        end
        ...
      end
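The driver on these slides emits events, runs `format` and `write`, and returns what `write` returns. Below is a stripped-down imitation in plain Ruby; `MiniBufferedTestDriver` and `TabOutput` are hypothetical, and Fluentd's real drivers also handle `configure`, `start` and `shutdown`.

```ruby
# Hypothetical, minimal imitation of a buffered-output test driver.
class MiniBufferedTestDriver
  def initialize(plugin)
    @plugin = plugin
    @events = []
  end

  def emit(tag, time, record)
    @events << [tag, time, record]
    self
  end

  # Format every event, join them into one chunk, and return write's result.
  def run
    chunk = @events.map { |tag, time, record| @plugin.format(tag, time, record) }.join
    @plugin.write(chunk)
  end
end

# Hypothetical plugin whose write returns a value the test can inspect,
# like the slide's `collection_name, documents = d.run`.
class TabOutput
  def format(tag, _time, record)
    "#{tag}\t#{record['msg']}\n"
  end

  def write(chunk)
    lines = chunk.lines(chomp: true)
    [lines.first.split("\t").first, lines.map { |l| l.split("\t").last.upcase }]
  end
end

d = MiniBufferedTestDriver.new(TabOutput.new)
d.emit('test', 0, 'msg' => 'hello').emit('test', 1, 'msg' => 'world')
name, docs = d.run
p name  # => "test"
p docs  # => ["HELLO", "WORLD"]
```

Returning a value from `write` is what lets a test assert on the result without a real MongoDB.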

- 66. It's a weak point in Fluentd... right?

- 67. Release

- 68. Gem structure

      Plugin root
      |-- lib/
      |   |-- fluent/
      |       |-- plugin/
      |           |-- out_<name>.rb
      |-- Gemfile
      |-- fluent-plugin-<name>.gemspec
      |-- Rakefile
      |-- README.md (rdoc)
      |-- VERSION

- 69. Bundle with git

      $ edit lib/fluent/plugin/out_<name>.rb
      $ git add / commit
      $ cat VERSION
      0.1.0
      $ bundle exec rake release

  See: rubygems.org/gems/fluent-plugin-<name>
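A minimal gemspec matching the gem structure above. The gem name, author, summary and dependency version are placeholders (assumptions); released plugins are the best reference for the fields they actually set.

```ruby
require 'rubygems'

# Placeholder values throughout; only the field names are standard RubyGems.
spec = Gem::Specification.new do |s|
  s.name          = 'fluent-plugin-example'        # hypothetical plugin name
  s.version       = '0.1.0'                        # e.g. File.read('VERSION').strip
  s.summary       = 'Example output plugin for Fluentd'
  s.authors       = ['Your Name']
  s.files         = ['lib/fluent/plugin/out_example.rb']
  s.require_paths = ['lib']                        # puts lib/ on $LOAD_PATH
  s.add_runtime_dependency 'fluentd', '~> 0.10.0'  # assumed version constraint
  s.add_development_dependency 'rake'
end

puts spec.full_name  # => fluent-plugin-example-0.1.0
```

Keeping `lib` in `require_paths` is what lets Fluentd find `fluent/plugin/out_<name>.rb` through `$LOAD_PATH`.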

- 70. See released plugins for more details about each file.

- 71. Lastly...

- 72. Help!

- 73. Questions?
