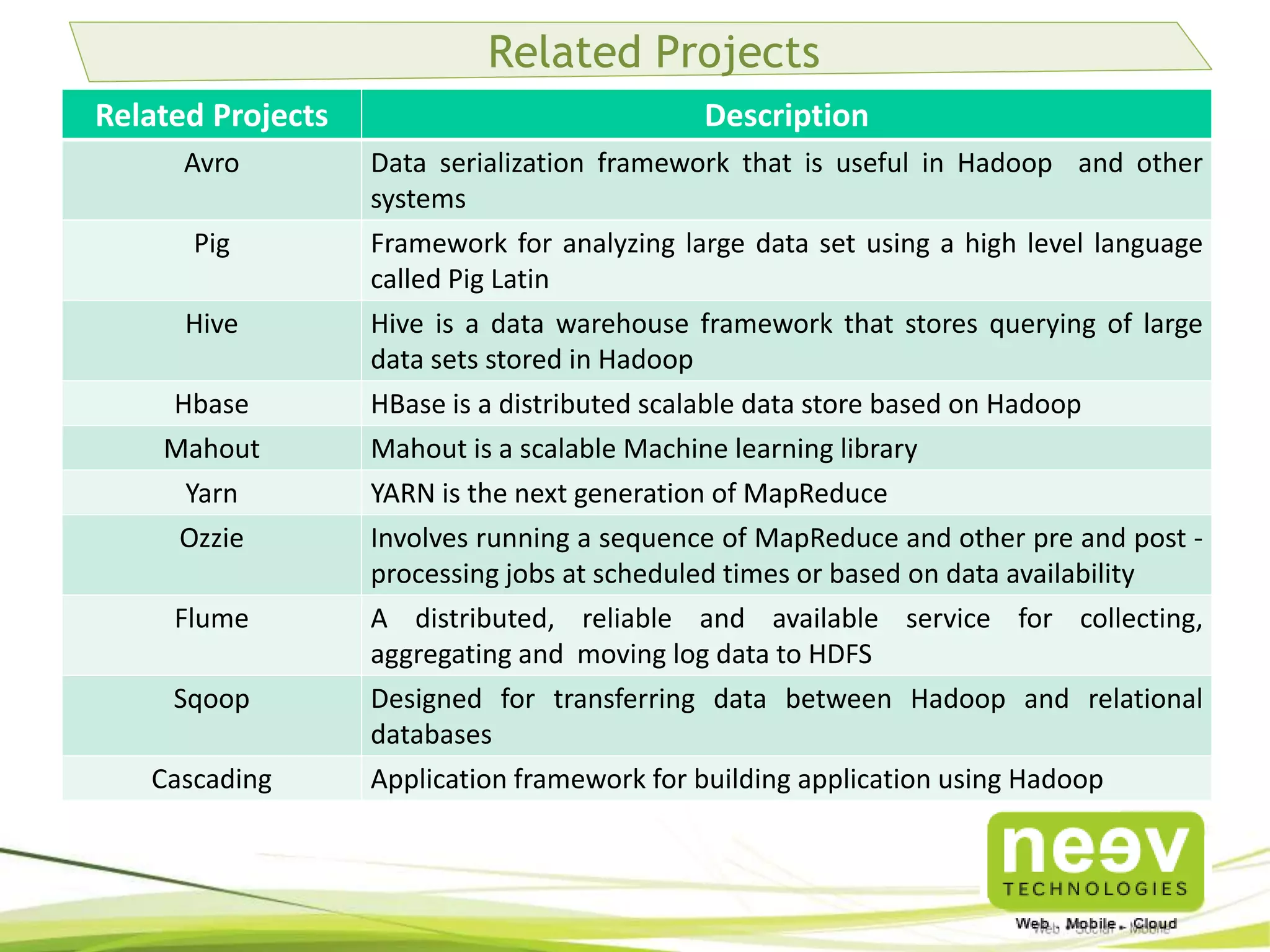

The document provides an overview of the Hadoop ecosystem, highlighting its significance in processing large data sets through a scalable distributed framework. It details core components such as HDFS and MapReduce, along with various distributions and related technologies. Additionally, it mentions Neev Technologies, a company with extensive expertise in managing offshore development and providing cloud services.