Java clients for elasticsearch

Slides for a talk on Java clients for elasticsearch at the Singapore JUG. Covers the TransportClient, the RestClient, Jest and Spring Data Elasticsearch.

![Indexing](https://image.slidesharecdn.com/java-clients-elasticsearch-161110043101/85/Java-clients-for-elasticsearch-8-320.jpg)

```sh
curl -XPOST "http://localhost:9200/food/dish" -d'
{
  "food": "Hainanese Chicken Rice",
  "tags": ["chicken", "rice"],
  "favorite": {
    "location": "Tian Tian",
    "price": 5.00
  }
}'
```

![Indexing](https://image.slidesharecdn.com/java-clients-elasticsearch-161110043101/85/Java-clients-for-elasticsearch-9-320.jpg)

```sh
curl -XPOST "http://localhost:9200/food/dish" -d'
{
  "food": "Ayam Penyet",
  "tags": ["chicken", "indonesian"],
  "spicy": true
}'
```
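Both slides show that indexing is a plain HTTP POST of a JSON document to `/{index}/{type}`, and that documents of the same type can carry different fields (`favorite` in one, `spicy` in the other). As a rough sketch of what the HTTP-based clients (RestClient, Jest) put on the wire, the same requests can be built with the JDK's own `java.net.http.HttpClient` (Java 11+, not one of the clients covered in the talk). The class name `IndexDish` and the node address are assumptions; the `Content-Type` header is also an addition: older Elasticsearch versions accept the body without it, while 6.0 and later require it.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class IndexDish {
    // Assumption: a local node on the default HTTP port, as in the curl examples.
    static final String BASE_URL = "http://localhost:9200";

    // The two documents from the slides; note that they do not share the same fields.
    static final String CHICKEN_RICE = "{"
            + "\"food\": \"Hainanese Chicken Rice\","
            + "\"tags\": [\"chicken\", \"rice\"],"
            + "\"favorite\": {\"location\": \"Tian Tian\", \"price\": 5.00}"
            + "}";
    static final String AYAM_PENYET = "{"
            + "\"food\": \"Ayam Penyet\","
            + "\"tags\": [\"chicken\", \"indonesian\"],"
            + "\"spicy\": true"
            + "}";

    // Equivalent of: curl -XPOST "http://localhost:9200/{index}/{type}" -d'{json}'
    // POSTing without an id lets Elasticsearch generate one.
    static HttpRequest indexRequest(String index, String type, String json) {
        return HttpRequest.newBuilder()
                .uri(URI.create(BASE_URL + "/" + index + "/" + type))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient http = HttpClient.newHttpClient();
        for (String doc : new String[] {CHICKEN_RICE, AYAM_PENYET}) {
            HttpResponse<String> resp = http.send(
                    indexRequest("food", "dish", doc),
                    HttpResponse.BodyHandlers.ofString());
            // Prints the HTTP status code and the raw JSON response body.
            System.out.println(resp.statusCode() + " " + resp.body());
        }
    }
}
```

The `/{index}/{type}` URL layout matches the Elasticsearch versions of the talk's era; mapping types were deprecated in 7.x and removed in 8.0, where the equivalent endpoint is `/{index}/_doc`.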

![Search](https://image.slidesharecdn.com/java-clients-elasticsearch-161110043101/85/Java-clients-for-elasticsearch-10-320.jpg)

```
curl -XGET "http://localhost:9200/food/dish/_search?q=chicken"
...
{"total":2,"max_score":0.3666863,"hits":
[{"_index":"food","_type":"dish","_id":"AVg9cMwARrBlrY9tYBqX","_score":0.3666863,"_source":
{
  "food": "Hainanese Chicken Rice",
  "tags": ["chicken", "rice"],
  "favorite": {
    "location": "Tian Tian",
    "price": 5.00
  }
}},
...
```
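The `q` parameter on the slide is a URI search: its value is parsed with Lucene's query string syntax, so anything beyond a single word (field prefixes such as `tags:`, spaces, boolean operators) has to be URL-encoded. A minimal sketch using the JDK's `URLEncoder` and `HttpClient`; the class and method names are illustrative, the local node address is assumed, and parsing the JSON response is left out (in practice that is the job of a JSON library or of one of the clients from the talk).

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class SearchDishes {
    static final String BASE_URL = "http://localhost:9200"; // assumed local node

    // Builds /{index}/{type}/_search?q=... with the query string URL-encoded
    // (URLEncoder turns spaces into '+' and ':' into "%3A").
    static URI searchUri(String index, String type, String query) {
        String q = URLEncoder.encode(query, StandardCharsets.UTF_8);
        return URI.create(BASE_URL + "/" + index + "/" + type + "/_search?q=" + q);
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = HttpRequest.newBuilder(searchUri("food", "dish", "chicken"))
                .GET()
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Raw JSON; the matching documents are under hits.hits[*]._source.
        System.out.println(response.body());
    }
}
```

For example, `searchUri("food", "dish", "tags:chicken AND spicy:true")` produces `http://localhost:9200/food/dish/_search?q=tags%3Achicken+AND+spicy%3Atrue`.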

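![Indexing](https://image.slidesharecdn.com/java-clients-elasticsearch-161110043101/85/Java-clients-for-elasticsearch-21-320.jpg)

```java
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.common.xcontent.XContentBuilder;

import static org.elasticsearch.common.xcontent.XContentFactory.jsonBuilder;

// 'client' is an org.elasticsearch.client.Client, e.g. a connected TransportClient.
// jsonBuilder() declares IOException, so the enclosing method must handle or declare it.
XContentBuilder builder = jsonBuilder()
    .startObject()
        .field("food", "Roti Prata")
        .array("tags", new String[] {"curry"})
        .startObject("favorite")
            .field("location", "Tiong Bahru")
            .field("price", 2.00)
        .endObject()
    .endObject();

// Index into index "food", type "dish"; no id is given, so Elasticsearch generates one.
IndexResponse resp = client.prepareIndex("food", "dish")
    .setSource(builder)
    .execute()
    .actionGet();
```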
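`XContentBuilder` wraps a streaming generator (Jackson, for JSON content): each `startObject`, `field` and `endObject` call emits its tokens in order, so the call sequence has to mirror the nesting of the document. To illustrate that idea without the Elasticsearch jars, here is a toy `MiniJsonBuilder`. It is hypothetical and not Elasticsearch's implementation: it only escapes quotes and backslashes and does no validation, but it accepts the same call sequence as the slide.

```java
public class MiniJsonBuilder {
    private final StringBuilder out = new StringBuilder();
    // True while the next entry is the first one in the current object (no comma needed).
    private boolean first = true;

    public MiniJsonBuilder startObject() {
        comma();
        out.append('{');
        first = true;
        return this;
    }

    public MiniJsonBuilder startObject(String name) {
        key(name);
        out.append('{');
        first = true;
        return this;
    }

    public MiniJsonBuilder endObject() {
        out.append('}');
        first = false; // anything that follows in the enclosing object needs a comma
        return this;
    }

    public MiniJsonBuilder field(String name, String value) {
        key(name);
        out.append(quote(value));
        return this;
    }

    public MiniJsonBuilder field(String name, double value) {
        key(name);
        out.append(value); // uses Double.toString, so 2.00 is written as 2.0
        return this;
    }

    public MiniJsonBuilder array(String name, String... values) {
        key(name);
        out.append('[');
        for (int i = 0; i < values.length; i++) {
            if (i > 0) out.append(',');
            out.append(quote(values[i]));
        }
        out.append(']');
        return this;
    }

    // Writes the separating comma (if needed) and the quoted key.
    private void key(String name) {
        comma();
        out.append(quote(name)).append(':');
    }

    private void comma() {
        if (!first) out.append(',');
        first = false;
    }

    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    @Override
    public String toString() {
        return out.toString();
    }
}
```

Chaining the same calls as on the slide (`startObject()`, `field("food", "Roti Prata")`, `array("tags", "curry")`, `startObject("favorite")`, ..., `endObject()`, `endObject()`) and calling `toString()` yields `{"food":"Roti Prata","tags":["curry"],"favorite":{"location":"Tiong Bahru","price":2.0}}`.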


Ad

Java clients for elasticsearch

- 1. Java Clients for Elasticsearch, Florian Hopf @fhopf

- 3. Elasticsearch? „Elasticsearch is a distributed, JSON-based search and analytics engine, designed for horizontal scalability, maximum reliability, and easy management.“


- 6. Installation
    # download archive
    wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.0.0.zip
    unzip elasticsearch-5.0.0.zip
    # on windows: elasticsearch.bat
    elasticsearch-5.0.0/bin/elasticsearch

- 7. Accessing Elasticsearch
    curl -XGET "http://localhost:9200"
    {
      "name" : "LI8ZN-t",
      "cluster_name" : "elasticsearch",
      "cluster_uuid" : "UvbMAoJ8TieUqugCGw7Xrw",
      "version" : {
        "number" : "5.0.0",
        "build_hash" : "253032b",
        "build_date" : "2016-10-26T04:37:51.531Z",
        "build_snapshot" : false,
        "lucene_version" : "6.2.0"
      },
      "tagline" : "You Know, for Search"
    }

- 8. Indexing
    curl -XPOST "http://localhost:9200/food/dish" -d'
    {
      "food": "Hainanese Chicken Rice",
      "tags": ["chicken", "rice"],
      "favorite": {
        "location": "Tian Tian",
        "price": 5.00
      }
    }'

- 9. Indexing
    curl -XPOST "http://localhost:9200/food/dish" -d'
    {
      "food": "Ayam Penyet",
      "tags": ["chicken", "indonesian"],
      "spicy": true
    }'

- 10. Search
    curl -XGET "http://localhost:9200/food/dish/_search?q=chicken"
    ...
    {"total":2,"max_score":0.3666863,"hits":
    [{"_index":"food","_type":"dish","_id":"AVg9cMwARrBlrY9tYBqX","_score":0.3666863,"_source":
      {
        "food": "Hainanese Chicken Rice",
        "tags": ["chicken", "rice"],
        "favorite": {
          "location": "Tian Tian",
          "price": 5.00
        }
      }},
    ...

- 11. Search using Query DSL
    curl -XPOST "http://localhost:9200/food/dish/_search" -d'
    {
      "query": {
        "bool": {
          "must": { "match": { "_all": "rice" } },
          "filter": { "term": { "tags.keyword": "chicken" } }
        }
      }
    }'
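When no builder API is at hand, the bool query above can also be assembled as a plain JSON string, for example as the query string that Jest's `Search.Builder` (slide 39) or the REST-Client's `NStringEntity` (slide 34) accepts. This is a minimal sketch under assumptions: the class `BoolQueryJson` and its methods are hypothetical, and `escape` only handles backslashes and double quotes, so it is not a complete JSON encoder.

```java
/**
 * Hypothetical helper: builds the bool query from the slide as a JSON string.
 * Plain string building with minimal escaping; a real client would use a JSON library.
 */
public final class BoolQueryJson {

    // Escapes backslashes and double quotes only (no control characters).
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // must: full-text match on _all; filter: exact term on tags.keyword
    static String boolQuery(String text, String tag) {
        return "{\"query\":{\"bool\":{"
                + "\"must\":{\"match\":{\"_all\":\"" + escape(text) + "\"}},"
                + "\"filter\":{\"term\":{\"tags.keyword\":\"" + escape(tag) + "\"}}"
                + "}}}";
    }

    public static void main(String[] args) {
        System.out.println(boolQuery("rice", "chicken"));
    }
}
```

The filter clause only restricts the result set, while the match clause also contributes to scoring, which is why the tag goes into `filter` and the free text into `must`.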

- 12. Elasticsearch? „Elasticsearch is a distributed, JSON-based search and analytics engine, designed for horizontal scalability, maximum reliability, and easy management.“

- 13. Distributed

- 14. Distributed

- 15. Recap ● Java-based search server ● HTTP and JSON ● Search and filtering via the Query DSL ● Faceting, highlighting, suggestions, … ● Nodes can form a cluster

- 16. Transport-Client

- 17. Transport-Client
    dependencies {
        compile group: 'org.elasticsearch.client', name: 'transport', version: '5.0.0'
    }

- 18. Transport-Client
    TransportAddress address = new InetSocketTransportAddress(
        InetAddress.getByName("localhost"), 9300);
    Client client = new PreBuiltTransportClient(Settings.EMPTY)
        .addTransportAddress(address);

- 19. Search using Query DSL
    curl -XPOST "http://localhost:9200/food/dish/_search" -d'
    {
      "query": {
        "bool": {
          "must": { "match": { "_all": "rice" } },
          "filter": { "term": { "tags.keyword": "chicken" } }
        }
      }
    }'

- 20. Search using Search Builders
    // boolQuery, matchQuery, termQuery: static imports from org.elasticsearch.index.query.QueryBuilders
    SearchResponse searchResponse = client
        .prepareSearch("food")
        .setQuery(
            boolQuery()
                .must(matchQuery("_all", "rice"))
                .filter(termQuery("tags.keyword", "chicken")))
        .execute().actionGet();
    assertEquals(1, searchResponse.getHits().getTotalHits());
    SearchHit hit = searchResponse.getHits().getAt(0);
    String food = hit.getSource().get("food").toString();

- 21. Indexing
    // jsonBuilder(): static import from org.elasticsearch.common.xcontent.XContentFactory
    XContentBuilder builder = jsonBuilder()
        .startObject()
            .field("food", "Roti Prata")
            .array("tags", new String[] {"curry"})
            .startObject("favorite")
                .field("location", "Tiong Bahru")
                .field("price", 2.00)
            .endObject()
        .endObject();
    IndexResponse resp = client.prepareIndex("food", "dish")
        .setSource(builder)
        .execute()
        .actionGet();

- 22. Indexing

- 23. Transport-Client ● Connects to an existing cluster ● Uses the binary transport protocol that the nodes also use to communicate within the cluster (port 9300)

- 24. Transport-Client

- 25. Sniffing

- 26. Sniffing ● State of the cluster can be requested from Elasticsearch ● Client-side load balancing
    TransportAddress address = new InetSocketTransportAddress(
        InetAddress.getByName("localhost"), 9300);
    Settings settings = Settings.builder()
        .put("client.transport.sniff", true)
        .build();
    Client client = new PreBuiltTransportClient(settings)
        .addTransportAddress(address);

- 27. Transport-Client ● Full API support ● Efficient communication ● Client-side load balancing

- 28. Transport-Client Gotchas ● Client and cluster Elasticsearch versions must be compatible ● Pulls in Elasticsearch itself as a dependency
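Client-side load balancing, as enabled by sniffing on slide 26, comes down to spreading requests over the known nodes. The following is a minimal round-robin sketch of that idea, not the Transport-Client's actual implementation; the class `RoundRobinNodes` and the node addresses are hypothetical, and the node list is fixed here instead of being refreshed from the cluster state.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical sketch of client-side load balancing: requests are spread
 * over the known nodes in round-robin order. With sniffing, the node list
 * would be updated from the cluster state; here it stays fixed.
 */
public final class RoundRobinNodes {
    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinNodes(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node required");
        }
        this.nodes = List.copyOf(nodes); // defensive, immutable copy
    }

    // Thread-safe: each call advances a shared counter and picks the next node.
    // floorMod keeps the index valid even after the counter overflows.
    String next() {
        int index = Math.floorMod(counter.getAndIncrement(), nodes.size());
        return nodes.get(index);
    }

    public static void main(String[] args) {
        RoundRobinNodes balancer = new RoundRobinNodes(
                List.of("localhost:9300", "localhost:9301", "localhost:9302"));
        for (int i = 0; i < 4; i++) {
            System.out.println(balancer.next());
        }
    }
}
```

Running `main` prints the three addresses in order and then starts over with `localhost:9300`.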

- 29. REST-Client

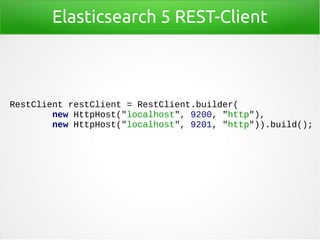

- 31. Elasticsearch 5 REST-Client
    dependencies {
        compile group: 'org.elasticsearch.client', name: 'rest', version: '5.0.0'
    }

- 32. Elasticsearch 5 REST-Client
    +--- org.apache.httpcomponents:httpclient:4.5.2
    +--- org.apache.httpcomponents:httpcore:4.4.5
    +--- org.apache.httpcomponents:httpasyncclient:4.1.2
    +--- org.apache.httpcomponents:httpcore-nio:4.4.5
    +--- commons-codec:commons-codec:1.10
    \--- commons-logging:commons-logging:1.1.3

- 33. Elasticsearch 5 REST-Client
    RestClient restClient = RestClient.builder(
        new HttpHost("localhost", 9200, "http"),
        new HttpHost("localhost", 9201, "http")).build();

- 34. Elasticsearch 5 REST-Client
    HttpEntity entity = new NStringEntity(
        "{ \"query\": { \"match_all\": {}}}",
        ContentType.APPLICATION_JSON);
    // alternative: performRequestAsync
    Response response = restClient.performRequest(
        "POST", "/_search", emptyMap(), entity);
    // EntityUtils: org.apache.http.util; emptyMap(): java.util.Collections
    String json = EntityUtils.toString(response.getEntity());
    // ...

- 35. Elasticsearch 5 REST-Client ● Less dependent on the Elasticsearch version ● Clean network separation between application and cluster ● Minimal dependencies ● Sniffing ● Error handling, timeout config, basic auth, headers, … ● No search API (for now): queries are sent as raw JSON
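For comparison with the REST-Client request on slide 34, the same `POST /_search` call can be described with the JDK's own `java.net.http` API (Java 11 or later). This is a sketch under assumptions: it is not the Elasticsearch REST-Client, the class name `SearchRequestSketch` is hypothetical, and the five-second timeout is an arbitrary illustrative value. It only builds the request; sending it needs a running node.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

/**
 * Sketch only: the POST /_search request from the slide, built with the
 * JDK's java.net.http API instead of the Elasticsearch RestClient.
 */
public final class SearchRequestSketch {

    // Same body as on the slide, with the inner quotes escaped.
    static final String MATCH_ALL = "{ \"query\": { \"match_all\": {}}}";

    static HttpRequest searchRequest(String baseUrl, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/_search"))
                .timeout(Duration.ofSeconds(5))          // assumed per-request timeout
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = searchRequest("http://localhost:9200", MATCH_ALL);
        System.out.println(request.method() + " " + request.uri());
        // Sending requires a running node, e.g.:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

Running `main` prints `POST http://localhost:9200/_search`. Unlike the REST-Client, this sketch has no sniffing and no built-in failover across several hosts.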

- 36. Jest – HTTP Client

- 37. Jest
    dependencies {
        compile group: 'io.searchbox', name: 'jest', version: '2.0.0'
    }

- 38. Client
    JestClientFactory factory = new JestClientFactory();
    factory.setHttpClientConfig(new HttpClientConfig
        .Builder("http://localhost:9200")
        .multiThreaded(true)
        .build());
    JestClient client = factory.getObject();

- 39. Searching Jest
    // placeholder: the query JSON, e.g. the Query DSL from slide 11
    String query = jsonStringThatMagicallyAppears;
    Search search = new Search.Builder(query)
        .addIndex("food")
        .build();
    SearchResult result = client.execute(search);
    assertEquals(Integer.valueOf(1), result.getTotal());

- 40. Searching Jest
    JsonObject jsonObject = result.getJsonObject();
    JsonObject hitsObj = jsonObject.getAsJsonObject("hits");
    JsonArray hits = hitsObj.getAsJsonArray("hits");
    JsonObject hit = hits.get(0).getAsJsonObject();
    // ... more boring code

- 41. Searching Jest
    public class Dish {
        private String food;
        private List<String> tags;
        private Favorite favorite;
        @JestId
        private String id;
        // ... getters and setters
    }

- 42. Searching with Jest
    Dish dish = result.getFirstHit(Dish.class).source;
    assertEquals("Roti Prata", dish.getFood());

- 43. Jest ● Alternative HTTP implementation ● Queries as strings or via the Elasticsearch query builders ● Indexing and searching Java beans ● Node discovery

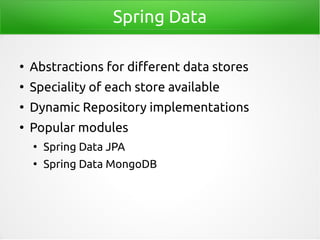

- 45. Spring Data ● Abstractions for different data stores ● Store-specific features remain available ● Dynamic repository implementations ● Popular modules: Spring Data JPA, Spring Data MongoDB

- 46. Dependency
    dependencies {
        compile group: 'org.springframework.data',
                name: 'spring-data-elasticsearch', version: '2.0.4.RELEASE'
    }

- 47. Entity
    @Document(indexName = "spring_dish")
    public class Dish {
        @Id
        private String id;
        private String food;
        private List<String> tags;
        private Favorite favorite;
        // more code
    }

- 48. Repository
    public interface DishRepository
        extends ElasticsearchCrudRepository<Dish, String> {
    }

- 49. Configuration
    <elasticsearch:transport-client id="client" />
    <bean name="elasticsearchTemplate"
          class="o.s.d.elasticsearch.core.ElasticsearchTemplate">
        <constructor-arg name="client" ref="client"/>
    </bean>
    <elasticsearch:repositories
        base-package="de.fhopf.elasticsearch.springdata" />

- 50. Indexing
    Dish mie = new Dish();
    mie.setId("hokkien-prawn-mie");
    mie.setFood("Hokkien Prawn Mie");
    mie.setTags(Arrays.asList("noodles", "prawn"));
    repository.save(Arrays.asList(mie));

- 51. Searching
    Iterable<Dish> dishes = repository.findAll();
    Dish dish = repository.findOne("hokkien-prawn-mie");

- 52. Repository
    public interface DishRepository
        extends ElasticsearchCrudRepository<Dish, String> {
        List<Dish> findByFood(String food);
        List<Dish> findByTagsAndFavoriteLocation(String tag, String location);
        List<Dish> findByFavoritePriceLessThan(Double price);
        // @Query takes the query clause itself, with escaped quotes
        @Query("{\"match_all\": {}}")
        List<Dish> customFindAll();
    }

- 53. Recap ● High-level abstraction ● Entity beans ● Dynamic repository implementations ● HTTP support in the making ● Slower feature development
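To make the meaning of the derived finder names on slide 52 concrete, here is an in-memory illustration of two of them using Java streams (Java 16 or later for records). This is a simplification under stated assumptions: Spring Data Elasticsearch translates the method names into Elasticsearch queries instead of filtering in memory, and Elasticsearch would match the analyzed `location` text rather than compare strings exactly. The `Dish` and `Favorite` records and the class `DerivedQuerySemantics` are hypothetical stand-ins for the entity classes on the slides.

```java
import java.util.List;
import java.util.stream.Collectors;

/**
 * In-memory illustration only: what the derived method names express.
 * Spring Data Elasticsearch turns these names into Elasticsearch queries;
 * the records below are simplified, hypothetical stand-ins for the entities.
 */
public final class DerivedQuerySemantics {

    record Favorite(String location, double price) {}

    record Dish(String food, List<String> tags, Favorite favorite) {}

    // findByTagsAndFavoriteLocation: tags contain the tag AND favorite.location equals location
    static List<Dish> findByTagsAndFavoriteLocation(List<Dish> dishes, String tag, String location) {
        return dishes.stream()
                .filter(d -> d.tags().contains(tag))
                .filter(d -> d.favorite() != null && d.favorite().location().equals(location))
                .collect(Collectors.toList());
    }

    // findByFavoritePriceLessThan: favorite.price strictly below the given price
    static List<Dish> findByFavoritePriceLessThan(List<Dish> dishes, double price) {
        return dishes.stream()
                .filter(d -> d.favorite() != null && d.favorite().price() < price)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Dish> dishes = List.of(
                new Dish("Hainanese Chicken Rice", List.of("chicken", "rice"),
                        new Favorite("Tian Tian", 5.00)),
                new Dish("Roti Prata", List.of("curry"), new Favorite("Tiong Bahru", 2.00)),
                new Dish("Ayam Penyet", List.of("chicken", "indonesian"), null));
        System.out.println(findByFavoritePriceLessThan(dishes, 3.0));
    }
}
```

With the sample data, `findByFavoritePriceLessThan(dishes, 3.0)` returns only the Roti Prata entry, and `findByTagsAndFavoriteLocation(dishes, "chicken", "Tian Tian")` returns only Hainanese Chicken Rice; Ayam Penyet has no favorite and is skipped by both.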

- 54. Recap
    ● Transport-Client: full API support, Elasticsearch dependency
    ● REST-Client: uses HTTP, currently lacking a search API

- 55. Recap
    ● Jest: HTTP client, support for Java beans
    ● Spring-Data-Elasticsearch: high-level abstraction, dynamic repositories