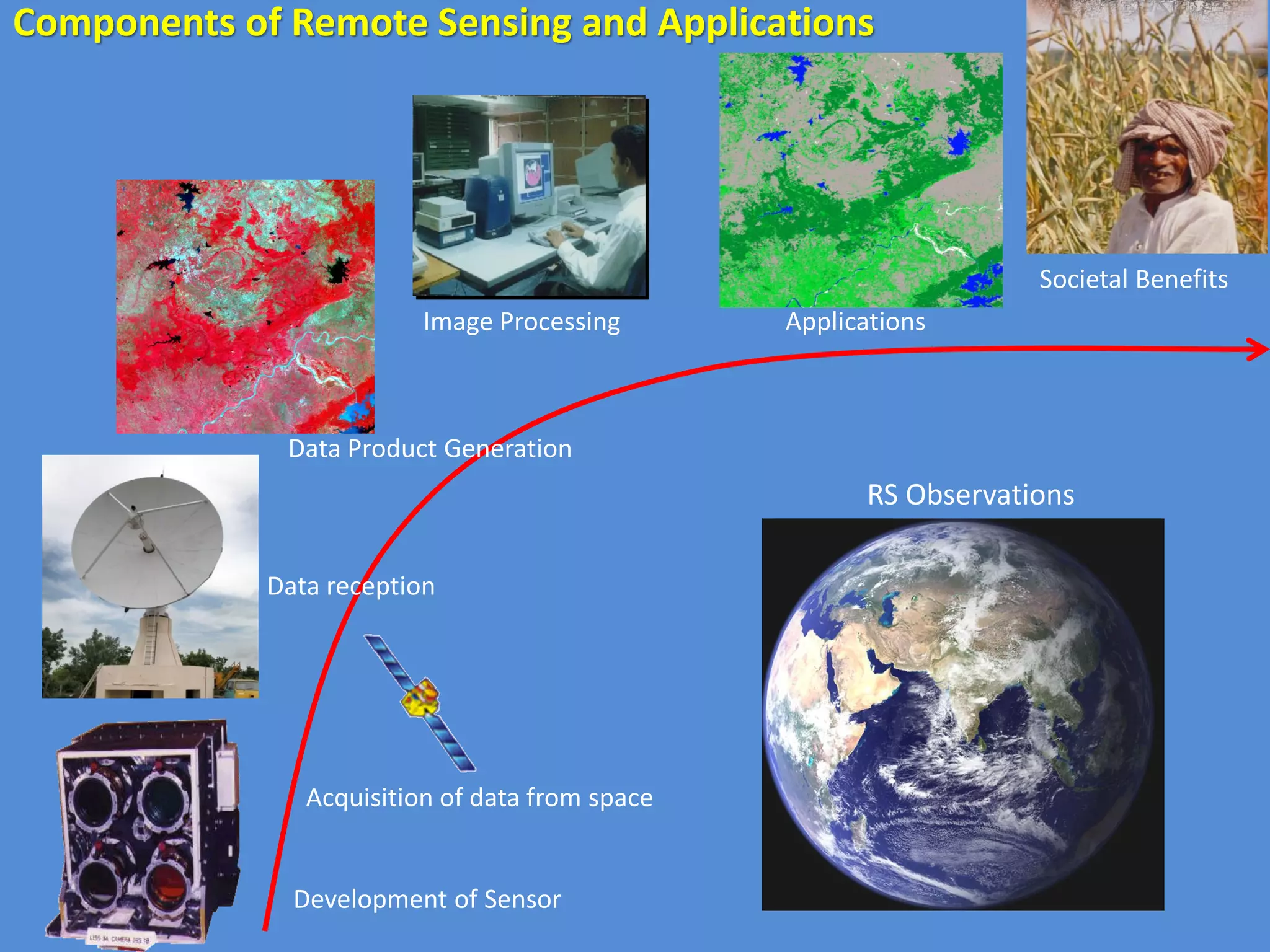

Remote sensing is the acquisition of data about objects from measurements made at a distance, without direct contact. Sensors mounted on platforms such as satellites and aircraft measure electromagnetic radiation reflected or emitted from the Earth's surface, and different sensor types cover different portions of the electromagnetic spectrum. Image processing enhances the resulting images and extracts information from them through techniques such as pre-processing, classification, and change detection. Pre-processing corrects errors and artifacts in raw images through steps such as radiometric correction, geometric correction, and atmospheric correction. Classification assigns pixels to land cover classes using methods such as supervised classification, which relies on user-defined training data, and unsupervised classification, which groups pixels automatically.
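The contrast between supervised and unsupervised classification can be illustrated with a minimal sketch. It assumes a minimum-distance-to-means classifier for the supervised case and plain k-means for the unsupervised case; these are two common choices used here for illustration, not methods named in the text. The two-band pixel values, the class names, and all function names are hypothetical.

```python
import math

def mean_vector(pixels):
    """Average each band across a list of pixel vectors."""
    n = len(pixels)
    return tuple(sum(p[b] for p in pixels) / n for b in range(len(pixels[0])))

def nearest(pixel, centers):
    """Return the key of the center closest to the pixel (Euclidean distance)."""
    return min(centers, key=lambda k: math.dist(pixel, centers[k]))

def supervised_classify(pixels, training):
    """Minimum-distance-to-means: training maps class name -> sample pixels."""
    centers = {name: mean_vector(samples) for name, samples in training.items()}
    return [nearest(p, centers) for p in pixels]

def unsupervised_classify(pixels, k, iterations=10):
    """Plain k-means: clusters get numeric ids and carry no semantic label."""
    # Deterministic seeding for this sketch: the first k pixels are the
    # initial centers (real tools usually seed randomly or with k-means++).
    centers = {i: pixels[i] for i in range(k)}
    labels = []
    for _ in range(iterations):
        labels = [nearest(p, centers) for p in pixels]
        for i in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == i]
            if members:  # keep the old center if a cluster ends up empty
                centers[i] = mean_vector(members)
    return labels

# Hypothetical two-band pixels: (red, near-infrared) reflectance.
pixels = [(0.05, 0.40), (0.30, 0.35), (0.06, 0.45), (0.28, 0.32)]

# User-defined training samples, assumed for illustration only.
training = {
    "vegetation": [(0.04, 0.42), (0.05, 0.48)],
    "bare_soil": [(0.29, 0.33), (0.31, 0.36)],
}

supervised = supervised_classify(pixels, training)
unsupervised = unsupervised_classify(pixels, k=2)

print(supervised)    # class names taken from the training data
print(unsupervised)  # anonymous cluster ids that an analyst must interpret
```

The supervised result carries the class names supplied with the training data, while the unsupervised result only separates the pixels into numbered groups; attaching a land cover meaning to each group is left to the analyst.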