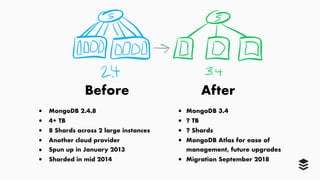

This document summarizes Dan Farrelly's experience migrating Buffer's production database from MongoDB 2.4 to MongoDB Atlas. Key steps included building indexes on large collections, migrating small collections with mongodump/mongorestore, and using bulk operations and delta migrations for the largest collections. Important considerations included driver and version compatibility, validating that indexes were actually built, and stopping the balancer during shard migrations. The migration reduced storage usage by over 3 TB and improved query performance.

![Double & triple check indexes have been built

- Validate your indexes have been built
- 1 or 2 indexes failing to build could mean hours of headaches for you
- Don’t end up with a collScan on a collection with 1B+ records!

function createAll() {
  db.coll.createIndexes([…])
  db.other.createIndex({…})
}

createAll()](https://image.slidesharecdn.com/murrayhillday1415pmmdb-world-2019-farrelly1-190701161151/85/MongoDB-World-2019-Lessons-Learned-Migrating-Buffer-s-Production-Database-to-MongoDB-Atlas-from-MongoDB-2-4-12-320.jpg)
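The slide's advice to validate that every index was built can be sketched as a small comparison between the indexes you meant to create and the ones the collection reports. This is a minimal sketch, not the method used in the talk: `findMissingIndexes` and `keySignature` are hypothetical helper names, the collection fields are invented for illustration, and the sample data only imitates the shape of what `db.collection.getIndexes()` returns in the shell.

```javascript
// Hypothetical helpers (not from the talk): report expected indexes that a
// collection does not have. `expected` is an array of index key specs;
// `actual` is an array shaped like the result of db.collection.getIndexes().

function keySignature(keySpec) {
  // Field order matters in a compound index, so keep the insertion order.
  return JSON.stringify(Object.entries(keySpec));
}

function findMissingIndexes(expected, actual) {
  const built = new Set(actual.map((idx) => keySignature(idx.key)));
  return expected.filter((keySpec) => !built.has(keySignature(keySpec)));
}

// Hand-written sample data; field names are assumptions for illustration.
const actualIndexes = [
  { v: 2, key: { _id: 1 }, name: "_id_" },
  { v: 2, key: { user_id: 1, created_at: -1 }, name: "user_id_1_created_at_-1" },
];

const expectedIndexes = [
  { user_id: 1, created_at: -1 },
  { profile_id: 1 },
];

const missing = findMissingIndexes(expectedIndexes, actualIndexes);
console.log(JSON.stringify(missing));
```

In a shell session, the `actual` argument would come from calling `getIndexes()` on each migrated collection. A non-empty result flags an index to rebuild before traffic is pointed at the new cluster. The comparison looks only at index keys, so options such as `unique` or `sparse` would need a separate check.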