Principal component analysis and matrix factorizations for learning (part 1) ding - icml 2005 tutorial - 2005

- 1. Principal Component Analysis and Matrix Factorizations for Learning Chris Ding Lawrence Berkeley National Laboratory Supported by Office of Science, U.S. Dept. of Energy PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 1

- 2. Many unsupervised learning methods are closely related in a simple way PCA NMF K-means Spectral clustering Clustering Semi-supervised classification Indicator Matrix Semi-supervised clustering Quadratic Clustering Outlier detection PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 2

- 3. Part 1.A. Principal Component Analysis (PCA) and Singular Value Decomposition (SVD) • Widely used in large number of different fields • Most widely known as PCA (multivariate statistics) • SVD is the theoretical basis for PCA PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 3

- 4. Brief history • PCA – Draw a plane closest to data points (Pearson, 1901) – Retain most variance (Hotelling, 1933) • SVD – Low-rank approximation (Eckart-Young, 1936) – Practical application/Efficient Computation (Golub- Kahan, 1965) • Many generalizations PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 4

- 5. PCA and SVD Data: n points in p-dim: X = ( x1 , x2 ,L, xn ) p Covariance C = XX T = ∑ λk uk uk T k =1 r Gram (kernel) matrix XTX = ∑ k =1 λk v k v k T Principal directions: u k Principal components: k v (Principal axis,subspace) (projection on the subspace) p Underlying basis: SVD X = ∑k =1 σ k uk vk = UΣV T T PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 5

- 6. Further Developments SVD/PCA • Principal Curves • Independent Component Analysis • Sparse SVD/PCA (many approaches) • Mixture of Probabilistic PCA • Generalization to exponential familty, max-margin • Connection to K-means clustering Kernel (inner-product) • Kernel PCA PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 6

- 7. Methods of PCA Utilization Principal components X = ( x1 , x2 ,L, xn ) (uncorrelated random variables): uk = uk (1) ⋅ X 1 + L + uk (d ) ⋅ X d p Dimension reduction: X= ∑ k =1 σ k uk vk = UΣV T T Projection to low-dim ~ U = (u1 ,L, uk ) X =UT X subspace Sphereing the data ~ X = C −1 / 2 X = UΣ −1U T X Transform data to N(0,1) PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 7

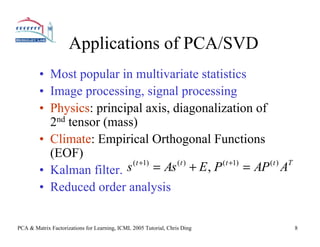

- 8. Applications of PCA/SVD • Most popular in multivariate statistics • Image processing, signal processing • Physics: principal axis, diagonalization of 2nd tensor (mass) • Climate: Empirical Orthogonal Functions (EOF) • Kalman filter. s ( t +1) = As ( t ) + E , P ( t +1) = AP (t ) AT • Reduced order analysis PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 8

- 9. Applications of PCA/SVD • PCA/SVD is as widely as Fast Fourier Transforms – Both are spectral expansions – FFT is more on Partial Differential Equations – PCA/SVD is more on discrete (data) analysis – PCA/SVD surpass FFT as computational sciences further advance • PCA/SVD – Select combination of variables – Dimension reduction • An image has 104 pixels. True dimension is 20 ! PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 9

- 10. PCA is a Matrix Factorization (spectral/eigen decomposition) Principal directions: U = (u1 , u2 ,L, uk ) Principal components: V = (v1 , v2 ,L, vk ) p Covariance C = XX T = ∑k =1 λk uk uk =UΛU T T r Kernel matrix XTX = ∑k =1 λk vk vk = VΛV T T p Underlying basis: SVD X = ∑k =1 σ k uk vk = UΣV T T PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 10

- 11. From PCA to spectral clustering using generalized eigenvectors Consider the kernel matrix: Wij = φ ( xi ),φ ( x j ) In Kernel PCA we compute eigenvector: Wv = λv Generalized Eigenvector: Wq = λDq D = diag (d1,L, dn ) di = ∑w j ij This leads to Spectral Clustering ! PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 11

- 12. Scale PCA ⇒ Spectral Clustering PCA: W= ∑ k vk λk vT k ∑ 1 ~ 1 Scaled PCA: W = D W D2 = D 2 qk λk qT D k k =1 ~ −1 −1 ~ W = D WD , wij = wij /(did j) 2 2 1/ 2 −1 qk = D vk scaled principal component 2 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 12

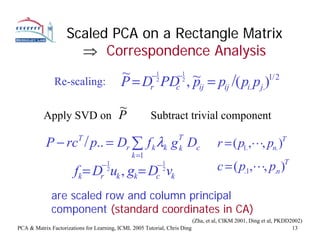

- 13. Scaled PCA on a Rectangle Matrix ⇒ Correspondence Analysis ~ −1 −1 ~ Re-scaling: P = Dr 2 PD 2 , pij = pij /( pi. pj.)1/ 2 c ~ Apply SVD on P Subtract trivial component P − rc / p.. = Dr ∑ f k λk g Dc T T k r = ( p1.,L, pn. ) T k =1 −1 fk = D u , gk = D v 2 −1 2 c = ( p.1,L, p.n ) T r k c k are scaled row and column principal component (standard coordinates in CA) (Zha, et al, CIKM 2001, Ding et al, PKDD2002) PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 13

- 14. Nonnegative Matrix Factorization Data Matrix: n points in p-dim: xi is an image, X = ( x1 , x2 ,L, xn ) document, webpage, etc Decomposition (low-rank approximation) X ≈ FG T Nonnegative Matrices X ij ≥ 0, Fij ≥ 0, Gij ≥ 0 F = ( f1 , f 2 , L, f k ) G = ( g1 , g 2 ,L, g k ) PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 14

- 15. Solving NMF with multiplicative updating J =|| X − FGT ||2 , F ≥ 0, G ≥ 0 Fix F, solve for G; Fix G, solve for F Lee & Seung ( 2000) propose ( XG )ik ( X T F ) jk Fik ← Fik G jk ← G jk ( FGT G )ik (GF T F ) jk PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 15

- 16. Matrix Factorization Summary Symmetric Rectangle Matrix (kernel matrix, graph) (contigency table, bipartite graph) PCA: W = VΛV T X = UΣV T Scaled PCA: 1 1 ~ 1 ~ 1 W = D W D = D QΛQ D 2 2 T X = Dr X Dc2 = Dr FΛG T Dc 2 NMF: W ≈ QQ T X ≈ FG T PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 16

- 17. Indicator Matrix Quadratic Clustering Unsigned Cluster indicator Matrix H=(h1, …, hK) Kernel K-means clustering: max Tr( H T WH ), s.t. H T H = I , H ≥ 0 H K-means: W = XT X; Kernel K-means W = (< φ ( xi ),φ ( x j ) >) Spectral clustering (normalized cut) max Tr( H T WH ), s.t. H T DH = I , H ≥ 0 H Difference between the two is the orthogonality of H PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 17

- 18. Indicator Matrix Quadratic Clustering Additional features: Semi-suerpvised classification: max Tr( H T WH + C T H ) H Semi-supervised clustering: (A) must-link and (B) cannot-link constraints max Tr( H T WH + αH T AH − βH T BH ) H Outlier Detection: max Tr( H T WH ) allowing zero rows in H H Nonnegative Lagrangian Relaxation: (WH )ik + Cik / 2 H ik ← H ik , α = H T WH + H T C. ( Hα )ik PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 18

- 19. Tutorial Outline • PCA – Recent developments on PCA/SVD – Equivalence to K-means clustering • Scaled PCA – Laplacian matrix – Spectral clustering – Spectral ordering • Nonnegative Matrix Factorization – Equivalence to K-means clustering – Holistic vs. Parts-based • Indicator Matrix Quadratic Clustering – Use Nonnegative Lagrangian Relaxtion – Includes • K-means and Spectral Clustering • semi-supervised classification • Semi-supervised clustering • Outlier detection PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding 19

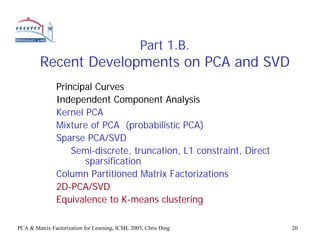

- 20. Part 1.B. Recent Developments on PCA and SVD Principal Curves Independent Component Analysis Kernel PCA Mixture of PCA (probabilistic PCA) Sparse PCA/SVD Semi-discrete, truncation, L1 constraint, Direct sparsification Column Partitioned Matrix Factorizations 2D-PCA/SVD Equivalence to K-means clustering PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 20

- 21. PCA and SVD Data Matrix: X = ( x1 , x2 ,L, xn ) p Covariance C = XX T = ∑ λk uk uk T k =1 r Gram (kernel) matrix XTX = ∑ k =1 λk v k v k T Principal directions: u k Principal components: k v (Principal axis,subspace) (projection on the subspace) p Underlying basis: SVD X = ∑σ u v T k k k k =1 PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 21

- 22. Kernel PCA xi → φ ( xi ) Kernel K ij = φ ( xi ),φ ( x j ) PCA Component v Feature extraction v, φ ( x ) = ∑ i vi φ ( xi ),φ ( x) Indefinite Kernels Generalization to graphs with nonnegative weights (Scholkopf, Smola, Muller, 1996) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 22

- 23. Mixture of PCA • Data has local structures. – Global PCA on all data is not useful • Clustering PCA (Hinton et al): – Using clustering to cluster data into clusters – Perform PCA in each cluster – No explicit generative model • Probabilistic PCA (Tipping & Bishop) – Latent variables – Generative model (Gaussian) – Mixture of Gaussians ⇒ mixture of PCA – Adding Markov dynamics for latent variables (Linear Gaussian Models) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 23

- 24. Probabilistic PCA Linear Gaussian Model Latent variables S = ( s1 ,L, sn ) xi = Wsi + μ + ε , ε ~ N (0,σ ε I ) 2 Gaussian prior P( s) ~ N ( s0 , σ s I ) 2 x ~ N (Ws0 ,σ ε I + σ sWW ) 2 T Linear Gaussian Model si +1 = Asi + η , xi = Wsi + ε , (Tipping & Bishop, 1995; Roweis & Ghahramani, 1999) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 24

- 25. Sparse PCA • Compute a factorization X ≈ UV T – U or V is sparse or both are sparse • Why sparse? – Variable selection (sparse U) – When n >> d – Storage saving – Other new reasons? • L1 and L2 constraints PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 25

- 26. Sparse PCA: Truncation and Discretization X ≈ UΣV T • Sparsified SVD U = (u1 Luk ) V = (v1 Lvk ) – Compute {uk,vk} one at a time, truncate those entries below a threshold. – Recursively compute all pairs using deflation. – (Zhang, Zha, Simon, 2002) X ← X − σ uvT • Semi-discrete decomposition – U, V only contains {-1, 0, 1} – Iterative algorithm to compute U,V using deflation – (Kolda & O’leary, 1999) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 26

- 27. Sparse PCA: L1 constraint • LASSO (Tibshirani, 1996) min || y − X T β ||2 , || β ||1 ≤ t • SCoTLASS (Joliffe & Uddin, 2003) max u T ( XX T )u T , || u ||1 ≤ t , u T uh = 0 • Least Angle Regression (Efron, et al 2004) • Sparse PCA (Zou, Hastie, Tibshirani,2004) n k k min α ,β ∑ i =1 || xi − α β T xi ||2 +λ ∑ j =1 || β j ||2 + ∑ j =1 λ1, j || β j ||1 , α T α = I v j = β j / || β j || PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 27

- 28. Sparse PCA: Direct Sparsification • Sparse SVD with explicit sparsification min || X − udvT ||F + nnz(u ) + nnz(v) u ,v – rank-one approximation (Zhang, Zha, Simon 2003) – Minimize a bound – deflation • Direct sparse PCA, on covariance matrix S u = max u T Su = max Tr( Suu T ) = max Tr( SU ) s.t. Tr(U ) = 1, nnz(U ) ≤ k 2 , U f 0, rank(U ) = 1 (D’Aspremont, Gharoui, Jordan,Lancriet, 2004) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 28

- 29. Sparse PCA Summary • Many different approaches – Truncation, discretization – L1 Constraint – Direct sparsification – Other approaches • Sparse Matrix factorization in general – L1 constraint • Many questions – Orthogonality – Unique solution, global solution PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 29

- 30. PCA: Further Generalizations • Generalization to Exponential Family – (Collins, Dasgupta, Schapire, 2001) • Maximum Margin Factorization (Srebro, Rennie, Jaakkola, 2004) – Collaborative filtering – Input Y is binary – Hard margin Yia X ia ≥ 1, ∀ia ∈ S – Soft margin min || X ||Σ + c ∑ max(0,1 − Y ia∈S ia X ia ) X = UV T , || X ||= 1 (|| U ||2 + || V ||2 ) 2 Fro Fro PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 30

- 31. Column Partitioned Matrix Factorizations n n n 6 7 8 64748 4 14 2 64748 4k 4 n1 + L + nk = n X = ( x1,L xn ) = ( x1 L xn1 , xn1 +1 L xn2 , L, xnk −1 +1 L xn ) (Zhang & Zha, 2001) • Column Partitioned Data Matrix • Partitions are generate by clustering (Dhillon & Modha, 2001) • Centroid matrix U = (u1 Luk ) (Park, Jeon & Rosen, 2003) – uk is centroid – Fix U, compute V min || X − UV T ||2 F V = X T U (U T U ) −1 • Represent each partition by a SVD. k k 6 74 41 8 4l 8 6 74 – Pick leading Us to form U U = (U1,LU l ) = (u11) Luk1) , L, u1l ) Lukl ) ) ( ( ( ( – Fix U, compute V 1 l (Castelli, Thomasian & Li 2003) • Several other variations (Zeimpekis & Gallopoulos, 2004) PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 31

- 32. Two-dimensional SVD • Large number of data objects are 2-D: images, maps • Standard method: – convert (re-order) each image as a 1D vector – collect all 1D vectors into a single (big) matrix – apply SVD on the big matrix • 2D-SVD is developed for 2D objects – Extension of standard SVD – Keeping the 2D characteristics – Improves quality of low-dimensional approximation – Reduces computation, storage PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 32

- 33. Linearize a 2D object into 1D object ⎡0.0⎤ ⎢ 0.5⎥ ⎢ ⎥ ⎢0.7⎥ ⎢10 ⎥ ⎢. ⎥ ⎢M ⎥ ⎢ ⎥ ⎢0.8⎥ ⎢0.2⎥ ⎢ ⎥ ⎣0.0⎦ Pixel vector PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 33

- 34. SVD and 2D-SVD SVD X = ( x1 , x2 ,L, xn ) Eigenvectors of XX T and X T X X = UΣV T Σ =UT X V 2D-SVD { A} = { A1 , A2 ,L, An } Eigenvectors of F= ∑ i ( Ai − A )( Ai − A )T row-row covariance G= ∑ i ( Ai − A )T ( Ai − A ) column-column cov Ai = UM iV T M i = U Ai V T PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 34

- 35. 2D-SVD { A} = { A1 , A2 ,L, An } assume A =0 F = ∑ Ai Ai = ∑ λ u u T T row-row cov: k k k i G = ∑ Ai Ai = ∑ ζ k uk u T T col-col cov: k i k =1 Bilinear U = (u1 , u2 ,L, uk ) subspace V = (v1 , v2 ,L, vk ) M i = U Ai V T Ai = UM iV , i = 1,L, n T Ai ∈ ℜr×c ,U ∈ ℜr×k ,V ∈ ℜc×k , M i ∈ ℜk×k PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 35

- 36. 2D-SVD Error Analysis p SVD: min || X − UΣV T ||2 = ∑ σ i2 i = k +1 Ai ≈ LM i RT , Ai ∈ R r×c , L ∈ R r×k , R ∈ R c×k , M i ∈ R k×k n c min J1 = ∑i =1 || Ai − LM i ||2 = ∑ζ j = k +1 j n r min J 2 = ∑i =1 || Ai − M i RT ||2 = ∑λ j = k +1 j n r c min J 3 = ∑i =1 || Ai − LM i RT ||2 ≅ ∑ λ + ∑ζ j = k +1 j j = k +1 j n r min J 4 = ∑i =1 || Ai − LM i LT ||2 ≅ 2 ∑λ j = k +1 j PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 36

- 37. Temperature maps (January over 100 years) Reconstruction Errors SVD/2DSVD=1.1 Storages SVD/2DSVD=8 PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 37

- 38. Reconstructed image SVD 2dSVD SVD (K=15), storage 160560 2DSVD (K=15), storage 93060 PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 38

- 39. 2D-SVD Summary • 2DSVD is extension of standard SVD • Provides optimal solution for 4 representations for 2D images/maps • Substantial improvements in storage, computation, quality of reconstruction • Capture 2D characteristics PCA & Matrix Factorization for Learning, ICML 2005, Chris Ding 39

- 40. Part 1.C. K-means Clustering ⇔ Principal Component Analysis (Equivalence between PCA and K-means) 40 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 41. K-means clustering • Also called “isodata”, “vector quantization” • Developed in 1960’s (Lloyd, MacQueen, Hatigan, etc) • Computationally Efficient (order-mN) • Widely used in practice – Benchmark to evaluate other algorithms Given n points in m-dim: X = ( x1 , x2 ,L, xn ) T K K-means objective min J K = ∑∑ k =1 i∈C k || xi − ck ||2 41 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

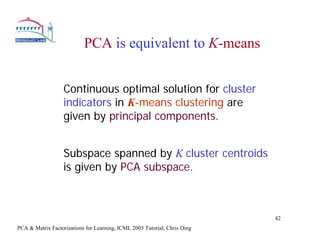

- 42. PCA is equivalent to K-means Continuous optimal solution for cluster indicators in K-means clustering are given by principal components. Subspace spanned by K cluster centroids is given by PCA subspace. 42 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

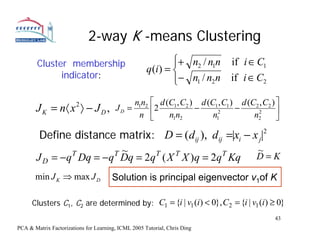

- 43. 2-way K -means Clustering Cluster membership ⎧+ n2 / n1n ⎪ if i ∈ C1 q (i ) = ⎨ indicator: ⎪− n1 / n2 n if i ∈ C2 ⎩ nn ⎡ d (C1 , C2 ) d (C1 , C1 ) d (C2 , C2 ) ⎤ J K = n〈 x 〉 − J D , 2 JD = 1 2 ⎢2 n n − 2 − 2 ⎥ n ⎣ 1 2 n1 n2 ⎦ Define distance matrix: D = (dij ), dij =|xi − x j|2 T ~ ~ J D = −q Dq = −q Dq = 2q ( X X )q = 2q Kq D = K T T T T min J K ⇒ max J D Solution is principal eigenvector v1of K Clusters C1, C2 are determined by: C1 = {i | v1 (i ) < 0}, C2 = {i | v1 (i ) ≥ 0} 43 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 44. A simple illustration 44 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 45. DNA Gene Expression File for Leukemia Using v1 , tissue samples separated into 2 clusters, 3 errors Do one more K- means, reduce to 1 error 45 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 46. Multi-way K-means Clustering Unsigned Cluster membership indicators h1, …, hK: C1 C2 C3 ⎡1 0 0⎤ ⎢1 ⎥ 0 0⎥ ⎢ = (h1 , h2 , h3 ) ⎢0 1 0⎥ ⎢ ⎥ ⎣0 0 1⎦ 46 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 47. Multi-way K-means Clustering K K ∑ ∑ ∑ ∑ ∑ 1 JK = xi − 2 xiT x j = xi2 − hk X T Xhk T i n i , j∈C k i k =1 k k =1 (Unsigned) Cluster indicators H=(h1, …, hK) J K = ∑ xi2 − Tr ( H k X T XH k ) T i K Regularized Relaxation Redundancy: ∑ k =1 n1/ 2 hk = e k Transform h1, …, hK to q1 - qk via orthogonal matrix T (q1 ,..., qk ) = (h1 ,L, hk )T Qk = H kT q1 = e /n1/2 47 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 48. Multi-way K-means Clustering maxTr[Qk −1 ( X T X )Qk −1 ] T Qk −1 = (q2 ,..., qk ) Optimal solutions of q2 … qk are given by principal components v2 … vk. JK is bounded below by total variance minus sum of K eigenvalues of covariance: K −1 nx2 − ∑ k =1 λk < min J K < n x 2 48 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 49. Consistency: 2-way and K-way approaches ⎛ n2 / n − n1 / n ⎞ Orthogonal Transform: T =⎜ ⎟ ⎜ n /n n2 / n ⎟ ⎝ 1 ⎠ T transforms (h1, h2) to (q1,q2): h1 = (1L1,0L0) , h2 = (0L0,1L1) T T a= n2 n1n q2 = (a,L, a,−b,L,−b) T n1 q1 = (1L1) , T b= n2 n Recover the original 2-way cluster indicator 49 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 50. Test of Lower bounds of K-means clustering | J opt − J LB | J opt Lower bound is within 0.6-1.5% of the optimal value 50 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 51. Cluster Subspace (spanned by K centroids) = PCA Subspace Given a data point x, P= ∑k T ck c k project x into the cluster subspace Centroid is given by ck = ∑ h (i) x = Xh k k i k P= ∑c c k T k k =X ∑h h k T k k XT = X ∑v v k T k k XT = ∑λ u u k T k k k PK −means = ∑k λk u k u k T ⇔ ∑ k uk uk ≡ PPCA T PCA automatically project into cluster subspace PCA is unsupervised version of LDA 51 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 52. Effectiveness of PCA Dimension Reduction 52 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 53. Kernel K-means Clustering Kernal K-means objective: xi → φ ( xi ) K φ min J K = ∑∑ k =1 i∈Ck || φ ( xi ) − φ (ck ) ||2 K ∑ ∑ ∑ 1 = | φ ( xi ) |2 − φ ( xi )T φ ( x j ) i n k =1 k i , j∈Ck 1 K Kernal K-means max J K = ∑ ∑ φ ( xi ),φ ( x j ) φ k =1 nk i , j∈Ck 53 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

- 54. Kernel K-means clustering is equivalent to Kernal PCA Continuous optimal solution for cluster indicators are given by Kernal PCA components Subspace spanned by K cluster centroids are given by Kernal PCA principal subspace 54 PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding

![Multi-way K-means Clustering

maxTr[Qk −1 ( X T X )Qk −1 ]

T

Qk −1 = (q2 ,..., qk )

Optimal solutions of q2 … qk are given by

principal components v2 … vk.

JK is bounded below by total variance minus

sum of K eigenvalues of covariance:

K −1

nx2 − ∑

k =1

λk < min J K < n x 2

48

PCA & Matrix Factorizations for Learning, ICML 2005 Tutorial, Chris Ding](https://image.slidesharecdn.com/principalcomponentanalysisandmatrixfactorizationsforlearningpart1-ding-icml2005tutorial-2005-110506072541-phpapp01/85/Principal-component-analysis-and-matrix-factorizations-for-learning-part-1-ding-icml-2005-tutorial-2005-48-320.jpg)