Step-by-Step Introduction to Apache Flink

- 1. Step-By-Step Introduction to Apache Flink [Setup, Configure, Run, Tools] Slim Baltagi @SlimBaltagi Washington DC Area Apache Flink Meetup November 19th , 2015

- 2. 2 For an overview of Apache Flink, see slides at http://www.slideshare.net/sbaltagi Gelly Table ML SAMOA DataSet (Java/Scala/Python) Batch Processing DataStream (Java/Scala) Stream Processing HadoopM/R Local Single JVM Embedded Docker Cluster Standalone YARN, Tez, Mesos (WIP) Cloud Google’s GCE Amazon’s EC2 IBM Docker Cloud, … GoogleDataflow Dataflow(WiP) MRQL Table Cascading Runtime Distributed Streaming Dataflow Zeppelin DEPLOYSYSTEMAPIs&LIBRARIESSTORAGE Files Local HDFS S3 Tachyon Databases MongoDB HBase SQL … Streams Flume Kafka RabbitMQ … Batch Optimizer Stream Builder

- 3. 3 Agenda 1. How to setup and configure your Apache Flink environment? 2. How to use Apache Flink tools? 3. How to run the examples in the Apache Flink bundle? 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 5. How to write your Apache Flink program in an IDE?

- 4. 4 1. How to setup and configure your Apache Flink environment? 1.1 Local (on a single machine) 1.2 Flink in a VM image (on a single machine) 1.3 Flink on Docker 1.4 Standalone Cluster 1.5 Flink on a YARN Cluster 1.6 Flink on the Cloud

- 5. 5 1.1 Local (on a single machine) Flink runs on Linux, OS X and Windows. In order to execute a program on a running Flink instance (and not from within your IDE) you need to install Flink on your machine. The following steps will be detailed for both Unix-Like (Linux, Mac OS X) as well as Windows environments: 1.1.1 Verify requirements 1.1.2 Download the Flink binary package 1.1.3 Unpack the downloaded archive 1.1.4 Configure 1.1.5 Start a local Flink instance 1.1.6 Validate Flink is running 1.1.7 Run a Flink example 1.1.8 Stop the local Flink instance

- 6. 6 1.1 Local (on a single machine) 1.1.1 Verify requirements The machine that Flink will run on, must have Java 1.7.x or higher installed. To check the Java version installed issue the command: java –version. The out of the box configuration will use your default Java installation. Optional: If you want to manually override the Java runtime to use, set the JAVA_HOME environment variable in Unix-like environment. To check if JAVA_HOME is set, issue the command: echo $JAVA_HOME. If needed, follow the instructions for installing Java and setting JAVA_HOME on a Unix system here: https ://docs.oracle.com/cd/E19182-01/820-7851/inst_set_jdk_korn_bash_t/ index.html

- 7. 7 1.1 Local (on a single machine) In Windows environment, check the correct installation of Java by issuing the following command: java –version. The bin folder of your Java Runtime Environment must be included in Window’s %PATH% variable. If needed, follow this guide to add Java to the path variable. http://www.java.com/en/download/help/path.xml If needed, follow the instructions for installing Java and setting JAVA_HOME on a Windows system here: https://docs.oracle.com/cd/E19182-01/820-7851/inst_set_jdk_windows_t/ind

- 8. 8 1.1 Local (on a single machine) 1.1.2 Download the Flink binary package The latest stable release of Apache Flink can be downloaded from http://flink.apache.org/downloads.html For example: In Linux-Like environment, run the following command: wget https://www.apache.org/dist/flink/flink-0.10.0/flink- 0.10.0-bin-hadoop1-scala_2.10.tgz Which version to pick? • You don’t have to install Hadoop to use Flink. • But if you plan to use Flink with data stored in Hadoop, pick the version matching your installed Hadoop version. • If you don’t want to do this, pick the Hadoop 1 version.

- 9. 9 1.1 Local (on a single machine) 1.1.3 Unpack the downloaded .tgz archive Example: $ cd ~/Downloads # Go to download directory $ tar -xvzf flink-*.tgz # Unpack the downloaded archive $ cd flink-0.10.0 $ ls –l

- 10. 10 1.1 Local (on a single machine) 1.1.4. Configure The resulting folder contains a Flink setup that can be locally executed without any further configuration. flink-conf.yaml under flink-0.10.0/conf contains the default configuration parameters that allow Flink to run out-of-the-box in single node setups.

- 11. 11 1.1 Local (on a single machine) 1.1.5. Start a local Flink instance: • Given that you have a local Flink installation, you can start a Flink instance that runs a master and a worker process on your local machine in a single JVM. • This execution mode is useful for local testing. • On UNIX-Like system you can start a Flink instance as follows: cd /to/your/flink/installation ./bin/start-local.sh

- 12. 12 1.1 Local (on a single machine) 1.1.5. Start a local Flink instance: On Windows you can either start with: • Windows Batch Files by running the following commands cd C:toyourflinkinstallation .binstart-local.bat • or with Cygwin and Unix Scripts: start the Cygwin terminal, navigate to your Flink directory and run the start-local.sh script $ cd /cydrive/c cd flink $ bin/start-local.sh

- 13. 13 1.1 Local (on a single machine) The JobManager (the master of the distributed system) automatically starts a web interface to observe program execution. It runs on port 8081 by default (configured in conf/flink-config.yml). http://localhost:8081/ 1.1.6 Validate that Flink is running You can validate that a local Flink instance is running by: • Issuing the following command: $ jps jps: java virtual machine process status tool • Looking at the log files in ./log/ $ tail log/flink-*-jobmanager-*.log • Opening the JobManager’s web interface at $ open http://localhost:8081

- 14. 14 1.1 Local (on a single machine) 1.1.7 Run a Flink example • On UNIX-Like system you can run a Flink example as follows: cd /to/your/flink/installation ./bin/flink run ./examples/WordCount.jar • On Windows Batch Files, open a second terminal and run the following commands” cd C:toyourflinkinstallation .binflink.bat run .examplesWordCount.jar 1.1.8 Stop local Flink instance •On UNIX you call ./bin/stop-local.sh •On Windows you quit the running process with Ctrl+C

- 15. 15 1.2 VM image (on a single machine)

- 16. 16 1.2 VM image (on a single machine) Please send me an email to [email protected] for a link from which you can download a Flink Virtual Machine. The Flink VM, which is approximately 4 GB, is from data Artisans http://data-artisans.com/ It currently has Flink 0.10.0, Kafka, IDEs (IntelliJ, Eclipse), Firefox, … It will contain soon the FREE training from data Artisans for Flink 0.10.0 Meanwhile, an older version, based on 0.9.1, of this FREE training is is available from http ://dataartisans.github.io/flink-training/

- 17. 17 1.3 Docker Docker can be used for local development. Container based virtualization advantages: lightweight and portable; build once run anywhere ease of packaging applications automated and scripted isolated Often resource requirements on data processing clusters exhibit high variation. Elastic deployments reduce TCO (Total Cost of Ownership).

- 18. 18 1.3 Flink on Docker Apache Flink cluster deployment on Docker using Docker-Compose by Simons Laws from IBM. Talk at the Flink Forward in Berlin on October 12, 2015. Slides: http://www.slideshare.net/FlinkForward/simon-laws-apache-flink-cluster-d dockercompose Video recording (40’:49): https://www.youtube.com/watch?v=CaObaAv9tLE The talk: • Introduces the basic concepts of container isolation exemplified on Docker • Explain how Apache Flink is made elastic using Docker-Compose. • Show how to push the cluster to the cloud exemplified on the IBM Docker Cloud.

- 19. 19 1.3 Flink on Docker Apache Flink dockerized: This is a set of scripts to create a local multi-node Flink cluster, each node inside a docker container. https://hub.docker.com/r/gustavonalle/flink/ Using docker to setup a development environment that is reproducible. Apache Flink cluster deployment on Docker using Docker-Compose https://github.com/apache/flink/tree/master/flink-contrib/d Web resources to learn more about Docker http://www.flinkbigdata.com/component/tags/tag/47-dock

- 20. 20 1.4 Standalone Cluster See quick start - Cluster https://ci.apache.org/projects/flink/flink-docs-rele setup See instructions on how to run Flink in a fully distributed fashion on a static (possibly heterogeneous) cluster. This involves two steps: • Installing and configuring Flink • Installing and configuring the Hadoop Distributed File System (HDFS) https://ci.apache.org/projects/flink/flink-docs-master/setup/cluster_setup.ht

- 21. 21 1.5 Flink on a YARN Cluster You can easily deploy Flink on your existing YARN cluster: 1. Download the Flink Hadoop2 package: Flink with Hadoop 2 2. Make sure your HADOOP_HOME (or YARN_CONF_DIR or HADOOP_CONF_DIR) environment variable is set to read your YARN and HDFS configuration. – Run the YARN client with: ./bin/yarn-session.sh You can run the client with options -n 10 -tm 8192 to allocate 10 TaskManagers with 8GB of memory each. For more detailed instructions, please check out the documentation: https://ci.apache.org/projects/flink/flink-docs-master/setu yarn_setup.html

- 22. 22 1.6 Flink on the Cloud 1.6.1 Google Compute Engine (GCE) 1.6.2 Amazon EMR

- 23. 23 1.6 Cloud 1.6.1 Google Compute Engine Free trial for Google Cloud Engine: https://cloud.google.com/free-trial/ Enjoy your $300 in GCE for 60 days! Now, how to setup Flink with Hadoop 1 or Hadoop 2 on top of a Google Compute Engine cluster? Google’s bdutil starts a cluster and deploys Flink with Hadoop. To get started, just follow the steps here: https ://ci.apache.org/projects/flink/flink-docs-master/se

- 24. 24 1.6 Cloud 1.6.2 Amazon EMR Amazon Elastic MapReduce (Amazon EMR) is a web service providing a managed Hadoop framework. • http://aws.amazon.com/elasticmapreduce/ • http://docs.aws.amazon.com/ElasticMapReduce/latest/DeveloperGuide/emr-what-is emr.html Example: Use Stratosphere with Amazon Elastic MapReduce, February 18, 2014 by Robert Metzger https://flink.apache.org/news/2014/02/18/amazon-elastic-mapreduce-cloud-yarn.html Use pre-defined cluster definition to deploy Apache Flink using Karamel web app http://www.karamel.io/ Getting Started – Installing Apache Flink on Amazon EC2 by Kamel Hakimzadeh. Published on October 12, 2015 https://www.youtube.com/watch?v=tCIA8_2dR14

- 25. 25 Agenda 1. How to setup and configure your Apache Flink environment? 2. How to use Apache Flink tools? 3. How to run the examples in the Apache Flink bundle? 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 5. How to write your Apache Flink program in an IDE?

- 26. 26 2. How to use Apache Flink tools? 2.1 Command-Line Interface (CLI) 2.2 Web Submission Client 2.3 Job Manager Web Interface 2.4 Interactive Scala Shell 2.5 Apache Zeppelin Notebook

- 27. 27 2.1 Command-Line Interface (CLI) Flink provides a CLI to run programs that are packaged as JAR files, and control their execution. bin/flink has 4 major actions • run #runs a program. • info #displays information about a program. • list #lists scheduled and running jobs • cancel #cancels a running job. Example: ./bin/flink info ./examples/KMeans.jar See CLI usage and related examples: https://ci.apache.org/projects/flink/flink-docs-master/apis/cli.html

- 28. 28 2.2 Web Submission Client

- 29. 29 2.2 Web Submission Client Flink provides a web interface to: • Upload programs • Execute programs • Inspect their execution plans • Showcase programs • Debug execution plans • Demonstrate the system as a whole The web interface runs on port 8080 by default. To specify a custom port set the webclient.port property in the ./conf/flink.yaml configuration file.

- 30. 30 2.2 Web Submission Client Start the web interface by executing: ./bin/start-webclient.sh Stop the web interface by executing: ./bin/stop-webclient.sh • Jobs are submitted to the JobManager specified by jobmanager.rpc.address and jobmanager.rpc.port • For more details and further configuration options, please consult this webpage: https://ci.apache.org/projects/flink/flink-docs-release-0.10/setup/config.html# webclient

- 31. 31 2.3 Web Submission Client The JobManager (the master of the distributed system) starts a web interface to observe program execution. It runs on port 8081 by default (configured in conf/flink-config.yml). Open the JobManager’s web interface at http://localhost:8081 • jobmanager.rpc.port 6123 • jobmanager.web.port 8081

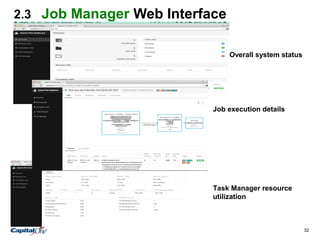

- 32. 32 2.3 Job Manager Web Interface Overall system status Job execution details Task Manager resource utilization

- 41. 41 Agenda 1. How to setup and configure your Apache Flink environment? 2. How to use Apache Flink tools? 3. How to run the examples in the Apache Flink bundle? 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 5. How to write your Apache Flink program in an IDE?

- 46. 46 3.1 How to run the examples in the Apache Flink bundle? 3.1.2 Where are the related source codes? If you don't want to import the whole Flink project just for playing around with the examples, you can: • Create an empty maven project. This script will automatically set everything up for you: $ curl http://flink.apache.org/q/quickstart.sh | bash • Import the "quickstart" project into Eclipse or IntelliJ. It will download all dependencies and package everything correctly. • If you want to use an example there, just copy the Java file into the "quickstart" project.

- 49. 49 Run K-Means example 1. Generate Input Data Flink contains a data generator for K-Means that has the following arguments (arguments in [] are optional): -points <num> -k <num clusters> [-output <output-path>] [- stddev <relative stddev>] [-range <centroid range>] [-seed <seed>] Go to the Flink root installation: $ cd flink-0.10.0 Create a new directory that will contains the data: $ mkdir kmeans $ cd kmeans

- 50. 50 Run K-Means example Create some data using Flink's tool: java -cp ../examples/KMeans.jar:../lib/flink-dist-0.10.0.jar org.apache.flink.examples.java.clustering.util.KMeansD ataGenerator -points 500 -k 10 -stddev 0.08 -output `pwd` The directory should now contain the files "centers" and "points".

- 51. 51 Run K-Means example Continue following the instructions on Quick Start: Run K-Means Example as outlined here: https://ci.apache.org/projects/flink/flink-docs-releas Happy Flinking!

- 52. 52 Agenda 1. How to setup and configure your Apache Flink environment? 2. How to use Apache Flink tools? 3. How to run the examples in the Apache Flink bundle? 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 5. How to write your Apache Flink program in an IDE?

- 53. 53 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 4.1 How to set up your IDE (IntelliJ IDEA)? 4.2 How to setup your IDE (Eclipse)? Flink uses mixed Scala/Java projects, which pose a challenge to some IDEs Minimal requirements for an IDE are: • Support for Java and Scala (also mixed projects) • Support for Maven with Java and Scala

- 54. 54 4.1 How to set up your IDE (IntelliJ IDEA)?IntelliJ IDEA supports Maven out of the box and offers a plugin for Scala development. IntelliJ IDEA Download https ://www.jetbrains.com/idea/download/ IntelliJ Scala Plugin http://plugins.jetbrains.com/plugin/?id=1347 Check out Setting up IntelliJ IDEA guide for details https://github.com/apache/flink/blob/master/docs/internals/ide_setup.md#intel Screencast: Run Apache Flink WordCount from IntelliJ https://www.youtube.com/watch?v=JIV_rX- OIQM

- 55. 55 4.2 How to setup your IDE (Eclipse)? • For Eclipse users, Apache Flink committers recommend using Scala IDE 3.0.3, based on Eclipse Kepler. • While this is a slightly older version, they found it to be the version that works most robustly for a complex project like Flink. One restriction is, though, that it works only with Java 7, not with Java 8. • Check out how to setup Eclipse docs: https://github.com/apache/flink/blob/master/docs/internals/ide_setup.md #eclipse

- 56. 56 Agenda 1. How to setup and configure your Apache Flink environment? 2. How to use Apache Flink tools? 3. How to run the examples in the Apache Flink bundle? 4. How to set up your IDE (IntelliJ IDEA or Eclipse) for Apache Flink? 5. How to write your Apache Flink program in an IDE?

- 57. 57 5. How to write your Apache Flink program in an IDE? 5.1 How to write a Flink program in an IDE? 5.2 How to generate a Flink project with Maven? 5.3 How to import the Flink Maven project into IDE 5.4 How to use logging? 5.5 FAQs and best practices related to coding

- 58. 58 5.1 How to write a Flink program in an IDE? The easiest way to get a working setup to develop (and locally execute) Flink programs is to follow the Quick Start guide: https://ci.apache.org/projects/flink/flink-docs-master/quickstart/java_api_quickstart.html https://ci.apache.org/projects/flink/flink-docs-master/quickstart/scala_api_quickstart.html It uses Maven archetype to configure and generate a Flink Maven project. This will save you time dealing with transitive dependencies! This Maven project can be imported into your IDE.

- 59. 59 5.2. How to generate a skeleton Flink project with Maven? Generate a skeleton flink-quickstart-java Maven project to get started with no need to manually download any .tgz or .jar files Option 1: $ curl http://flink.apache.org/q/quickstart.sh | bash A sample quickstart Flink Job will be created: • Switch into the directory using: cd quickstart • Import the project there using your favorite IDE (Import it as a maven project) • Build a jar inside the directory using: mvn clean package • You will find the runnable jar in quickstart/target

- 60. 60 5.2. How to generate a skeleton Flink project with Maven? Option 2: Type the command below to create a flink- quickstart-java or flink-quickstart-scala project and specify Flink version mvn archetype:generate / -DarchetypeGroupId=org.apache.flink / -DarchetypeArtifactId=flink-quickstart-java / -DarchetypeVersion=0.10.0 you can also put “quickstart- scala” here you can also put “quickstart- scala” here or “0.1.0-SNAPSHOT”or “0.1.0-SNAPSHOT”

- 61. 61 5.2. How to generate a skeleton Flink project with Maven? The generated projects are located in a folder called flink-java-project or flink-scala-project. In order to test the generated projects and to download all required dependencies run the following commands (change flink-java-project to flink-scala-project for Scala projects) • cd flink-java-project • mvn clean package Maven will now start to download all required dependencies and build the Flink quickstart project.

- 62. 62 5.3 How to import the Flink Maven project into IDE The generated Maven project needs to be imported into your IDE: IntelliJ: • Select “File” -> “Import Project” • Select root folder of your project • Select “Import project from external model”, select “Maven” • Leave default options and finish the import Eclipse: • Select “File” -> “Import” -> “Maven” -> “Existing Maven Project” • Follow the import instructions

- 63. 63 5.4 How to use logging? The logging in Flink is implemented using the slf4j logging interface. log4j is used as underlying logging framework. Log4j is controlled using property file usually called log4j.properties. You can pass to the JVM the filename and location of this file using the Dlog4j.configuration= parameter. The loggers using slf4j are created by calling import org.slf4j.LoggerFactory import org.slf4j.Logger Logger LOG = LoggerFactory.getLogger(Foobar.class) You can also use logback instead of log4j. https://ci.apache.org/projects/flink/flink-docs-release-0.9/internals/logging.html

- 64. 64 5.5 FAQs & best practices related to coding Errors http://flink.apache.org/faq.html#errors Usage http://flink.apache.org/faq.html#usage Flink APIs Best Practices https://ci.apache.org/projects/flink/flink-docs- master/apis/best_practices.html Thanks!

Editor's Notes

- #6: The following steps assume a UNIX-like environment. For Windows, see Flink on Windows: https://ci.apache.org/projects/flink/flink-docs-master/setup/local_setup.html#flink-on-windows

- #7: The following steps assume a UNIX-like environment. For Windows, see Flink on Windows: https://ci.apache.org/projects/flink/flink-docs-master/setup/local_setup.html#flink-on-windows

- #8: For Windows, see Flink on Windows: https://ci.apache.org/projects/flink/flink-docs-master/setup/local_setup.html#flink-on-windows

- #9: For Windows, see Flink on Windows: https://ci.apache.org/projects/flink/flink-docs-master/setup/local_setup.html#flink-on-windows

- #60: This is Slide 5 of http://www.slideshare.net/robertmetzger1/apache-flink-hands-on

- #61: This is Slide 5 of http://www.slideshare.net/robertmetzger1/apache-flink-hands-on

- #63: We pass the filename and location of this file using the -Dlog4j.configuration= parameter to the JVM.

- #64: We pass the filename and location of this file using the -Dlog4j.configuration= parameter to the JVM.

![Step-By-Step Introduction to

Apache Flink

[Setup, Configure, Run, Tools]

Slim Baltagi @SlimBaltagi

Washington DC Area Apache Flink Meetup

November 19th

, 2015](https://image.slidesharecdn.com/step-by-step-introduction-to-apache-flink-by-slim-baltagi-151120114353-lva1-app6892/85/Step-by-Step-Introduction-to-Apache-Flink-1-320.jpg)

![49

Run K-Means example

1. Generate Input Data

Flink contains a data generator for K-Means that has the

following arguments (arguments in [] are optional):

-points <num> -k <num clusters> [-output <output-path>] [-

stddev <relative stddev>] [-range <centroid range>] [-seed

<seed>]

Go to the Flink root installation:

$ cd flink-0.10.0

Create a new directory that will contains the data:

$ mkdir kmeans

$ cd kmeans](https://image.slidesharecdn.com/step-by-step-introduction-to-apache-flink-by-slim-baltagi-151120114353-lva1-app6892/85/Step-by-Step-Introduction-to-Apache-Flink-49-320.jpg)