LDA入門

- 1. Latent Dirichlet Allocation入門 @tokyotextmining 坪坂 正志

- 2. 内容 • NLPで用いられるトピックモデルの代表である LDA(Latent Dirichlet Allocation)について紹介 する • 機械学習ライブラリmalletを使って、LDAを使 う方法について紹介する

- 3. Why LDA • 白鵬が単独首位 琴欧洲敗れる

- 4. Why LDA • 白鵬が単独首位 琴欧洲敗れる • 人は上の文を見て相撲に関係する文である ことを理解できる – 文中に相撲という単語は出てこないのにもかか わらず

- 5. Why LDA • 白鵬が単独首位 琴欧洲敗れる • 人は上の文を見て相撲に関係する文である ことを理解できる – 文中に相撲という単語は出てこないのにもかか わらず • 単語は独立に出現しているのではなく、潜在 的なトピックを持ち、同じトピックを持つ単語 は同じ文章に出現しやすい

- 6. Why LDA • 文章/単語のトピックを推定すると何の役にた つか? • 文章分類 • 次元削減 • 言語モデル – 情報検索[Wei and Croft 2006]

- 7. 概要 • LDA – 確率モデル – 推論アルゴリズム – ハイパーパラメータの調整 – 並列化 – 高速化 • Mallet – Malletを使ったLDA

- 8. 文章生成モデル • トピック数Tはあらかじめ決める • 各トピックごとに単語出現確率 を生成 – ディリクレ分布から生成 ~Dir() • 文章ごとにトピック確率 を生成する – ディリクレ分布から生成 ~Dir() – 各文章において以下のように単語を生成していく • ~Multi( )と単語のトピックを生成する • ~Multi( )とトピックに応じた確率で単語を生成

- 9. パラメータは未知 • 実際にはパラメータは推定する必要がある [Steyvers and Griffiths 2007]より

- 10. 推論アルゴリズム • Bleiの元論文[Blei+ 2003]では変分ベイズを 使ったパラメータ推定を行っている • しかし、実用的には後にでたGibbs samplerを 使ったパラメータ推定法がよく用いられる [Griffiths and Steyvers 2004] – 一回当たりの計算が軽い – 数式が簡単(実装コストが低い) • 他にもcollapsed変分ベイズという方法もある [Teh+ 2007, Asuncion+ 2009]

- 11. Collapsed Gibbs sampler • 文章中の各単語に対して、初期状態としてランダムなトピック を割り当てる • 各単語に関してトピックを逐次更新する – 直感的には(文章中でのトピックtの割合) * (その単語におけるトピッ クtの割合) – 一つの文章では同じトピックがでやすい – 単語ごとに出やすいトピックと出にくいトピックがある | : 文章dに出現するトピックtに属する単語の合計 | : トピックtに属する単語wの合計 ⋅| : トピックtに属する単語の合計

- 12. ハイパーパラメータの影響 • 多くの論文では, を天下り的に設定するこ とが多い – 実際は, の値によってPerplexityなど変わってく る [Asuncion+ 2009] • また、 = 0.1などと各トピックの事前パラメー タはすべて同じにすることが多い

- 13. でも実際は • の値をトピックごとに差を設けることにより、 トピックの出やすさを表現できる [Wallach+ 2009]より

- 14. 他のストップワードへの対応方法 [Wilson and Chew 2010] • サンプリングの際の| などの計算で単語ご とに異なる重みづけを用いて計算する – 重み付けにはtf-idf, PMIなどを用いる

- 15. どうやって推定するか [Wallach+ 2009]より • 階層的なモデルを立てる • 一定間隔でハイパーパラメータを最適化する – 実用上はこちらが階層的モデルと比べ精度も変 わらず、高速 – Malletではこちらを採用

- 16. 並列化 • マルチコアとかグリッドとか流行ってる • LDAにおいても並列化しようという話はいくつ かある – マルチコア[Newman+ 07] – グリッド (Mapreduce/MPIを使う)[Wang+ 2009] – GPGPU [Yan+ 2009]

- 17. 並列化 • 更新式

- 18. 並列化 • 更新式 文章ごとに独立 • 文章を各プロセッサに分散して配置する • | , .| は各プロセッサで独立に持つ – 定期的にグローバルな値を計算し直し、同期する – グローバルな値をmemcachedに持つという話も ある[Smola and Narayanamurthy 2010]

- 19. 並列化 • 1プロセッサあたりの時間/空間計算量 – プロセッサの数をPとする – 各プロセッサには文章が均一に割り当てられて いるとする 時間計算量 空間計算量 LDA O(NT) O(N + (D + W) * T) PLDA O(NT/P) O(N / P + (D / P + W) * T)

- 20. 高速化 • トピック数Tが多くなっていくとナイーブな実装 だと、一回のGibbs samplerにかかる時間が O(T)であるため、計算にかかるコストが大きい • 効率的なサンプリング方法が存在[Yao+ 2009]

- 22. サンプリングの高速化 • 一様分布 ∼ ( + + )から乱数を生成 • 経験的に90%以上がの部分に落ちる • トピック-単語カウントに相当する項に関して 高速なサンプリングができればよい

- 23. サンプリングの高速化 • 上の式に従って高速にサンプリングするため に| の降順でトピックからサンプリングされ るかどうかを見ていく

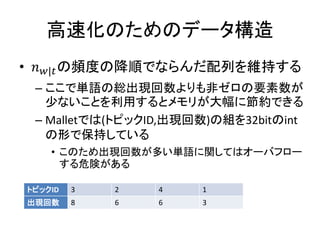

- 24. 高速化のためのデータ構造 • | の頻度の降順でならんだ配列を維持する – ここで単語の総出現回数よりも非ゼロの要素数が 少ないことを利用するとメモリが大幅に節約できる – Malletでは(トピックID,出現回数)の組を32bitのint の形で保持している • このため出現回数が多い単語に関してはオーバフロー する危険がある トピックID 3 2 4 1 出現回数 8 6 6 3

- 25. 高速化の効果 データセットにはNIPS Dataset(1500文章, 約190万トークン) を使用 plda(code.google.com/p/plda)とmalletでトピック数を変えたときの 実行時間を測定 反復回数は両方ともに100回とした 3500 3000 2500 2000 実行時間(sec) plda 1500 mallet 1000 500 0 0 200 400 600 800 1000 1200 # of topics

- 26. 高速化の効果 • 前のグラフを対数領域でプロットしたもの 10000 1000 plda 100 mallet 10 1 1 10 100 1000

- 27. Malletとは • MAchine Learning for LanguagE Toolkit • Javaベースの統計的自然言語処理、文章分 類、トピックモデリングなどのパッケージ • Andrew McCallumを中心として開発が行われ ている

- 28. Instance • Malletにおいてデータ一つ一つはInstanceと いうオブジェクトで表現される – 文章集合中の文章に相当する • 4つのメンバ変数を持つ – Name (Instanceの名前 今回は使わない) – Data (入力データ) – Target/Label (出力 今回は使わない) – Source (Instanceのソース)

- 29. Iterator • InstanceにはIteratorを経由して、アクセスされ る – XML,ディレクトリ配下のファイルなど様々な入力 形式に対応できる • 大抵の場合はCsvIteratorが使える

- 30. CsvIterator CsvIterator(String fileName, Pattern lineRegex, int dataGroup, int targetGroup, int uriGroup) • 対象のfileNameの各行に対してlineRegexで マッチを行い、data,target,uri(name)に指定さ れたグループの値を代入する • Ex: name label dataと空白区切りで並んでい るデータから読み取る場合 – new CsvIterator(fileName, "(¥¥w+)¥¥s+(¥¥w+)¥¥s+(.*)", 3, 2, 1)

- 31. Pipe • CsvIteratorで読んだInstanceに入っているdata は単なるString – Malletで扱う形式に変換する必要がある • Instance Pipe.pipe(Instance inst) – 与えられたInstanceを別のInstanceに変換して返す – TokenSequence2FeatureSequenceなど様々な変換 用のPipeが存在する • SerialPipe(List<Pipe>) – 指定されたlist中のpipeを順番に適応するPipe

- 32. InstanceList • Instanceのリストを表現する • InstanceList(Pipe pipe) – InstanceListに入ってくるInstanceを処理するpipe を指定する • void InstanceList.addThruPipe(Iterator inst) – InstanceListにpipeを通しながらIterator経由で データをロードする

- 33. データのロード • PLDA形式のデータを読み込む a 2 is 1 character 1 a 2 is 1 b 1 character 1 after 1 class MyPipe extends Pipe{ static InstanceList load(String fileName) { @Override ArrayList<Pipe> pipeList = new ArrayList<Pipe>(); public Instance pipe(Instance inst) { pipeList.add(new MyPipe()); String data = (String)inst.getData(); pipeList.add(new TokenSequence2FeatureSequence()); String array[] = data.split("¥¥s+"); InstanceList list = TokenSequence ret = new TokenSequence(); new InstanceList(new SerialPipes(pipeList)); for(int i = 0 ; i < array.length ; i += 2){ CsvIterator it = new CsvIterator(fileName, "(.*)",1, 0,0); String word = array[i]; list.addThruPipe(it); int freq = Integer.parseInt(array[i + 1]); return list; for(int f = 0 ; f < freq; ++f){ } ret.add(new Token(word)); } } inst.setData(ret); return inst; } }

- 34. ParallelTopicModel • マルチスレッドなLDA実装 static ParallelTopicModel train(int numberOfTopics , InstanceList training) { ParallelTopicModel ptm = new ParallelTopicModel(numberOfTopics); ptm.addInstances(training); ptm.estimate(); return ptm; } • 訓練後のデータの保存にはwrite(File f)を使う。 – シリアライズされたParallelTopicModelが保存される – static ParallelTopicModel read(File f)で読み込める

- 35. マルチスレッドのパフォーマンス • UCI Datasetのnytimesデータを使用 – 30万文章, 10万単語 – 約1億トークン – トピック数500, 反復回数 500回 1スレッド 276min 33sec 4スレッド 179min 45sec Core i7 920 , JDK 1.6.16 (-server –Xmx1500m)

- 36. トピックの代表的単語の抽出 • printTopWordsを使う 0 0.1847 algorithm learning function gradient convergence parameter error iteration vector 1 0.03452 map dominance ocular development pattern mapping organizing kohonen eye 2 0.01327 hint return data cost market stock prediction load subscriber 3 0.71807 case term result form consider general defined order paper 4 0.02225 face images recognition image faces representation hand video facial 5 0.42392 values line order point number high step result factor 6 0.01545 disparity gamma game play player partition games board operator 7 0.09096 local point region surface contour segment data field path 8 0.04591 prediction series error network predict training road predictor committee 9 0.12844 vector matrix linear space component dimensional point data transformation ...

- 37. 新規文章に関する推論 • getInferencer()を呼びTopicInferencerを取得 する • TopicInferencerのgetSampledDistributionを使 うと各トピックに帰属する確率を取得できる

- 38. 参考文献 • [Asuncion+ 2009] On smoothing and inference for topic models, UAI • [Blei+ 2003] Latent Dirichlet allocation, JMLR • [Griffiths and Steyvers 2004] Finding scientific topics, PNAS • [Newman+ 2007] Distributed inference for latent Dirichlet allocation, NIPS • [Smola and Narayanamurthy 2010] An architecture for parallel topic models, VLDB • [Steyvers and Griffiths 2007] Probabilistic topic models, In Handbook of Latent Semantic Analysis • [Teh+ 2007] A collapsed variational Bayesian inference algorithm for latent Dirichlet allocation, NIPS • [Wallach+ 2009] Rethinking LDA: Why Priors Matter, NIPS • [Wang+ 2009] PLDA: Parallel Latent Dirichlet Allocation for Large-scale Applications, AAIM • [Wilson and Chew 2010] Term Weighting Schemes for Latent Dirichlet Allocation, ACL • [Yan+ 2009] Parallel Inference for Latent Dirichlet Allocation on Graphics Processing Units, NIPS • [Yao+ 2009] Efficient methods for topic model inference on streaming document collections, SIGKDD

- 39. 参考文献2 • [Bao and Chang 2010] AdHeat: an influence-based diffusion model for propagating hints to match ads • [Chen+ 2009] Collaborative filtering for Orkut communities : discovery of user latent behavior • [Lau+ 2010] Best topic word selection for topic labelling, Colling • [Phan+ 2008] Learning to classify short and sparse text & web with hidden topics from large-scale data collections • [Wei and Croft 2006] LDA-based document models for ad-hoc retrieval, SIGIR

![Why LDA

• 文章/単語のトピックを推定すると何の役にた

つか?

• 文章分類

• 次元削減

• 言語モデル

– 情報検索[Wei and Croft 2006]](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-6-320.jpg)

![パラメータは未知

• 実際にはパラメータは推定する必要がある

[Steyvers and Griffiths 2007]より](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-9-320.jpg)

![推論アルゴリズム

• Bleiの元論文[Blei+ 2003]では変分ベイズを

使ったパラメータ推定を行っている

• しかし、実用的には後にでたGibbs samplerを

使ったパラメータ推定法がよく用いられる

[Griffiths and Steyvers 2004]

– 一回当たりの計算が軽い

– 数式が簡単(実装コストが低い)

• 他にもcollapsed変分ベイズという方法もある

[Teh+ 2007, Asuncion+ 2009]](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-10-320.jpg)

![ハイパーパラメータの影響

• 多くの論文では, を天下り的に設定するこ

とが多い

– 実際は, の値によってPerplexityなど変わってく

る [Asuncion+ 2009]

• また、 = 0.1などと各トピックの事前パラメー

タはすべて同じにすることが多い](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-12-320.jpg)

![でも実際は

• の値をトピックごとに差を設けることにより、

トピックの出やすさを表現できる

[Wallach+ 2009]より](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-13-320.jpg)

![他のストップワードへの対応方法

[Wilson and Chew 2010]

• サンプリングの際の| などの計算で単語ご

とに異なる重みづけを用いて計算する

– 重み付けにはtf-idf, PMIなどを用いる](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-14-320.jpg)

![どうやって推定するか

[Wallach+ 2009]より

• 階層的なモデルを立てる

• 一定間隔でハイパーパラメータを最適化する

– 実用上はこちらが階層的モデルと比べ精度も変

わらず、高速

– Malletではこちらを採用](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-15-320.jpg)

![並列化

• マルチコアとかグリッドとか流行ってる

• LDAにおいても並列化しようという話はいくつ

かある

– マルチコア[Newman+ 07]

– グリッド (Mapreduce/MPIを使う)[Wang+ 2009]

– GPGPU [Yan+ 2009]](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-16-320.jpg)

![並列化

• 更新式

文章ごとに独立

• 文章を各プロセッサに分散して配置する

• | , .| は各プロセッサで独立に持つ

– 定期的にグローバルな値を計算し直し、同期する

– グローバルな値をmemcachedに持つという話も

ある[Smola and Narayanamurthy 2010]](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-18-320.jpg)

![高速化

• トピック数Tが多くなっていくとナイーブな実装

だと、一回のGibbs samplerにかかる時間が

O(T)であるため、計算にかかるコストが大きい

• 効率的なサンプリング方法が存在[Yao+ 2009]](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-20-320.jpg)

![データのロード

• PLDA形式のデータを読み込む

a 2 is 1 character 1

a 2 is 1 b 1 character 1 after 1

class MyPipe extends Pipe{ static InstanceList load(String fileName) {

@Override ArrayList<Pipe> pipeList = new ArrayList<Pipe>();

public Instance pipe(Instance inst) { pipeList.add(new MyPipe());

String data = (String)inst.getData(); pipeList.add(new TokenSequence2FeatureSequence());

String array[] = data.split("¥¥s+"); InstanceList list =

TokenSequence ret = new TokenSequence(); new InstanceList(new SerialPipes(pipeList));

for(int i = 0 ; i < array.length ; i += 2){ CsvIterator it = new CsvIterator(fileName, "(.*)",1, 0,0);

String word = array[i]; list.addThruPipe(it);

int freq = Integer.parseInt(array[i + 1]); return list;

for(int f = 0 ; f < freq; ++f){ }

ret.add(new Token(word));

}

}

inst.setData(ret);

return inst;

}

}](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-33-320.jpg)

![参考文献

• [Asuncion+ 2009] On smoothing and inference for topic models, UAI

• [Blei+ 2003] Latent Dirichlet allocation, JMLR

• [Griffiths and Steyvers 2004] Finding scientific topics, PNAS

• [Newman+ 2007] Distributed inference for latent Dirichlet allocation, NIPS

• [Smola and Narayanamurthy 2010] An architecture for parallel topic models, VLDB

• [Steyvers and Griffiths 2007] Probabilistic topic models, In Handbook of Latent

Semantic Analysis

• [Teh+ 2007] A collapsed variational Bayesian inference algorithm for latent

Dirichlet allocation, NIPS

• [Wallach+ 2009] Rethinking LDA: Why Priors Matter, NIPS

• [Wang+ 2009] PLDA: Parallel Latent Dirichlet Allocation for Large-scale Applications,

AAIM

• [Wilson and Chew 2010] Term Weighting Schemes for Latent Dirichlet Allocation,

ACL

• [Yan+ 2009] Parallel Inference for Latent Dirichlet Allocation on Graphics

Processing Units, NIPS

• [Yao+ 2009] Efficient methods for topic model inference on streaming document

collections, SIGKDD](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-38-320.jpg)

![参考文献2

• [Bao and Chang 2010] AdHeat: an influence-based

diffusion model for propagating hints to match ads

• [Chen+ 2009] Collaborative filtering for Orkut

communities : discovery of user latent behavior

• [Lau+ 2010] Best topic word selection for topic

labelling, Colling

• [Phan+ 2008] Learning to classify short and sparse text

& web with hidden topics from large-scale data

collections

• [Wei and Croft 2006] LDA-based document models for

ad-hoc retrieval, SIGIR](https://image.slidesharecdn.com/lda-intro-100925090934-phpapp02/85/LDA-39-320.jpg)

![[DL輪読会]Flow-based Deep Generative Models](https://cdn.slidesharecdn.com/ss_thumbnails/20190307-190328024744-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]The Neural Process Family−Neural Processes関連の実装を読んで動かしてみる−](https://cdn.slidesharecdn.com/ss_thumbnails/20190415dlhacks-190422075753-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets](https://cdn.slidesharecdn.com/ss_thumbnails/20220325okimura-220405024717-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]ICLR2020の分布外検知速報](https://cdn.slidesharecdn.com/ss_thumbnails/iclr2020ood-190927011524-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]Set Transformer: A Framework for Attention-based Permutation-Invariant...](https://cdn.slidesharecdn.com/ss_thumbnails/20200221settransformer-200221020423-thumbnail.jpg?width=560&fit=bounds)

![[Kim+ ICML2012] Dirichlet Process with Mixed Random Measures : A Nonparametri...](https://cdn.slidesharecdn.com/ss_thumbnails/dp-mrmkimicml2012-120727233419-phpapp01-thumbnail.jpg?width=560&fit=bounds)