Using R on High Performance Computers

Download as pptx, pdf0 likes89 views

This document provides an overview of using R and high performance computers (HPC). It discusses why HPC is useful when data becomes too large for a local machine, and strategies like moving to more powerful hardware, using parallel packages, or rewriting code. It also covers topics like accessing HPC resources through batch jobs, setting up the R environment, profiling code, and using packages like purrr and foreach to parallelize workflows. The overall message is that HPC can scale up R analyses, but developers must adapt their code for parallel and distributed processing.

1 of 20

Download to read offline

Ad

Recommended

Bpf performance tools chapter 4 bcc

Bpf performance tools chapter 4 bccViller Hsiao Chapter 4 of the BPF Performance Tools discusses the BPF Compiler Collection (bcc), a toolkit designed for efficient kernel tracing and manipulation. It outlines how to install bcc, develop programs using it, and provides examples of its functionality, specifically focusing on task switching and data retrieval. The chapter also covers alternative profiling tools and offers additional resources for programming with bcc.

Make Your Containers Faster: Linux Container Performance Tools

Make Your Containers Faster: Linux Container Performance ToolsKernel TLV The document discusses techniques for analyzing Linux container performance, emphasizing resource utilization retrieval and deployment strategies for performance tools. It highlights challenges, such as symbol resolution and the need for privileged access, while incorporating tools like 'perf' and BPF for deeper analysis. Additionally, it provides resources and references for further learning in container performance analysis.

20141111 파이썬으로 Hadoop MR프로그래밍

20141111 파이썬으로 Hadoop MR프로그래밍Tae Young Lee The document discusses using Python for MapReduce development with Hadoop Streaming. It explains that Hadoop Streaming allows any language to be used as long as mapper and reducer functions are defined that use standard input/output. Examples of Python mapper and reducer code are provided that count word frequencies in a text file using Hadoop Streaming.

Kernel Recipes 2017: Using Linux perf at Netflix

Kernel Recipes 2017: Using Linux perf at NetflixBrendan Gregg This document discusses using the Linux perf profiling tool at Netflix. It begins with an overview of why Netflix needs Linux profiling to understand CPU usage quickly and completely. It then provides an introduction to the perf tool, covering its basic workflow and commands. The document discusses profiling CPU usage with perf, including potential issues like JIT runtimes and missing symbols. It provides several examples of perf commands for listing, counting, and recording events. The overall summary is that perf allows Netflix to quickly and accurately profile CPU usage across the entire software stack, from applications to libraries to the kernel, to optimize performance.

Performance Profiling in Rust

Performance Profiling in RustInfluxData The document discusses various techniques for profiling CPU and memory performance in Rust programs, including:

- Using the flamegraph tool to profile CPU usage by sampling a running process and generating flame graphs.

- Integrating pprof profiling into Rust programs to expose profiles over HTTP similar to how it works in Go.

- Profiling heap usage by integrating jemalloc profiling and generating heap profiles on program exit.

- Some challenges with profiling asynchronous Rust programs due to the lack of backtraces.

The key takeaways are that there are crates like pprof-rs and techniques like jemalloc integration that allow collecting CPU and memory profiles from Rust programs, but profiling asynchronous programs

Debugging node in prod

Debugging node in prodYunong Xiao - The document discusses debugging Node.js applications in production environments at Netflix, which has strict uptime requirements. It describes techniques used such as collecting stack traces from running processes using perf and visualizing them in flame graphs to identify performance bottlenecks. It also covers configuring Node.js to dump core files on errors to enable post-mortem debugging without affecting uptime. The techniques help Netflix reduce latency, increase throughput, and fix runtime crashes and memory leaks in production Node.js applications.

In-core compression: how to shrink your database size in several times

In-core compression: how to shrink your database size in several timesAleksander Alekseev The document discusses techniques for compressing database size in Postgres, including:

1. Using in-core block-level compression as a feature of Postgres Pro EE to shrink database size by several times.

2. The ZSON extension provides transparent JSONB compression by replacing common strings with 16-bit codes and compressing the data.

3. Various schema optimizations like proper data types, column ordering, and packing data can reduce size by improving storage layout and enabling TOAST compression.

Introduction to SLURM

Introduction to SLURMCSUC - Consorci de Serveis Universitaris de Catalunya The document provides an introduction to SLURM, a job scheduling system used in Linux clusters for managing resource allocation, job execution, and monitoring. It outlines SLURM's capabilities, including job scheduling mechanisms such as FIFO and backfill scheduling, and provides commands for users to interact with the system, like submitting jobs and checking resource availability. Additionally, it offers batch job submission examples and best practices for resource management.

Advanced backup methods (Postgres@CERN)

Advanced backup methods (Postgres@CERN)Anastasia Lubennikova The document discusses advanced PostgreSQL backup and recovery methods, outlining the necessity of backups, different types of backup tools such as Barman, pgBackRest, pg_probackup, and wal-g, and their respective features. It highlights the importance of aspects like convenience, performance, incremental backups, and cloud support when selecting a backup tool. The document also includes detailed information about backup management, wal archive management, streaming backups, and restoring processes.

Porting and Optimization of Numerical Libraries for ARM SVE

Porting and Optimization of Numerical Libraries for ARM SVELinaro Scalasca is an open-source performance analysis toolset for parallel applications that uses MPI and OpenMP, supporting various architectures including ARM64. The latest stable version is 2.3.1, with ongoing developments such as sampling mode and additional plugins for performance analysis. It integrates with the Score-P instrumentation and measurement libraries, providing detailed reports and optimizations for analyzed applications.

Cascalog internal dsl_preso

Cascalog internal dsl_presoHadoop User Group Cascalog is an internal DSL for Clojure that allows defining MapReduce workflows for Hadoop. It provides helper functions, a way to define custom functions analogous to UDFs, and functions to programmatically generate all possible data aggregations from an input based on business requirements. The workflows can be unit tested and executed on Hadoop. Cascalog abstracts away lower-level MapReduce details and allows defining the entire workflow within a single language.

2017 meetup-apache-kafka-nov

2017 meetup-apache-kafka-novFlorian Hussonnois The document discusses several key improvements and changes in Apache Kafka 1.0, including:

1) Tolerating single disk failures in brokers so they remain available.

2) Cleanup and improvements to the Kafka Streams builder API and addition of new classes to view topology and task details.

3) Enhancements to the print and writeAsText methods for debugging streams applications.

4) Addition of exception handlers to Kafka Streams to control behavior on deserialization errors.

E bpf and dynamic tracing for mariadb db as (mariadb day during fosdem 2020)

E bpf and dynamic tracing for mariadb db as (mariadb day during fosdem 2020)Valeriy Kravchuk The document discusses the use of eBPF and dynamic tracing tools for MariaDB DBAs, highlighting tools like ftrace, bcc, and bpftrace for performance profiling. It outlines the limitations of the performance schema, offers suggestions for modern tracing methods, and discusses the different types of tracing events and frontends. The presentation also provides practical examples of using bcc tools in action with MariaDB, emphasizing the advantages of eBPF in modern Linux environments.

More on bpftrace for MariaDB DBAs and Developers - FOSDEM 2022 MariaDB Devroom

More on bpftrace for MariaDB DBAs and Developers - FOSDEM 2022 MariaDB DevroomValeriy Kravchuk The document discusses the use of bpftrace, an eBPF-based tracing tool, specifically for MariaDB developers and DBAs, covering its installation, advanced usage, and challenges such as code coverage testing. It highlights issues encountered during building from source on Ubuntu 20.04, including those related to LLVM versions, and offers insights on accessing complex structures and local variables within the MariaDB server code. Additionally, it emphasizes the potential for developers to leverage bpftrace for efficient debugging and analysis of MariaDB functionalities.

OSBConf 2015 | Backups with rdiff backup and rsnapshot by christoph mitasch &...

OSBConf 2015 | Backups with rdiff backup and rsnapshot by christoph mitasch &...NETWAYS The document provides a detailed overview of two open-source backup tools, rsnapshot and rdiff-backup, discussing their features, benefits, and how to use them effectively. It includes practical examples of configuration, backup procedures, and restoration processes, as well as maintenance tips. Additionally, it touches on automation and monitoring aspects to help users efficiently manage their backup strategies.

Introduction to Parallelization ans performance optimization

Introduction to Parallelization ans performance optimizationCSUC - Consorci de Serveis Universitaris de Catalunya This document provides an overview of parallelization and performance optimization techniques for improving code efficiency. It emphasizes the importance of identifying bottlenecks using profilers, analyzing code structure, and applying appropriate parallel programming paradigms such as MPI and OpenMP. Additionally, it outlines practical programming tips for enhancing memory usage and execution speed in parallel computing environments.

The TCP/IP Stack in the Linux Kernel

The TCP/IP Stack in the Linux KernelDivye Kapoor The document discusses various data structures and functions related to network packet processing in the Linux kernel socket layer. It describes the sk_buff structure that is used to pass packets between layers. It also explains the net_device structure that represents a network interface in the kernel. When a packet is received, the interrupt handler will raise a soft IRQ for processing. The packet will then traverse various protocol layers like IP and TCP to be eventually delivered to a socket and read by a userspace application.

LXC on Ganeti

LXC on Ganetikawamuray This document discusses improvements made to LXC container support in Ganeti through a Google Summer of Code project. Key areas worked on included fixing existing LXC integration, adding unit tests, setting up quality assurance, and adding features like migration. The project was mentored by Hrvoje Ribicic. Future work may include two-level isolation of containers within VMs and live migration of LXC instances.

HCQC : HPC Compiler Quality Checker

HCQC : HPC Compiler Quality CheckerLinaro The document introduces the HPC Compiler Quality Checker (hcqc), a tool developed by Masaki Arai to evaluate and improve the quality of compiler optimizations in high-performance computing applications. It outlines the metrics and configuration needed for quality evaluation, presents various comparisons between different compilers, and discusses future work for enhancements including support for new features and architectures. The hcqc aims to facilitate better performance assessments through detailed analysis of compiler behavior on specific kernels.

Introduction of R on Hadoop

Introduction of R on HadoopChung-Tsai Su R can be used for large scale data analysis on Hadoop through tools like Rhadoop, R + Hadoop Streaming, and Rhipe. The document demonstrates using Rhadoop to analyze a large mortality dataset on Hadoop. It shows how to install Rhadoop, run a word count example, and analyze causes of death from the dataset using MapReduce jobs with R scripts. While Rhadoop allows scaling R to big data on Hadoop, its development is ongoing so backward compatibility must be considered. Other options like Pig with R may provide better integration than Rhadoop alone.

Write on memory TSDB database (gocon tokyo autumn 2018)

Write on memory TSDB database (gocon tokyo autumn 2018)Huy Do The document discusses building a time series database (TSDB) for storing metrics data. It describes needing fast write and read speeds with efficient storage usage. It evaluated existing solutions but found they did not meet performance or cost needs. The team decided to build their own in-memory TSDB using several open source Go packages - Prometheus/tsdb for storage, valyala/gozstd for compression, hashicorp/raft for replication, and LINE/centraldogma for configuration management. Their resulting storage can write over 1 million samples per second and store billions of samples in a single machine.

Ecossistema Hadoop no Magazine Luiza

Ecossistema Hadoop no Magazine LuizaNelson Forte This document discusses the Hadoop ecosystem, including its distributed file system (HDFS), resource management with YARN, and tools like Hive, Impala, HBase, and Pig for processing and analyzing big data. It covers the scalability and versatility of Hadoop for handling large datasets and mentions various functionalities such as data aggregation and SQL-like queries. The document also highlights integration techniques like Sqoop for importing structured data into HDFS.

Odoo command line interface

Odoo command line interfaceJalal Zahid This document summarizes options for running the Odoo server from the command line, including:

- Specifying database, modules, and module paths for installation/updating

- Configuring workers for multiprocessing and limiting resources per worker

- Setting logging options like the log file, database, or specific log levels

- Advanced options like auto-reloading or enabling a proxy

Process scheduling

Process schedulingHao-Ran Liu The document discusses process scheduling in operating systems, focusing on objectives, types of processes (I/O-bound and CPU-bound), and various scheduling policies, including cooperative and preemptive multitasking. It details the Completely Fair Scheduler (CFS), its functioning with time slices, and the implications of process priorities and groups. Additionally, it mentions real-time scheduling and the mechanisms for managing process sleeping and waking in the kernel.

Spying on the Linux kernel for fun and profit

Spying on the Linux kernel for fun and profitAndrea Righi The document discusses techniques for monitoring and tracing the Linux kernel, focusing on tools like strace for system call tracing and eBPF for programmable kernel operations. It outlines the history and evolution of eBPF, its functionality, and various use cases, including real-time tracing of processes and network packets. The importance of understanding kernel behavior for enhancing application performance and security is emphasized.

Data Storage Formats in Hadoop

Data Storage Formats in HadoopBotond Balázs Three data storage formats are described: row-oriented, column-oriented, and record columnar. Record columnar stores data in a hybrid fashion, with columns stored together but records kept together in row groups for better failure handling. Common serialization formats include SequenceFile, Avro, RCFile, and Parquet. Parquet is generally recommended for analytics workloads due to its support for complex data structures and good compression. Failure behavior, read/write speeds, splittability, and compression are important factors to consider when choosing a data storage format and serialization format.

Fluentd v1.0 in a nutshell

Fluentd v1.0 in a nutshellN Masahiro This document summarizes the key features and changes between versions of Fluentd, an open source data collector.

The main points are:

1) Fluentd v1.0 will provide stable APIs and features while remaining compatible with v0.12 and v0.14. It will have no breaking API changes.

2) New features in v0.14 and v1.0 include nanosecond time resolution, multi-core processing, Windows support, improved buffering and plugins, and more.

3) The goals for v1.0 include migrating more plugins to the new APIs, addressing issues, and improving documentation. A release is planned for Q2 2017.

Python arch wiki

Python arch wikifikrul islamy This document provides an overview and instructions for installing and using Python on Arch Linux. It discusses the differences between Python 2 and Python 3, how to install each version, and how to deal with version conflicts. It also lists several integrated development environments (IDEs) for Python programming and describes various widget toolkits available for building graphical user interfaces.

Intro to big data analytics using microsoft machine learning server with spark

Intro to big data analytics using microsoft machine learning server with sparkAlex Zeltov The document discusses Microsoft R Open and its capabilities for parallel and distributed computing, allowing users to reduce computation times without modifying R code. It highlights various libraries and tools for big data processing, machine learning, and model deployment in environments like Hadoop and SQL Server. Additionally, it emphasizes the integration of R with Microsoft's services for real-time scoring and operationalization in applications.

Ml2

Ml2poovarasu maniandan Microsoft R Server allows users to run R code on large datasets in a distributed, parallel manner across SQL Server, Spark, and Hadoop without code changes. It provides scalable machine learning algorithms and tools to operationalize models for real-time scoring. The document discusses how R code can be run remotely on Hadoop and Spark clusters using technologies like RevoScaleR and Sparklyr for scalability.

More Related Content

What's hot (20)

Advanced backup methods (Postgres@CERN)

Advanced backup methods (Postgres@CERN)Anastasia Lubennikova The document discusses advanced PostgreSQL backup and recovery methods, outlining the necessity of backups, different types of backup tools such as Barman, pgBackRest, pg_probackup, and wal-g, and their respective features. It highlights the importance of aspects like convenience, performance, incremental backups, and cloud support when selecting a backup tool. The document also includes detailed information about backup management, wal archive management, streaming backups, and restoring processes.

Porting and Optimization of Numerical Libraries for ARM SVE

Porting and Optimization of Numerical Libraries for ARM SVELinaro Scalasca is an open-source performance analysis toolset for parallel applications that uses MPI and OpenMP, supporting various architectures including ARM64. The latest stable version is 2.3.1, with ongoing developments such as sampling mode and additional plugins for performance analysis. It integrates with the Score-P instrumentation and measurement libraries, providing detailed reports and optimizations for analyzed applications.

Cascalog internal dsl_preso

Cascalog internal dsl_presoHadoop User Group Cascalog is an internal DSL for Clojure that allows defining MapReduce workflows for Hadoop. It provides helper functions, a way to define custom functions analogous to UDFs, and functions to programmatically generate all possible data aggregations from an input based on business requirements. The workflows can be unit tested and executed on Hadoop. Cascalog abstracts away lower-level MapReduce details and allows defining the entire workflow within a single language.

2017 meetup-apache-kafka-nov

2017 meetup-apache-kafka-novFlorian Hussonnois The document discusses several key improvements and changes in Apache Kafka 1.0, including:

1) Tolerating single disk failures in brokers so they remain available.

2) Cleanup and improvements to the Kafka Streams builder API and addition of new classes to view topology and task details.

3) Enhancements to the print and writeAsText methods for debugging streams applications.

4) Addition of exception handlers to Kafka Streams to control behavior on deserialization errors.

E bpf and dynamic tracing for mariadb db as (mariadb day during fosdem 2020)

E bpf and dynamic tracing for mariadb db as (mariadb day during fosdem 2020)Valeriy Kravchuk The document discusses the use of eBPF and dynamic tracing tools for MariaDB DBAs, highlighting tools like ftrace, bcc, and bpftrace for performance profiling. It outlines the limitations of the performance schema, offers suggestions for modern tracing methods, and discusses the different types of tracing events and frontends. The presentation also provides practical examples of using bcc tools in action with MariaDB, emphasizing the advantages of eBPF in modern Linux environments.

More on bpftrace for MariaDB DBAs and Developers - FOSDEM 2022 MariaDB Devroom

More on bpftrace for MariaDB DBAs and Developers - FOSDEM 2022 MariaDB DevroomValeriy Kravchuk The document discusses the use of bpftrace, an eBPF-based tracing tool, specifically for MariaDB developers and DBAs, covering its installation, advanced usage, and challenges such as code coverage testing. It highlights issues encountered during building from source on Ubuntu 20.04, including those related to LLVM versions, and offers insights on accessing complex structures and local variables within the MariaDB server code. Additionally, it emphasizes the potential for developers to leverage bpftrace for efficient debugging and analysis of MariaDB functionalities.

OSBConf 2015 | Backups with rdiff backup and rsnapshot by christoph mitasch &...

OSBConf 2015 | Backups with rdiff backup and rsnapshot by christoph mitasch &...NETWAYS The document provides a detailed overview of two open-source backup tools, rsnapshot and rdiff-backup, discussing their features, benefits, and how to use them effectively. It includes practical examples of configuration, backup procedures, and restoration processes, as well as maintenance tips. Additionally, it touches on automation and monitoring aspects to help users efficiently manage their backup strategies.

Introduction to Parallelization ans performance optimization

Introduction to Parallelization ans performance optimizationCSUC - Consorci de Serveis Universitaris de Catalunya This document provides an overview of parallelization and performance optimization techniques for improving code efficiency. It emphasizes the importance of identifying bottlenecks using profilers, analyzing code structure, and applying appropriate parallel programming paradigms such as MPI and OpenMP. Additionally, it outlines practical programming tips for enhancing memory usage and execution speed in parallel computing environments.

The TCP/IP Stack in the Linux Kernel

The TCP/IP Stack in the Linux KernelDivye Kapoor The document discusses various data structures and functions related to network packet processing in the Linux kernel socket layer. It describes the sk_buff structure that is used to pass packets between layers. It also explains the net_device structure that represents a network interface in the kernel. When a packet is received, the interrupt handler will raise a soft IRQ for processing. The packet will then traverse various protocol layers like IP and TCP to be eventually delivered to a socket and read by a userspace application.

LXC on Ganeti

LXC on Ganetikawamuray This document discusses improvements made to LXC container support in Ganeti through a Google Summer of Code project. Key areas worked on included fixing existing LXC integration, adding unit tests, setting up quality assurance, and adding features like migration. The project was mentored by Hrvoje Ribicic. Future work may include two-level isolation of containers within VMs and live migration of LXC instances.

HCQC : HPC Compiler Quality Checker

HCQC : HPC Compiler Quality CheckerLinaro The document introduces the HPC Compiler Quality Checker (hcqc), a tool developed by Masaki Arai to evaluate and improve the quality of compiler optimizations in high-performance computing applications. It outlines the metrics and configuration needed for quality evaluation, presents various comparisons between different compilers, and discusses future work for enhancements including support for new features and architectures. The hcqc aims to facilitate better performance assessments through detailed analysis of compiler behavior on specific kernels.

Introduction of R on Hadoop

Introduction of R on HadoopChung-Tsai Su R can be used for large scale data analysis on Hadoop through tools like Rhadoop, R + Hadoop Streaming, and Rhipe. The document demonstrates using Rhadoop to analyze a large mortality dataset on Hadoop. It shows how to install Rhadoop, run a word count example, and analyze causes of death from the dataset using MapReduce jobs with R scripts. While Rhadoop allows scaling R to big data on Hadoop, its development is ongoing so backward compatibility must be considered. Other options like Pig with R may provide better integration than Rhadoop alone.

Write on memory TSDB database (gocon tokyo autumn 2018)

Write on memory TSDB database (gocon tokyo autumn 2018)Huy Do The document discusses building a time series database (TSDB) for storing metrics data. It describes needing fast write and read speeds with efficient storage usage. It evaluated existing solutions but found they did not meet performance or cost needs. The team decided to build their own in-memory TSDB using several open source Go packages - Prometheus/tsdb for storage, valyala/gozstd for compression, hashicorp/raft for replication, and LINE/centraldogma for configuration management. Their resulting storage can write over 1 million samples per second and store billions of samples in a single machine.

Ecossistema Hadoop no Magazine Luiza

Ecossistema Hadoop no Magazine LuizaNelson Forte This document discusses the Hadoop ecosystem, including its distributed file system (HDFS), resource management with YARN, and tools like Hive, Impala, HBase, and Pig for processing and analyzing big data. It covers the scalability and versatility of Hadoop for handling large datasets and mentions various functionalities such as data aggregation and SQL-like queries. The document also highlights integration techniques like Sqoop for importing structured data into HDFS.

Odoo command line interface

Odoo command line interfaceJalal Zahid This document summarizes options for running the Odoo server from the command line, including:

- Specifying database, modules, and module paths for installation/updating

- Configuring workers for multiprocessing and limiting resources per worker

- Setting logging options like the log file, database, or specific log levels

- Advanced options like auto-reloading or enabling a proxy

Process scheduling

Process schedulingHao-Ran Liu The document discusses process scheduling in operating systems, focusing on objectives, types of processes (I/O-bound and CPU-bound), and various scheduling policies, including cooperative and preemptive multitasking. It details the Completely Fair Scheduler (CFS), its functioning with time slices, and the implications of process priorities and groups. Additionally, it mentions real-time scheduling and the mechanisms for managing process sleeping and waking in the kernel.

Spying on the Linux kernel for fun and profit

Spying on the Linux kernel for fun and profitAndrea Righi The document discusses techniques for monitoring and tracing the Linux kernel, focusing on tools like strace for system call tracing and eBPF for programmable kernel operations. It outlines the history and evolution of eBPF, its functionality, and various use cases, including real-time tracing of processes and network packets. The importance of understanding kernel behavior for enhancing application performance and security is emphasized.

Data Storage Formats in Hadoop

Data Storage Formats in HadoopBotond Balázs Three data storage formats are described: row-oriented, column-oriented, and record columnar. Record columnar stores data in a hybrid fashion, with columns stored together but records kept together in row groups for better failure handling. Common serialization formats include SequenceFile, Avro, RCFile, and Parquet. Parquet is generally recommended for analytics workloads due to its support for complex data structures and good compression. Failure behavior, read/write speeds, splittability, and compression are important factors to consider when choosing a data storage format and serialization format.

Fluentd v1.0 in a nutshell

Fluentd v1.0 in a nutshellN Masahiro This document summarizes the key features and changes between versions of Fluentd, an open source data collector.

The main points are:

1) Fluentd v1.0 will provide stable APIs and features while remaining compatible with v0.12 and v0.14. It will have no breaking API changes.

2) New features in v0.14 and v1.0 include nanosecond time resolution, multi-core processing, Windows support, improved buffering and plugins, and more.

3) The goals for v1.0 include migrating more plugins to the new APIs, addressing issues, and improving documentation. A release is planned for Q2 2017.

Python arch wiki

Python arch wikifikrul islamy This document provides an overview and instructions for installing and using Python on Arch Linux. It discusses the differences between Python 2 and Python 3, how to install each version, and how to deal with version conflicts. It also lists several integrated development environments (IDEs) for Python programming and describes various widget toolkits available for building graphical user interfaces.

Introduction to Parallelization ans performance optimization

Introduction to Parallelization ans performance optimizationCSUC - Consorci de Serveis Universitaris de Catalunya

Similar to Using R on High Performance Computers (20)

Intro to big data analytics using microsoft machine learning server with spark

Intro to big data analytics using microsoft machine learning server with sparkAlex Zeltov The document discusses Microsoft R Open and its capabilities for parallel and distributed computing, allowing users to reduce computation times without modifying R code. It highlights various libraries and tools for big data processing, machine learning, and model deployment in environments like Hadoop and SQL Server. Additionally, it emphasizes the integration of R with Microsoft's services for real-time scoring and operationalization in applications.

Ml2

Ml2poovarasu maniandan Microsoft R Server allows users to run R code on large datasets in a distributed, parallel manner across SQL Server, Spark, and Hadoop without code changes. It provides scalable machine learning algorithms and tools to operationalize models for real-time scoring. The document discusses how R code can be run remotely on Hadoop and Spark clusters using technologies like RevoScaleR and Sparklyr for scalability.

Extending lifespan with Hadoop and R

Extending lifespan with Hadoop and RRadek Maciaszek The document discusses a project on lifespan extension utilizing R and Hadoop for bioinformatics research at UCL. It highlights the use of R for data science in gene analysis, the challenges of scalability, and the integration of Hadoop for handling large datasets. The future scope includes exploring real-time processing with Storm to enhance computational efficiency.

Tackling repetitive tasks with serial or parallel programming in R

Tackling repetitive tasks with serial or parallel programming in RLun-Hsien Chang The document discusses optimizing R programming for handling repetitive tasks through serial and parallel computing, detailing the syntax and functionality of various R functions and packages. It explains the differences between serial and parallel computing, provides code examples for tasks such as file management and data reading, and emphasizes the performance benefits of parallel processing. Additionally, it highlights the importance of assessing code efficiency and encourages users to leverage multiple CPU cores for independent tasks.

The Powerful Marriage of Hadoop and R (David Champagne)

The Powerful Marriage of Hadoop and R (David Champagne)Revolution Analytics The document presents Revolution Analytics' integration of R with Hadoop, highlighting the capabilities of its packages like rhdfs and rhbase for data manipulation in a big data environment. It emphasizes the ease of using R for complex data analysis without requiring deep knowledge of Hadoop infrastructure. The presentation also discusses the benefits of using R for writing MapReduce programs, showcasing its efficiency and functionality compared to other tools.

Hadoop World 2011: The Powerful Marriage of R and Hadoop - David Champagne, R...

Hadoop World 2011: The Powerful Marriage of R and Hadoop - David Champagne, R...Cloudera, Inc. Revolution Analytics presents the integration of R with Hadoop, highlighting R's capabilities for statistical analysis with large data sets through packages such as rhdfs, rhbase, and rmr. The motivation is to simplify the interaction for R programmers with Hadoop's data stores, enabling efficient data analysis without deep technical infrastructure knowledge. The document discusses functionalities, examples of usage, and advantages of using R in a Hadoop environment for various analytical tasks.

Fundamental of Big Data with Hadoop and Hive

Fundamental of Big Data with Hadoop and HiveSharjeel Imtiaz The document details the fundamentals of big data, focusing on its characteristics such as volume, velocity, variety, and challenges like veracity and variability. It outlines data processing methodologies using Hadoop, including the map-reduce paradigm, and discusses the integration of R for data analysis through specific packages like rhdfs, rmr, and rhive. Additionally, it covers steps for installation and data management within Hadoop ecosystems.

Microsoft R - Data Science at Scale

Microsoft R - Data Science at ScaleSascha Dittmann The document discusses the Microsoft R Server, emphasizing its capabilities as an open-source statistical programming language and data visualization tool used by over 2.5 million users. It addresses the challenges of traditional R, such as limited commercial support and complex deployments, while highlighting Microsoft R's scalability, compatibility with various platforms, and integration with big data technologies. Additionally, it details the functionalities of Microsoft R Server, including parallel processing, predictive modeling, and support for various data sources and algorithms.

St Petersburg R user group meetup 2, Parallel R

St Petersburg R user group meetup 2, Parallel RAndrew Bzikadze This document provides an overview of parallel computing techniques in R using various packages like snow, multicore, and parallel. It begins with motivation for parallelizing R given its limitations of being single-threaded and memory-bound. It then covers the snow package which enables explicit parallelism across computer clusters. The multicore package provides implicit parallelism using forking, but is deprecated. The parallel package acts as a wrapper for snow and multicore. It also discusses load balancing, random number generation, and provides examples of using snow and multicore for parallel k-means clustering and lapply.

Reproducible Computational Research in R

Reproducible Computational Research in RSamuel Bosch The document offers a comprehensive introduction to reproducible computational research using R, highlighting the importance of tracking results, using version control like Git, and best practices for managing R packages. Key topics include writing R Markdown for dynamic document creation, utilizing Packrat for project isolation and version control, and developing R packages with testing practices. It provides practical examples and links to resources for further learning in R programming and reproducibility.

Parallel Computing with R

Parallel Computing with RAbhirup Mallik The document covers parallel computing using R, emphasizing its necessity due to R's default limitation in utilizing multiple cores. It details methodologies for implementing parallel computing through libraries like 'parallel' and 'snow', along with examples like cross-validation and cluster creation. Additional resources and code availability are provided at the end of the document.

R, Hadoop and Amazon Web Services

R, Hadoop and Amazon Web ServicesPortland R User Group The document discusses the integration of R programming with Hadoop and Amazon Web Services (AWS) for large-scale data processing, emphasizing the importance of collaboration and open-source technology. It outlines the basics of Elastic MapReduce, the MapReduce computational paradigm, and shares practical examples and insights from the author's experience in developing applications. Additionally, it highlights the challenges and benefits associated with utilizing AWS for running Hadoop jobs and offers advice on effective data management.

"R, Hadoop, and Amazon Web Services (20 December 2011)"

"R, Hadoop, and Amazon Web Services (20 December 2011)"Portland R User Group The document details a presentation about using Hadoop and Amazon Web Services (AWS) for developing MapReduce applications in R, aiming to engage fellow R users in collaborative efforts and knowledge sharing. It highlights the significance of understanding large data sets and introduces Elastic MapReduce as a powerful, cost-effective tool for parallel computation. Various technical challenges, advantages, and R-specific packages are discussed to aid in efficient data processing and analysis.

SQL Server 2017 Machine Learning Services

SQL Server 2017 Machine Learning ServicesSascha Dittmann The document discusses Microsoft R Server, which integrates various services and environments for big data analytics, including support for Linux, Windows, Hadoop, and Teradata. It outlines the use of R packages, remote execution contexts, and the ability to run predictive algorithms on large datasets through distributed computing. Key functionalities include analyzing data, creating linear models, and executing external scripts within SQL Server environments.

Language-agnostic data analysis workflows and reproducible research

Language-agnostic data analysis workflows and reproducible researchAndrew Lowe The document presents language-agnostic data analysis workflows for reproducible research, showcasing the ability to work across various programming languages without disrupting the analysis process. It discusses tools like feather for data exchange among languages and systems like knitr and R markdown for producing publishable documents that combine text and code seamlessly. It emphasizes the importance of making research reproducible and sharing data and code to benefit collaborations and future research efforts.

R - the language

R - the languageMike Martinez The document provides a comprehensive history and overview of the R programming language, detailing its origins, development, and applications in statistical analysis and data visualization. It outlines various data types, control structures, and key packages used in R, including tools for reproducible research and data exploration. Additionally, it highlights resources for sharing research findings and creating interactive visualizations with R.

Getting started with R & Hadoop

Getting started with R & HadoopJeffrey Breen The document discusses the integration of R programming with Hadoop for data analysis, emphasizing the advantages of MapReduce as a programming pattern for parallel data analysis. It outlines various R packages that facilitate interaction with Hadoop, including their functionalities and usage examples. The presentation also shares personal insights from the speaker on the evolution of their use of R and Hadoop for data-driven analytics.

Running R on Hadoop - CHUG - 20120815

Running R on Hadoop - CHUG - 20120815Chicago Hadoop Users Group The document summarizes a presentation on using R and Hadoop together. It includes:

1) An outline of topics to be covered including why use MapReduce and R, options for combining R and Hadoop, an overview of RHadoop, a step-by-step example, and advanced RHadoop features.

2) Code examples from Jonathan Seidman showing how to analyze airline on-time data using different R and Hadoop options - naked streaming, Hive, RHIPE, and RHadoop.

3) The analysis calculates average departure delays by year, month and airline using each method.

Building a Scalable Data Science Platform with R

Building a Scalable Data Science Platform with RDataWorks Summit/Hadoop Summit This document discusses building a scalable data science platform with R. It describes R as a popular statistical programming language with over 2.5 million users. It notes that while R is widely used, its open source nature means it lacks enterprise capabilities for large-scale use. The document then introduces Microsoft R Server as a way to bring enterprise capabilities like scalability, efficiency, and support to R in order to make it suitable for production use on big data problems. It provides examples of using R Server with Hadoop and HDInsight on the Azure cloud to operationalize advanced analytics workflows from data cleaning and modeling to deployment as web services at scale.

A Step Towards Reproducibility in R

A Step Towards Reproducibility in RRevolution Analytics This document discusses reproducibility in R and tools provided by Revolution Analytics to improve reproducibility. It summarizes R's growing popularity, its use by major companies like Google and Facebook, and fields that use R like insurance, finance, agriculture, and more. It then discusses the importance of reproducible research and defines reproducibility. Finally, it outlines Revolution Analytics' reproducibility tools like Revolution R Open, a static CRAN mirror, daily CRAN snapshots, and the checkpoint package to ensure projects use consistent package versions.

Ad

Recently uploaded (20)

一比一原版(TUC毕业证书)开姆尼茨工业大学毕业证如何办理

一比一原版(TUC毕业证书)开姆尼茨工业大学毕业证如何办理taqyed 鉴于此,办理TUC大学毕业证开姆尼茨工业大学毕业证书【q薇1954292140】留学一站式办理学历文凭直通车(开姆尼茨工业大学毕业证TUC成绩单原版开姆尼茨工业大学学位证假文凭)未能正常毕业?【q薇1954292140】办理开姆尼茨工业大学毕业证成绩单/留信学历认证/学历文凭/使馆认证/留学回国人员证明/录取通知书/Offer/在读证明/成绩单/网上存档永久可查!

如果您处于以下几种情况:

◇在校期间,因各种原因未能顺利毕业……拿不到官方毕业证

◇面对父母的压力,希望尽快拿到;

◇不清楚认证流程以及材料该如何准备;

◇回国时间很长,忘记办理;

◇回国马上就要找工作,办给用人单位看;

◇企事业单位必须要求办理的

◇需要报考公务员、购买免税车、落转户口

◇申请留学生创业基金

【办理开姆尼茨工业大学成绩单Buy Technische Universität Chemnitz Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

开姆尼茨工业大学成绩单能够体现您的的学习能力,包括开姆尼茨工业大学课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

624753984-Annex-A3-RPMS-Tool-for-Proficient-Teachers-SY-2024-2025.pdf

624753984-Annex-A3-RPMS-Tool-for-Proficient-Teachers-SY-2024-2025.pdfCristineGraceAcuyan The little toys

Artigo - Playing to Win.planejamento docx

Artigo - Playing to Win.planejamento docxKellyXavier15 Excelente artifo para quem está iniciando processo de aquisiçãode planejamento estratégico

Presentation by Tariq & Mohammed (1).pptx

Presentation by Tariq & Mohammed (1).pptxAbooddSandoqaa this presenration is talking about data and analaysis and caucusus analysis of the rotten egg tommetos and viral infections

Model Evaluation & Visualisation part of a series of intro modules for data ...

Model Evaluation & Visualisation part of a series of intro modules for data ...brandonlee626749 Model Evaluation & Visualisation part of a series of intro modules for data science

定制OCAD学生卡加拿大安大略艺术与设计大学成绩单范本,OCAD成绩单复刻

定制OCAD学生卡加拿大安大略艺术与设计大学成绩单范本,OCAD成绩单复刻taqyed 2025年极速办安大略艺术与设计大学毕业证【q薇1954292140】学历认证流程安大略艺术与设计大学毕业证加拿大本科成绩单制作【q薇1954292140】海外各大学Diploma版本,因为疫情学校推迟发放证书、证书原件丢失补办、没有正常毕业未能认证学历面临就业提供解决办法。当遭遇挂科、旷课导致无法修满学分,或者直接被学校退学,最后无法毕业拿不到毕业证。此时的你一定手足无措,因为留学一场,没有获得毕业证以及学历证明肯定是无法给自己和父母一个交代的。

【复刻安大略艺术与设计大学成绩单信封,Buy OCAD University Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

安大略艺术与设计大学成绩单能够体现您的的学习能力,包括安大略艺术与设计大学课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

我们承诺采用的是学校原版纸张(原版纸质、底色、纹路)我们工厂拥有全套进口原装设备,特殊工艺都是采用不同机器制作,仿真度基本可以达到100%,所有成品以及工艺效果都可提前给客户展示,不满意可以根据客户要求进行调整,直到满意为止!

【主营项目】

一.安大略艺术与设计大学毕业证【q微1954292140】安大略艺术与设计大学成绩单、留信认证、使馆认证、教育部认证、雅思托福成绩单、学生卡等!

二.真实使馆公证(即留学回国人员证明,不成功不收费)

三.真实教育部学历学位认证(教育部存档!教育部留服网站永久可查)

四.办理国外各大学文凭(一对一专业服务,可全程监控跟踪进度)

英国毕业证范本利物浦约翰摩尔斯大学成绩单底纹防伪LJMU学生证办理学历认证

英国毕业证范本利物浦约翰摩尔斯大学成绩单底纹防伪LJMU学生证办理学历认证 taqyed LJMU利物浦约翰摩尔斯大学毕业证书多少钱【q薇1954292140】1:1原版利物浦约翰摩尔斯大学毕业证+LJMU成绩单【q薇1954292140】完美还原海外各大学毕业材料上的工艺:水印,阴影底纹,钢印LOGO烫金烫银,LOGO烫金烫银复合重叠。文字图案浮雕、激光镭射、紫外荧光、温感、复印防伪等防伪工艺。

【主营项目】

一、工作未确定,回国需先给父母、亲戚朋友看下文凭的情况,办理毕业证|办理文凭: 买大学毕业证|买大学文凭【q薇1954292140】学位证明书如何办理申请?

二、回国进私企、外企、自己做生意的情况,这些单位是不查询毕业证真伪的,而且国内没有渠道去查询国外文凭的真假,也不需要提供真实教育部认证。鉴于此,办理利物浦约翰摩尔斯大学毕业证|LJMU成绩单【q薇1954292140】国外大学毕业证, 文凭办理, 国外文凭办理, 留信网认证

三.材料咨询办理、认证咨询办理请加学历顾问【微信:1954292140】毕业证购买指大学文凭购买,毕业证办理和文凭办理。学院文凭定制,学校原版文凭补办,扫描件文凭定做,100%文凭复刻。

美国毕业证范本中华盛顿大学学位证书CWU学生卡购买

美国毕业证范本中华盛顿大学学位证书CWU学生卡购买Taqyea 1:1原版中华盛顿大学毕业证+CWU成绩单【Q微:1954 292 140】鉴于此,CWUdiploma中华盛顿大学挂科处理解决方案CWU毕业证成绩单专业服务学历认证【Q微:1954 292 140】办理教育部学历认证,留学回国证明,中华盛顿大学毕业证、中华盛顿大学成绩单、中华盛顿大学文凭(留信学历认证+永久存档查询)办理本科+硕士+博士毕业证成绩单学历认证,我们一直是留学生的首选,质量行业第一,诚信可靠。

【中华盛顿大学成绩单一站式办理专业技术完美呈现Central Washington University Transcripts】

购买日韩成绩单、英国大学成绩单、美国大学成绩单、澳洲大学成绩单、加拿大大学成绩单(q微1954292140)新加坡大学成绩单、新西兰大学成绩单、爱尔兰成绩单、西班牙成绩单、德国成绩单。成绩单的意义主要体现在证明学习能力、评估学术背景、展示综合素质、提高录取率,以及是作为留信认证申请材料的一部分。

中华盛顿大学成绩单能够体现您的的学习能力,包括中华盛顿大学课程成绩、专业能力、研究能力。(q微1954292140)具体来说,成绩报告单通常包含学生的学习技能与习惯、各科成绩以及老师评语等部分,因此,成绩单不仅是学生学术能力的证明,也是评估学生是否适合某个教育项目的重要依据!

【主营项目】

一.毕业证【q微1954292140】成绩单、使馆认证、教育部认证、雅思托福成绩单、学生卡等!

二.真实使馆公证(即留学回国人员证明,不成功不收费)

三.真实教育部学历学位认证(教育部存档!教育部留服网站永久可查)

四.办理各国各大学文凭(一对一专业服务,可全程监控跟踪进度)

Flextronics Employee Safety Data-Project-2.pptx

Flextronics Employee Safety Data-Project-2.pptxkilarihemadri This PPT are using to show employee safety

Ad

Using R on High Performance Computers

- 1. using R and High Performance Computers an overview by Dave Hiltbrand

- 2. talking points ● why HPC? ● R environment tips ● staging R scripts for HPC ● purrr::map functions

- 3. what to do if the computation is too big for your desktop/laptop ? • a common user question: – i have an existing R pipeline for my research work. but the data is growing too big. now my R program runs for days (weeks) to finish or simply runs out of memory. • 3 Strategies – move to bigger hardware – advanced libraries/C++ – implement code using parallel packages

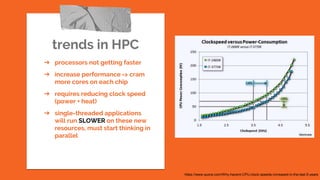

- 4. trends in HPC ➔ processors not getting faster ➔ increase performance => cram more cores on each chip ➔ requires reducing clock speed (power + heat) ➔ single-threaded applications will run SLOWER on these new resources, must start thinking in parallel https://www.quora.com/Why-havent-CPU-clock-speeds-increased-in-the-last-5-years

- 5. strategy 1: powerful hardware Stampede2 - HPC ● KNL - 68 cores (4x hyperthreading 272)/ 96GB mem/ 4200 nodes ● SKX - 48 cores (2x hyperthreading 96)/ 192 GB mem/ 1736 nodes Maverick - Vis ● vis queue: 20 cores/ 256 GB mem/ 132 nodes ○ RStudio/ Jupyter Notebooks ● gpu queue: 132 NVIDIA Telsa K40 GPUs Wrangler - Data ● Hadoop/Spark ● reservations last up to a month

- 6. allocations open to national researcher community do you work in industry? XSEDE ● national organization providing computation resources to ~ 90% of cycles on Stampede2 tip if you need more power all you have to do is ask https://portal.xsede.org /allocations/resource- info

- 7. HPCs are: ➔ typically run with linux ➔ more command line driven ➔ daunting to Windows only users ➔ RStudio helps the transition

- 8. login nodes ➔ always log into the login nodes ➔ shared nodes with limited resources ➔ ok to edit, compile, move files ➔ for R, ok to install packages from login nodes ➔ !!! don’t run R Scripts!!! compute nodes ➔ dedicated nodes for each job ➔ only accessible via a job scheduler ➔ once you have a job running on a node you can ssh into the node

- 9. access R command line ● useful to install packages on login nodes ● using interactive development jobs you can request compute resources to login straight to a compute node and use R via command line RStudio ● availability depends on the structure of the HPC cluster ● at TACC the window to use RStudio is only 4 hours through the visual portal batch Jobs ● best method to use R on HPCs ● relies on a job scheduler to fill your request ● can run multiple R scripts on multiple compute nodes

- 10. sample batch script #!/bin/bash #---------------------------------------------------- # #---------------------------------------------------- #SBATCH -J myjob # Job name #SBATCH -o myjob.o%j # Name of stdout output file #SBATCH -e myjob.e%j # Name of stderr error file #SBATCH -p skx-normal # Queue (partition) name #SBATCH -N 1 # Total # of nodes (must be 1 for serial) #SBATCH -n 1 # Total # of mpi tasks (should be 1 for serial) #SBATCH -t 01:30:00 # Run time (hh:mm:ss) #SBATCH [email protected] #SBATCH --mail-type=all # Send email at begin and end of job #SBATCH -A myproject # Allocation name (req'd if you have more than 1) # Other commands must follow all #SBATCH directives... module list pwd date # Launch serial code... Rscript ./my_analysis.R > output.Rout >> error.Rerr # ---------------------------------------------------

- 11. .libPaths and Rprofile() using your Rprofile.site or .Rprofile files along with the .libPaths() command will allow you to install packages in your user folder and have them load up when you start R on the HPC. in R, a library is the location on disk where you install your packages. R creates a different library for each dot-version of R itself when R starts, it performs a series of steps to initialize the session. you can modify the startup sequence by changing the contents in a number of locations. the following sequence is somewhat simplified: ● first, R reads the file Rprofile.site in the R_Home/etc folder, where R_HOME is the location where you installed R. ○ for example, this file could live at C:RR- 3.2.2etcRprofile.site. ○ making changes to this file affects all R sessions that use this version of R. ○ this might be a good place to define your preferred CRAN mirror, for example. ● next, R reads the file ~/.Rprofile in the user's home folder. ● lastly, R reads the file .Rprofile in the project folder tip i like to make a .Rprofile for each GitHub project repo which loads my most commonly used libraries by default.

- 12. going parallel often you need to convert your code into parallel form to get the most out of HPC. the foreach and doMC packages will let you convert loops from sequential operation to parallel. you can even use multiple nodes if you have a really complex data set with the snow package. require( foreach ) require( doMC ) result <- foreach( i = 1:10, .combine = c) %dopar% { myProc() } require( foreach ) require( doSNOW ) #Get backend hostnames hostnames <- scan( "nodelist.txt", what="", sep="n" ) #Set reps to match core count num.cores <- 4 hostnames <- rep( hostnames, each = num.cores ) cluster <- makeSOCKcluster( hostnames ) registerDoSNOW( cluster ) result <- foreach( i = 1:10, .combine=c ) %dopar% { myProc() } stopCluster( cluster )

- 13. profiling ➔ simple procedure checks with tictoc package ➔ use more advanced packages like microbenchmark for multiple procedures ➔ For an easy to read graphic output use the profvis package to create flamegraphs checkpointing ➔ when writing your script think of procedure runtime ➔ you can save objects in your workflow as a checkpoint ◆ library(readr) ◆ write_rds(obj, “obj.rds”) ➔ if you want to run post hoc analysis it makes it easier to have all the parts

- 14. always start small i’m quick i’m slow build a toy dataset find your typo’s easier to rerun run the real data request the right resources once you run a small dataset you can benchmark resources needed

- 15. if you don’t already you need to Git Git is a command-line tool, but the center around which all things involving Git revolve is the hub— GitHub.com—where developers store their projects and network with like minded people. use RStudio and all the advanced IDE tools on your local machine then push and pull to GitHub to run your job. RStudio features built-in vcs track changes in your analysis, git lets you go back in time to a previous version of your file

- 16. Purrr Package Map functions apply a function iteratively to each element of a list or vector

- 17. the purrr map functions are an optional replacement to the lapply functions. they are not technically faster ( although the speed comparison is in nanoseconds ). the main advantage is to use uniform syntax with other tidyverse applications such as dplyr, tidyr, readr, and stringr as well as the helper functions. map( .x, .f, … ) map( vector_or_list_input, , function_to_apply, optional_other_stuff ) modify( .x, .f, …) ex. modify_at( my.data, 1:5, as.numeric) https://github.com/rstudio/cheatsheets/raw/master/purrr.pdf

- 18. map in parallel another key advantage from purrr is use of lambda functions which has been crucial for analysis involving multiple columns of a data frame. using the same basic syntax we create an anonymous function which maps over many lists simultaneously my.data %<>% mutate( var5 = map2_dbl( .$var3, .$var4, ~ ( .x + .y ) / 2 )) my.data %<>% mutate( var6 = pmap_dbl( list( .$var3, .$var4, .$var5), ~ (..1 + ..2 + ..3) / 3 )) tip using the grammar of graphics, data, and lists through tidyverse packages can build a strong workflow

- 19. closing unburden your personal device ➔ learn basic linux cli using batch job submissions gives you the most flexibility ➔ profile/checkpoint/test resources are not without limits ➔ share your code don’t hold onto code until it’s perfect. use GitHub and get feedback early and often

- 20. $ questions -h refs: 1. https://jennybc.github.io/purrr-tutorial/ 2. https://portal.tacc.utexas.edu/user-guides/stampede2#running-jobs-on-the-stampede2-compute-nodes 3. https://learn.tacc.utexas.edu/mod/page/view.php?id=24 4. http://blog.revolutionanalytics.com/2015/11/r-projects.html